Abstract

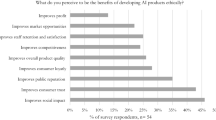

As the artificial intelligence (AI) industry booms and the systems it creates increasingly affect our lives, we are beginning to realize that these systems are not as impartial as we thought them to be. Even though they are machines that make logical decisions, biases and discrimination can creep into the data and models and affect outcomes, causing harm. This pushes us to re-evaluate the design metrics for creating such systems and to put more focus on integrating human values into them. However, even though awareness of the need for ethical AI systems is high, few methodologies are currently available for designers and engineers to incorporate human values into their designs. Our methodology tool aims to address this gap by helping product teams surface fairness concerns, navigate complex ethical choices around fairness, and overcome blind spots and team biases. It can also help them consider the perspectives of multiple parties and stakeholders. With our tool, we aim to lower the barrier to bringing fairness into the design discussion so that more design teams can make better-informed decisions about fairness in their application scenarios.

Acknowledgements

This research is supported, in part, by Nanyang Technological University, Nanyang Assistant Professorship (NAP); Alibaba Group through Alibaba Innovative Research (AIR) Program and Alibaba-NTU Singapore Joint Research Institute (JRI) (Alibaba-NTU-AIR2019B1), Nanyang Technological University, Singapore; the RIE 2020 Advanced Manufacturing and Engineering (AME) Programmatic Fund (No. A20G8b0102), Singapore; and the Joint SDU-NTU Centre for Artificial Intelligence Research (C-FAIR).

Copyright information

© 2021 Springer Nature Switzerland AG

About this paper

Cite this paper

Shu, Y., Zhang, J., Yu, H. (2021). Fairness in Design: A Tool for Guidance in Ethical Artificial Intelligence Design. In: Meiselwitz, G. (eds) Social Computing and Social Media: Experience Design and Social Network Analysis. HCII 2021. Lecture Notes in Computer Science, vol 12774. Springer, Cham. https://doi.org/10.1007/978-3-030-77626-8_34

DOI: https://doi.org/10.1007/978-3-030-77626-8_34

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-77625-1

Online ISBN: 978-3-030-77626-8

eBook Packages: Computer Science, Computer Science (R0)