Abstract

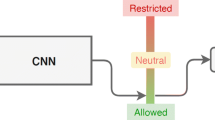

The Internet eases the broadcasting of data, information, and propaganda. The availability of myriad social media platforms has turned the spotlight on violent extremism and expanded the scope and impact of ideology-oriented acts of violence. Automated image classification of this content is a highly sought-after goal, yet it raises the question of potential bias and discrimination when content is classified incorrectly. A prerequisite for addressing, and potentially counteracting, bias is a reliable training dataset. To demonstrate how such a dataset can be developed for highly sensitive topics, this article operationalizes the process of human-coding images posted on the open social web by violent religious extremists into four master categories and four subcategories. We concentrate on the group ISIS because of its prolific digital content creation. The resulting training dataset is used to train a convolutional neural network to automatically detect extremist visual content on social media and determine its category. Using inter-coder reliability, we show that the training data can be coded reliably despite the highly nuanced data and the number of categories and subcategories.
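The abstract does not state which agreement statistic underlies the inter-coder reliability check. As a minimal sketch, assuming two coders and Cohen's kappa (one common choice for this kind of nominal coding), agreement could be computed as follows. The function name, the category labels, and the codings are hypothetical and are not taken from the paper's dataset.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two coders who labelled the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both coders must label the same non-empty set of items")
    n = len(labels_a)
    # Observed agreement: share of items given the same label by both coders.
    p_o = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement, from each coder's marginal label frequencies.
    freq_a = Counter(labels_a)
    freq_b = Counter(labels_b)
    p_e = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if p_e == 1.0:
        return 1.0  # both coders used a single identical label throughout
    return (p_o - p_e) / (1 - p_e)


# Hypothetical codings of eight images; the label names are placeholders.
coder_1 = ["violence", "symbol", "violence", "text", "symbol", "violence", "text", "symbol"]
coder_2 = ["violence", "symbol", "text", "text", "symbol", "violence", "text", "violence"]

kappa = cohens_kappa(coder_1, coder_2)
print(f"{kappa:.3f}")  # 0.628
```

Kappa corrects raw agreement (here 6 of 8 images) for the agreement expected by chance, which matters when some categories are much more frequent than others.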


Notes

1. Generalized descriptions of removable or censor-provoking content are available in the Terms of Service sections of the platforms; see, for example, Facebook’s Community Standards Enforcement page: https://govtrequests.facebook.com/community-standards-enforcement.



Copyright information

© 2021 Springer Nature Switzerland AG

About this paper

Cite this paper

Hall, M., Haas, C. (2021). Brown Hands Aren’t Terrorists: Challenges in Image Classification of Violent Extremist Content. In: Duffy, V.G. (eds) Digital Human Modeling and Applications in Health, Safety, Ergonomics and Risk Management. AI, Product and Service. HCII 2021. Lecture Notes in Computer Science, vol 12778. Springer, Cham. https://doi.org/10.1007/978-3-030-77820-0_15


DOI: https://doi.org/10.1007/978-3-030-77820-0_15

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-77819-4

Online ISBN: 978-3-030-77820-0

eBook Packages: Computer Science, Computer Science (R0)