Abstract

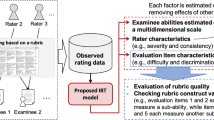

When human raters grade student writing assignments, they often use a scoring rubric consisting of multiple evaluation items to increase the objectivity of evaluation. However, even when a rubric is used, the assigned scores are known to be influenced by the characteristics of both the rubric's evaluation items and the raters, which decreases the reliability of student assessment. To resolve this problem, many recently proposed item response theory (IRT) models for estimating student ability take these characteristics into account. Such IRT models assume unidimensionality, meaning that a rubric measures a single latent ability. In practice, however, this assumption might not hold, because a rubric's evaluation items are often designed to measure multiple sub-abilities that together constitute the targeted ability. To address this issue, this study proposes a multidimensional extension of such an IRT model for rubric-based writing assessment. The proposed model improves assessment reliability and is also useful for an objective, detailed analysis of rubric quality and construct validity. This study demonstrates the effectiveness of the proposed model through simulation experiments and an application to real data.
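The abstract does not give the model's equations. To make the idea of a multidimensional extension more concrete, the following is a minimal sketch that assumes a partial-credit-style formulation: each rubric item has a discrimination weight on every sub-ability, and each rater contributes an additive severity term. The parameter names (theta, a, b, c, d) and the functions category_probs and expected_score are hypothetical illustrations, not the paper's notation or implementation. With a single dimension (L = 1), the sketch reduces to a unidimensional model with a rater severity parameter.

```python
import numpy as np


def category_probs(theta, a, b, c, d):
    """Score-category probabilities for one (student, rubric item, rater) triple.

    Hypothetical multidimensional partial-credit-style formulation (an assumption,
    not the paper's exact model):
      theta : (L,) student's sub-ability vector
      a     : (L,) rubric item's discrimination on each sub-ability
      b     : rubric item difficulty (scalar)
      c     : rater severity (scalar); larger means harsher
      d     : (K-1,) step parameters for scores 1..K-1
    Returns a (K,) array of probabilities for scores 0..K-1.
    """
    theta = np.asarray(theta, dtype=float)
    a = np.asarray(a, dtype=float)
    d = np.asarray(d, dtype=float)
    # Per-step term: weighted sum of sub-abilities minus item, rater, and step effects.
    step_terms = a @ theta - b - c - d
    # Score 0 has exponent 0; score k uses the sum of the first k step terms.
    logits = np.concatenate(([0.0], np.cumsum(step_terms)))
    # Softmax with max subtraction for numerical stability.
    logits -= logits.max()
    p = np.exp(logits)
    return p / p.sum()


def expected_score(p):
    """Expected rubric score given category probabilities for scores 0..K-1."""
    return float(np.dot(np.arange(len(p)), p))


if __name__ == "__main__":
    theta = [1.0, -0.5]          # strong on sub-ability 1, weak on sub-ability 2
    item_1 = [1.2, 0.0]          # hypothetical item measuring only sub-ability 1
    item_2 = [0.0, 1.2]          # hypothetical item measuring only sub-ability 2
    steps = [-1.0, 0.0, 1.0]     # four score categories: 0..3
    for name, a in [("item 1", item_1), ("item 2", item_2)]:
        for severity in (-0.5, 0.5):  # lenient vs. severe rater
            p = category_probs(theta, a, b=0.0, c=severity, d=steps)
            print(name, severity, np.round(p, 3), round(expected_score(p), 3))
```

In this sketch, the same student can receive a higher expected score on an item that loads on a strong sub-ability than on one that loads on a weak sub-ability, which a single ability value cannot express. A more severe rater shifts probability toward lower scores.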

Copyright information

© 2021 Springer Nature Switzerland AG

About this paper

Cite this paper

Uto, M. (2021). A Multidimensional Item Response Theory Model for Rubric-Based Writing Assessment. In: Roll, I., McNamara, D., Sosnovsky, S., Luckin, R., Dimitrova, V. (eds) Artificial Intelligence in Education. AIED 2021. Lecture Notes in Computer Science, vol 12748. Springer, Cham. https://doi.org/10.1007/978-3-030-78292-4_34

DOI: https://doi.org/10.1007/978-3-030-78292-4_34

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-78291-7

Online ISBN: 978-3-030-78292-4

eBook Packages: Computer Science, Computer Science (R0)