Abstract

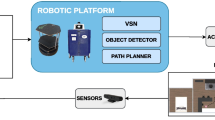

Service robots can undertake tasks that are impractical or even dangerous for humans, such as industrial welding and space exploration. To carry out these tasks reliably, however, they need Visual Intelligence capabilities at least comparable to those of humans. Despite the technological advances enabled by Deep Learning (DL) methods, Machine Visual Intelligence is still vastly inferior to Human Visual Intelligence. Methods that augment DL with Semantic Web technologies, on the other hand, have shown promising results. In the absence of concrete guidelines on which knowledge properties and reasoning capabilities to leverage within this new class of hybrid methods, this PhD work provides a reference framework of epistemic requirements for the development of Visually Intelligent Agents (VIA). Moreover, the proposed framework is used to derive a novel hybrid reasoning architecture that addresses real-world robotic scenarios requiring Visual Intelligence.

Acknowledgements

I would like to thank my supervisors, Prof. Enrico Motta and Dr. Enrico Daga, for their continuous support and guidance throughout this PhD project. It is also thanks to them that I found out about the ESWC PhD Symposium.

Copyright information

© 2021 Springer Nature Switzerland AG

About this paper

Cite this paper

Chiatti, A. (2021). Towards Visually Intelligent Agents (VIA): A Hybrid Approach. In: Verborgh, R., et al. The Semantic Web: ESWC 2021 Satellite Events. ESWC 2021. Lecture Notes in Computer Science, vol 12739. Springer, Cham. https://doi.org/10.1007/978-3-030-80418-3_32

DOI: https://doi.org/10.1007/978-3-030-80418-3_32

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-80417-6

Online ISBN: 978-3-030-80418-3

eBook Packages: Computer Science, Computer Science (R0)