Abstract

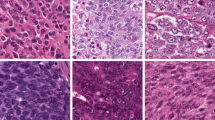

The automated analysis of medical images is currently limited by technical and biological noise and bias. The same source tissue can be represented by vastly different images if the image acquisition or processing protocols vary. For an image analysis pipeline, it is crucial to compensate for such biases to avoid misinterpretations. Here, we evaluate, compare, and improve existing generative model architectures to overcome domain shifts for immunofluorescence (IF) and Hematoxylin and Eosin (H&E) stained microscopy images. To determine the performance of the generative models, the original and transformed images were segmented or classified by deep neural networks that were trained only on images of the target bias. In the scope of our analysis, U-Net cycleGANs trained with an additional identity and an MS-SSIM-based loss and Fixed-Point GANs trained with an additional structure loss led to the best results for the IF and H&E stained samples, respectively. Adapting the bias of the samples significantly improved the pixel-level segmentation for human kidney glomeruli and podocytes and improved the classification accuracy for human prostate biopsies by up to \(14\%\).
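As an illustration of how an identity term and a structural-similarity term can be added to a cycle-consistency objective, the following is a minimal NumPy sketch, not the authors' implementation. Several things here are assumptions: the function names, the loss weights, the choice to apply the structural term between an input and its cycle reconstruction, and the use of a single-scale SSIM computed from global image statistics as a simplified stand-in for MS-SSIM. The adversarial term is omitted.

```python
import numpy as np


def global_ssim(x, y, data_range=1.0):
    """Single-scale SSIM from global image statistics (no sliding window).

    Simplified stand-in for MS-SSIM; assumes images scaled to [0, data_range].
    """
    c1 = (0.01 * data_range) ** 2  # stabilising constants from the SSIM definition
    c2 = (0.03 * data_range) ** 2
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = ((x - mu_x) * (y - mu_y)).mean()
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return numerator / denominator


def generator_regularisers(real_a, cycled_a, real_b, identity_b,
                           lambda_cycle=10.0, lambda_identity=5.0,
                           lambda_ssim=1.0):
    """Combine cycle, identity, and structural terms (hypothetical weights).

    real_a:     image from the source domain A
    cycled_a:   real_a translated A -> B -> A
    real_b:     image from the target domain B
    identity_b: real_b passed through the A -> B generator
    """
    cycle = np.abs(real_a - cycled_a).mean()        # L1 cycle-consistency
    identity = np.abs(real_b - identity_b).mean()   # L1 identity mapping
    structure = 1.0 - global_ssim(real_a, cycled_a)  # structural dissimilarity
    total = (lambda_cycle * cycle
             + lambda_identity * identity
             + lambda_ssim * structure)
    return {"cycle": cycle, "identity": identity,
            "structure": structure, "total": total}


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    img_a = rng.random((64, 64))
    img_b = rng.random((64, 64))
    # A perfect generator pair reproduces its inputs, so every term vanishes.
    print(generator_regularisers(img_a, img_a, img_b, img_b))
```

In a training loop these terms would be added to the adversarial loss of the generators; the structural term penalises translations that alter tissue morphology even when pixel intensities remain close.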

Acknowledgements

This work was supported by DFG (SFB 1192 projects B8, B9 and C3) and by BMBF (eMed Consortia ‘Fibromap’).

Copyright information

© 2021 Springer Nature Switzerland AG

About this paper

Cite this paper

Thebille, AK. et al. (2021). Deep Learning-Based Bias Transfer for Overcoming Laboratory Differences of Microscopic Images. In: Papież, B.W., Yaqub, M., Jiao, J., Namburete, A.I.L., Noble, J.A. (eds) Medical Image Understanding and Analysis. MIUA 2021. Lecture Notes in Computer Science, vol 12722. Springer, Cham. https://doi.org/10.1007/978-3-030-80432-9_25

DOI: https://doi.org/10.1007/978-3-030-80432-9_25

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-80431-2

Online ISBN: 978-3-030-80432-9

eBook Packages: Computer Science, Computer Science (R0)