Abstract

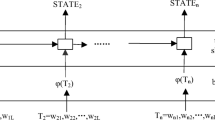

Dialogue State Tracking (DST) is an important component of task-oriented dialogue systems: it predicts the current state of the dialogue given all preceding turns of the conversation. For encoding the dialogue history into a context representation, recurrent neural networks (RNNs) have proven highly effective and have achieved significant improvements in many tasks. However, the difficulty of modeling very long dependencies and the vanishing-gradient problem are two practical problems of RNNs that remain a concern. In this work, building on the recently proposed TRADE model, we improve its encoding component and explore a new approach to context representation learning on sequence data that combines convolution and self-attention with RNNs, aiming to extract local and global features simultaneously and thereby learn a better representation of the dialogue context. Empirical results show that our proposed model achieves 50.04% joint goal accuracy on the five domains of MultiWOZ 2.0.
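
The abstract names the ingredients of the encoder but not how they are wired together. As a rough, hypothetical illustration of how a convolution (local features) and self-attention (global features) can be layered on top of recurrent states, here is a pure-Python sketch. Every concrete choice in it is an assumption rather than the paper's method: a plain tanh RNN stands in for TRADE's GRU encoder, weights are random instead of learned, attention uses identity query/key/value projections, and the three feature streams are simply summed.

```python
import math
import random

Vector = list[float]
Matrix = list[list[float]]


def rand_matrix(rows: int, cols: int, rng: random.Random) -> Matrix:
    # Small random weights; a trained model would learn these instead.
    return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]


def matvec(w: Matrix, x: Vector) -> Vector:
    # Matrix-vector product w @ x.
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]


def rnn_states(xs: list[Vector], w_x: Matrix, w_h: Matrix) -> list[Vector]:
    # Plain tanh RNN, a simplified stand-in (assumption) for TRADE's GRU encoder:
    # h_t = tanh(W_x x_t + W_h h_{t-1}), with h_0 = 0.
    h = [0.0] * len(w_h)
    states = []
    for x in xs:
        h = [math.tanh(a + b) for a, b in zip(matvec(w_x, x), matvec(w_h, h))]
        states.append(h)
    return states


def conv1d(hs: list[Vector], kernels: list[Matrix]) -> list[Vector]:
    # Zero-padded 1-D convolution over time: output t only sees the
    # len(kernels) states centred on t, i.e. local features.
    pad = len(kernels) // 2
    out = []
    for t in range(len(hs)):
        acc = [0.0] * len(kernels[0])
        for j, w in enumerate(kernels):
            s = t + j - pad
            if 0 <= s < len(hs):  # positions outside the sequence act as zeros
                acc = [a + b for a, b in zip(acc, matvec(w, hs[s]))]
        out.append([math.tanh(v) for v in acc])
    return out


def self_attention(hs: list[Vector]) -> list[Vector]:
    # Scaled dot-product self-attention with identity Q/K/V projections:
    # every output is a softmax-weighted mix of all states, i.e. global features.
    d = len(hs[0])
    out = []
    for q in hs:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in hs]
        m = max(scores)  # subtract the max for numerical stability
        exps = [math.exp(s - m) for s in scores]
        z = sum(exps)
        weights = [e / z for e in exps]
        out.append([sum(wt * v[i] for wt, v in zip(weights, hs)) for i in range(d)])
    return out


def encode(xs: list[Vector], hidden: int = 4, kernel_size: int = 3, seed: int = 0) -> list[Vector]:
    # Hypothetical pipeline: RNN states -> (local conv, global attention) -> sum.
    rng = random.Random(seed)
    w_x = rand_matrix(hidden, len(xs[0]), rng)
    w_h = rand_matrix(hidden, hidden, rng)
    kernels = [rand_matrix(hidden, hidden, rng) for _ in range(kernel_size)]
    h = rnn_states(xs, w_x, w_h)
    local = conv1d(h, kernels)
    glob = self_attention(h)
    # Additive fusion is an assumption made for illustration only.
    return [[a + b + c for a, b, c in zip(r, loc, g)] for r, loc, g in zip(h, local, glob)]


if __name__ == "__main__":
    rng = random.Random(1)
    tokens = [[rng.uniform(-1.0, 1.0) for _ in range(6)] for _ in range(5)]  # 5 tokens, 6-dim
    context = encode(tokens)
    print(len(context), len(context[0]))  # 5 4
```

The kernel size bounds how far the convolution can look (one state on each side when `kernel_size=3`), whereas every attention output depends on all positions; that contrast is the local/global split the abstract refers to.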


References

Budzianowski, P., et al.: MultiWOZ - a large-scale multi-domain Wizard-of-Oz dataset for task-oriented dialogue modelling. arXiv preprint arXiv:1810.00278 (2018)

Chung, J., Gulcehre, C., Cho, K., Bengio, Y.: Empirical evaluation of gated recurrent neural networks on sequence modeling. arXiv preprint arXiv:1412.3555 (2014)

Dauphin, Y.N., Fan, A., Auli, M., Grangier, D.: Language modeling with gated convolutional networks. In: International Conference on Machine Learning, pp. 933–941. PMLR (2017)

Gao, S., Sethi, A., Agarwal, S., Chung, T., Hakkani-Tur, D.: Dialog state tracking: a neural reading comprehension approach. arXiv preprint arXiv:1908.01946 (2019)

Goel, R., Paul, S., Hakkani-Tür, D.: HyST: a hybrid approach for flexible and accurate dialogue state tracking. arXiv preprint arXiv:1907.00883 (2019)

Hashimoto, K., Xiong, C., Tsuruoka, Y., Socher, R.: A joint many-task model: growing a neural network for multiple NLP tasks. arXiv preprint arXiv:1611.01587 (2016)

Henderson, M., Thomson, B., Williams, J.D.: The second dialog state tracking challenge. In: Proceedings of the 15th Annual Meeting of the Special Interest Group on Discourse and Dialogue (SIGDIAL), pp. 263–272 (2014)

Kingma, D.P., Ba, J.: Adam: a method for stochastic optimization. arXiv preprint arXiv:1412.6980 (2014)

Kirkpatrick, J., et al.: Overcoming catastrophic forgetting in neural networks. Proc. Natl. Acad. Sci. 114(13), 3521–3526 (2017)

Lee, H., Lee, J., Kim, T.Y.: SUMBT: slot-utterance matching for universal and scalable belief tracking. arXiv preprint arXiv:1907.07421 (2019)

Lei, W., Jin, X., Kan, M.Y., Ren, Z., He, X., Yin, D.: Sequicity: simplifying task-oriented dialogue systems with single sequence-to-sequence architectures. In: Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pp. 1437–1447 (2018)

Mrkšić, N., Ó Séaghdha, D., Wen, T.H., Thomson, B., Young, S.: Neural belief tracker: data-driven dialogue state tracking. arXiv preprint arXiv:1606.03777 (2016)

Nouri, E., Hosseini-Asl, E.: Toward scalable neural dialogue state tracking model. arXiv preprint arXiv:1812.00899 (2018)

van den Oord, A., Kalchbrenner, N., Vinyals, O., Espeholt, L., Graves, A., Kavukcuoglu, K.: Conditional image generation with PixelCNN decoders. arXiv preprint arXiv:1606.05328 (2016)

Pennington, J., Socher, R., Manning, C.D.: GloVe: global vectors for word representation. In: Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), pp. 1532–1543 (2014)

Ren, L.: Scalable and accurate dialogue state tracking via hierarchical sequence generation. University of California, San Diego (2020)

Vaswani, A., et al.: Attention is all you need. arXiv preprint arXiv:1706.03762 (2017)

Wen, T.H., et al.: A network-based end-to-end trainable task-oriented dialogue system. arXiv preprint arXiv:1604.04562 (2016)

Wen, T.H., et al.: A network-based end-to-end trainable task-oriented dialogue system. In: Proceedings of the 15th Conference of the European Chapter of the Association for Computational Linguistics (Volume 1: Long Papers), pp. 438–449 (2017)

Wu, C.S., Madotto, A., Hosseini-Asl, E., Xiong, C., Socher, R., Fung, P.: Transferable multi-domain state generator for task-oriented dialogue systems. In: Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers). Association for Computational Linguistics (2019)

Xu, P., Hu, Q.: An end-to-end approach for handling unknown slot values in dialogue state tracking. arXiv preprint arXiv:1805.01555 (2018)

Zhong, V., Xiong, C., Socher, R.: Global-locally self-attentive dialogue state tracker. arXiv preprint arXiv:1805.09655 (2018)


Copyright information

© 2021 Springer Nature Switzerland AG

About this paper

Cite this paper

He, Y., Tang, Y. (2021). A Neural Language Understanding for Dialogue State Tracking. In: Qiu, H., Zhang, C., Fei, Z., Qiu, M., Kung, SY. (eds) Knowledge Science, Engineering and Management. KSEM 2021. Lecture Notes in Computer Science, vol 12815. Springer, Cham. https://doi.org/10.1007/978-3-030-82136-4_44


DOI: https://doi.org/10.1007/978-3-030-82136-4_44

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-82135-7

Online ISBN: 978-3-030-82136-4

eBook Packages: Computer Science, Computer Science (R0)