Abstract

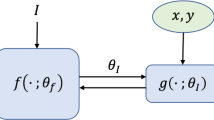

Federated learning (FL) is an emerging distributed machine learning paradigm that avoids sharing data among training nodes in order to protect data privacy. Under the coordination of the FL server, each client trains the model using its own computing resources and private dataset, and the global model can be created by aggregating the clients' training results. To cope with highly non-IID data distributions, personalized federated learning (PFL) has been proposed to improve overall performance by allowing each client to learn a personalized model. However, a major drawback of a personalized model is its loss of generalization. To achieve model personalization while maintaining better generalization, in this paper we propose a new approach, named PFL-MoE, which mixes the outputs of the personalized model and the global model via a mixture-of-experts (MoE) architecture. PFL-MoE is a generic approach and can be instantiated by integrating existing PFL algorithms. In particular, we propose the PFL-MF algorithm, an instance of PFL-MoE based on the freeze-base PFL algorithm. We further improve PFL-MF by enhancing the decision-making ability of the MoE gating network, yielding a variant algorithm, PFL-MFE. We demonstrate the effectiveness of PFL-MoE by training LeNet-5 and VGG-16 models on the Fashion-MNIST and CIFAR-10 datasets with non-IID partitions.
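The abstract describes two mechanisms: the server aggregates client training results into a global model, and each client combines its personalized model with the global model through an MoE gating network. The Python sketch below illustrates both under loudly stated assumptions: aggregation is taken to be a FedAvg-style average of flattened parameter vectors weighted by local sample counts, and the gate is assumed to be a single linear layer over the input followed by a softmax over the two experts. The names `fedavg`, `softmax`, `moe_mix`, `gate_w`, and `gate_b` are hypothetical. This is not the authors' implementation; the abstract does not specify the actual gating architecture, how the gate is trained, or the freeze-base details of PFL-MF.

```python
import math
from typing import List, Sequence


def fedavg(client_params: Sequence[Sequence[float]],
           num_samples: Sequence[int]) -> List[float]:
    """Assumed FedAvg-style aggregation: average flattened client parameter
    vectors, weighting each client by its number of local samples."""
    total = sum(num_samples)
    dim = len(client_params[0])
    return [
        sum(params[i] * n for params, n in zip(client_params, num_samples)) / total
        for i in range(dim)
    ]


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax (subtracts the max logit before exp)."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    s = sum(exps)
    return [e / s for e in exps]


def moe_mix(x: Sequence[float],
            personal_out: Sequence[float],
            global_out: Sequence[float],
            gate_w: Sequence[Sequence[float]],
            gate_b: Sequence[float]) -> List[float]:
    """Mix the class probabilities of the personalized and global experts.

    Hypothetical gate: one linear row per expert over the input features,
    followed by a softmax, so the two gate weights are positive and sum to 1.
    """
    logits = [sum(w * xi for w, xi in zip(row, x)) + b
              for row, b in zip(gate_w, gate_b)]
    g_personal, g_global = softmax(logits)
    return [g_personal * p + g_global * q
            for p, q in zip(personal_out, global_out)]


# Server side: aggregate two clients' toy parameter vectors.
global_params = fedavg([[1.0, 2.0], [3.0, 4.0]], num_samples=[1, 3])
print(global_params)  # [2.5, 3.5]

# Client side: with an all-zero gate, each expert gets weight 0.5.
mixed = moe_mix(x=[0.3, -1.2],
                personal_out=[0.8, 0.2],
                global_out=[0.4, 0.6],
                gate_w=[[0.0, 0.0], [0.0, 0.0]],
                gate_b=[0.0, 0.0])
print(mixed)  # approximately [0.6, 0.4]
```

Because the gate weights come from a softmax, they are positive and sum to 1, so mixing two probability vectors yields another probability vector. Training the gate is not shown; as we read the abstract, a learned gate is what would let each client weigh its personalized expert against the global expert per input, trading personalization against generalization.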

Acknowledgement

This work is supported by the National Key Research and Development Program of China (2018YFB0204303), the National Natural Science Foundation of China (U1801266, U1811461), and the Guangdong Provincial Natural Science Foundation of China (2018B030312002).

Copyright information

© 2021 Springer Nature Switzerland AG

About this paper

Cite this paper

Guo, B., Mei, Y., Xiao, D., Wu, W. (2021). PFL-MoE: Personalized Federated Learning Based on Mixture of Experts. In: U, L.H., Spaniol, M., Sakurai, Y., Chen, J. (eds) Web and Big Data. APWeb-WAIM 2021. Lecture Notes in Computer Science, vol 12858. Springer, Cham. https://doi.org/10.1007/978-3-030-85896-4_37

DOI: https://doi.org/10.1007/978-3-030-85896-4_37

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-85895-7

Online ISBN: 978-3-030-85896-4

eBook Packages: Computer Science, Computer Science (R0)