Abstract

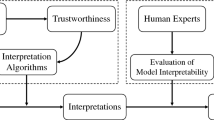

Every day, researchers publish state-of-the-art results obtained with deep learning models. As these models become common even in production, ensuring their fairness has become a central concern. One way to analyze model fairness is through interpretability, that is, by identifying the input features that are essential to the model's decision. Many interpretability methods produce such interpretations of deep learning models, including Saliency, Grad-CAM, Integrated Gradients, and Layer-wise Relevance Propagation. Although all of these methods produce feature importance maps, different methods yield different interpretations, and their evaluation relies on qualitative analysis. In this work, we propose the Iterative post hoc attribution approach, which frames interpretability as an optimization problem guided by two objective definitions of what our solution considers important. We solve this optimization problem with a hybrid approach that combines an optimization algorithm with the deep neural network model. The results show that our approach selects the features essential to the model's prediction more accurately than traditional interpretability methods.
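The abstract does not define the two objectives, the optimization algorithm, or how features are removed, so the following is only a minimal sketch of the general idea under stated assumptions, not the authors' method. It assumes a toy logistic function (`model_prob`, with hypothetical `WEIGHTS` and `BIAS`) standing in for the deep network, and that "removing" a feature means setting it to a baseline of 0. It also takes the two objectives to be sufficiency (the selected features alone preserve the score) and necessity (removing them lowers the score), adds a hypothetical sparsity weight `lam`, and uses plain best-improvement hill climbing, one of several search procedures in the reference list, as the optimizer. The optimizer queries the model only as a black box, which is one possible reading of a hybrid of optimization algorithm and network.

```python
import math
from typing import Callable, List, Sequence, Tuple

# Hypothetical stand-in for a trained deep network: a fixed logistic model.
WEIGHTS = [2.0, -1.0, 0.0, 3.0, 0.5, 0.0, -2.0, 1.5]
BIAS = -1.0

Model = Callable[[Sequence[float]], float]


def model_prob(x: Sequence[float]) -> float:
    """Positive-class probability of the toy black-box model."""
    z = BIAS + sum(w * xi for w, xi in zip(WEIGHTS, x))
    return 1.0 / (1.0 + math.exp(-z))


def apply_mask(x: Sequence[float], mask: Sequence[int], keep: bool,
               baseline: float = 0.0) -> List[float]:
    """If keep=True, retain features with mask 1; if keep=False, retain
    features with mask 0. All other features are set to the baseline."""
    return [xi if (m == 1) == keep else baseline for xi, m in zip(x, mask)]


def fitness(model: Model, x: Sequence[float], mask: Sequence[int],
            lam: float) -> float:
    # Assumed objective 1 (sufficiency): the selected features alone
    # should preserve the model's score.
    sufficiency = model(apply_mask(x, mask, keep=True))
    # Assumed objective 2 (necessity): removing the selected features
    # should lower the model's score.
    necessity = model(x) - model(apply_mask(x, mask, keep=False))
    # Sparsity penalty so the search does not simply select every feature.
    return sufficiency + necessity - lam * sum(mask)


def hill_climb_attribution(model: Model, x: Sequence[float], lam: float = 0.05,
                           max_iters: int = 100) -> Tuple[List[int], float]:
    """Best-improvement hill climbing over binary feature masks."""
    mask = [0] * len(x)
    best = fitness(model, x, mask, lam)
    for _ in range(max_iters):
        best_flip, best_value = None, best
        for i in range(len(x)):
            mask[i] ^= 1                      # try flipping feature i
            value = fitness(model, x, mask, lam)
            mask[i] ^= 1                      # undo the flip
            if value > best_value:
                best_flip, best_value = i, value
        if best_flip is None:                 # local optimum: no flip helps
            break
        mask[best_flip] ^= 1
        best = best_value
    return mask, best


if __name__ == "__main__":
    selected, score = hill_climb_attribution(model_prob, [1.0] * 8)
    print(selected, round(score, 4))
```

For an all-ones input the search selects features 0, 3, and 7, the three with the largest positive weights; zero-weight features are never chosen because switching them on only adds the sparsity penalty. With a real network, `model` would wrap a forward pass, and the mask would more likely cover pixels or superpixels than eight scalars.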


References

Devlin, J., Chang, M.W., Lee, K., Toutanova, K.: BERT: pre-training of deep bidirectional transformers for language understanding. arXiv preprint arXiv:1810.04805 (2018)

Dorigo, M., Birattari, M., Stützle, T.: Ant colony optimization. IEEE Comput. Intell. Mag. 1(4), 28–39 (2006)

Dosovitskiy, A., et al.: An image is worth 16x16 words: transformers for image recognition at scale. arXiv preprint arXiv:2010.11929 (2020)

Gent, I.P., Walsh, T.: Towards an understanding of hill-climbing procedures for SAT. In: AAAI, vol. 93, pp. 28–33 (1993)

Goldberg, D.E.: Genetic Algorithms in Search, Optimization, and Machine Learning. Addison-Wesley, Reading (1989)

Goodfellow, I., Bengio, Y., Courville, A.: Deep Learning, vol. 1. MIT Press, Cambridge (2016)

He, K., Zhang, X., Ren, S., Sun, J.: Deep residual learning for image recognition. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 770–778 (2016)

Kennedy, J., Eberhart, R.: Particle swarm optimization. In: Proceedings of ICNN 1995-International Conference on Neural Networks, vol. 4, pp. 1942–1948. IEEE (1995)

Kingma, D.P., Ba, J.: Adam: a method for stochastic optimization. arXiv preprint arXiv:1412.6980 (2014)

Krizhevsky, A., Hinton, G., et al.: Learning multiple layers of features from tiny images (2009)

LeCun, Y., Bengio, Y., et al.: Convolutional networks for images, speech, and time series. In: The Handbook of Brain Theory and Neural Networks. MIT Press, Cambridge (1995)

Montavon, G., Binder, A., Lapuschkin, S., Samek, W., Müller, K.-R.: Layer-wise relevance propagation: an overview. In: Samek, W., Montavon, G., Vedaldi, A., Hansen, L.K., Müller, K.-R. (eds.) Explainable AI: Interpreting, Explaining and Visualizing Deep Learning. LNCS (LNAI), vol. 11700, pp. 193–209. Springer, Cham (2019). https://doi.org/10.1007/978-3-030-28954-6_10

Nair, V., Hinton, G.E.: Rectified linear units improve restricted Boltzmann machines. In: Proceedings of the 27th International Conference on Machine Learning (ICML-10), pp. 807–814 (2010)

Sattarzadeh, S., et al.: Explaining convolutional neural networks through attribution-based input sampling and block-wise feature aggregation. arXiv preprint arXiv:2010.00672 (2020)

Selvaraju, R.R., Cogswell, M., Das, A., Vedantam, R., Parikh, D., Batra, D.: Grad-CAM: visual explanations from deep networks via gradient-based localization. In: Proceedings of the IEEE International Conference on Computer Vision, pp. 618–626 (2017)

Shrikumar, A., Greenside, P., Kundaje, A.: Learning important features through propagating activation differences. In: Precup, D., Teh, Y.W. (eds.) Proceedings of the 34th International Conference on Machine Learning, ICML 2017, Sydney, NSW, Australia, 6–11 August 2017. Proceedings of Machine Learning Research, vol. 70, pp. 3145–3153. PMLR (2017). http://proceedings.mlr.press/v70/shrikumar17a.html

Simon, M., Rodner, E., Denzler, J.: Part detector discovery in deep convolutional neural networks. In: Cremers, D., Reid, I., Saito, H., Yang, M.-H. (eds.) ACCV 2014. LNCS, vol. 9004, pp. 162–177. Springer, Cham (2015). https://doi.org/10.1007/978-3-319-16808-1_12

Simonyan, K., Vedaldi, A., Zisserman, A.: Deep inside convolutional networks: visualising image classification models and saliency maps. In: Bengio, Y., LeCun, Y. (eds.) 2nd International Conference on Learning Representations, ICLR 2014, Banff, AB, Canada, 14–16 April 2014. Workshop Track Proceedings (2014). http://arxiv.org/abs/1312.6034

Springenberg, J.T., Dosovitskiy, A., Brox, T., Riedmiller, M.A.: Striving for simplicity: the all convolutional net. In: Bengio, Y., LeCun, Y. (eds.) 3rd International Conference on Learning Representations, ICLR 2015, San Diego, CA, USA, 7–9 May 2015. Workshop Track Proceedings (2015). http://arxiv.org/abs/1412.6806

Strumbelj, E., Kononenko, I.: An efficient explanation of individual classifications using game theory. J. Mach. Learn. Res. 11, 1–18 (2010)

Sudhakar, M., Sattarzadeh, S., Plataniotis, K.N., Jang, J., Jeong, Y., Kim, H.: Ada-SISE: adaptive semantic input sampling for efficient explanation of convolutional neural networks. In: IEEE International Conference on Acoustics, Speech and Signal Processing (2021)

Sundararajan, M., Taly, A., Yan, Q.: Axiomatic attribution for deep networks. In: Precup, D., Teh, Y.W. (eds.) Proceedings of the 34th International Conference on Machine Learning, ICML 2017, Sydney, NSW, Australia, 6–11 August 2017. Proceedings of Machine Learning Research, vol. 70, pp. 3319–3328. PMLR (2017). http://proceedings.mlr.press/v70/sundararajan17a.html

Wu, L., Li, S., Hsieh, C.J., Sharpnack, J.: SSE-PT: sequential recommendation via personalized transformer. In: Fourteenth ACM Conference on Recommender Systems, RecSys 2020, pp. 328–337. Association for Computing Machinery, New York (2020). https://doi.org/10.1145/3383313.3412258

Zeiler, M.D., Fergus, R.: Visualizing and understanding convolutional networks. In: Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T. (eds.) ECCV 2014. LNCS, vol. 8689, pp. 818–833. Springer, Cham (2014). https://doi.org/10.1007/978-3-319-10590-1_53

Acknowledgments

This work has been supported by FCT – Fundação para a Ciência e Tecnologia within the R&D Units Project Scope: UIDB/00319/2020. The authors also thank CAPES and CNPq.


Copyright information

© 2021 Springer Nature Switzerland AG

About this paper

Cite this paper

Santos, F.A.O., Zanchettin, C., Silva, J.V.S., Matos, L.N., Novais, P. (2021). A Hybrid Post Hoc Interpretability Approach for Deep Neural Networks. In: Sanjurjo González, H., Pastor López, I., García Bringas, P., Quintián, H., Corchado, E. (eds) Hybrid Artificial Intelligent Systems. HAIS 2021. Lecture Notes in Computer Science, vol 12886. Springer, Cham. https://doi.org/10.1007/978-3-030-86271-8_50


DOI: https://doi.org/10.1007/978-3-030-86271-8_50


Publisher Name: Springer, Cham

Print ISBN: 978-3-030-86270-1

Online ISBN: 978-3-030-86271-8

eBook Packages: Computer Science, Computer Science (R0)