Abstract

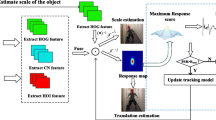

Tracking algorithms based on discriminative correlation filters (DCFs) usually employ fixed weights to integrate the response maps of multiple feature templates. As a result, they fail to exploit the complementarity of these features, each of which is robust to different tracking challenges, e.g., deformation, illumination variation, and occlusion. In this work, we propose a novel DCF-based tracker with adaptive feature fusion learning (AFLCF). Specifically, AFLCF learns the optimal fusion weights for handcrafted and deep feature responses online. The fused response map combines the complementary advantages of multiple features, yielding a robust object representation. Furthermore, an adaptive temporal smoothing penalty adapts to tracking scenarios with motion variation, avoiding model corruption and ensuring reliable model updates. Extensive experiments on five challenging visual tracking benchmarks demonstrate the superiority of AFLCF over other state-of-the-art methods. For example, AFLCF achieves AUC gains of 1.9% and 4.4% on LaSOT over ECO and STRCF, respectively.
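To make the contrast between fixed and adaptive fusion weights concrete, the following is a minimal sketch, not the AFLCF formulation, which the abstract does not spell out. It assumes a hypothetical confidence heuristic (`peak_sharpness`, the peak divided by the mean absolute response) in place of the learned weights, and all function names are illustrative.

```python
from typing import List, Tuple

Map = List[List[float]]


def peak_sharpness(response: Map) -> float:
    """Hypothetical confidence score (an assumption, not from the paper):
    peak value divided by the mean absolute response."""
    values = [v for row in response for v in row]
    mean_abs = sum(abs(v) for v in values) / len(values)
    return max(values) / (mean_abs + 1e-12)


def fuse_responses(responses: List[Map]) -> Tuple[Map, List[float]]:
    """Fuse same-sized response maps with weights normalized to sum to one."""
    scores = [max(peak_sharpness(r), 0.0) for r in responses]
    total = sum(scores)
    if total > 0:
        weights = [s / total for s in scores]
    else:
        # Fall back to uniform (fixed) weights when no map is informative.
        weights = [1.0 / len(responses)] * len(responses)
    rows, cols = len(responses[0]), len(responses[0][0])
    fused = [
        [sum(w * r[i][j] for w, r in zip(weights, responses)) for j in range(cols)]
        for i in range(rows)
    ]
    return fused, weights


def argmax_2d(response: Map) -> Tuple[int, int]:
    """Return (row, col) of the maximum response, i.e. the estimated target position."""
    best = max(
        ((v, i, j) for i, row in enumerate(response) for j, v in enumerate(row)),
        key=lambda t: t[0],
    )
    return best[1], best[2]


# Toy example: a sharp "handcrafted" response and a flat, ambiguous "deep" one.
handcrafted = [[0.0, 0.1, 0.0], [0.1, 1.0, 0.1], [0.0, 0.1, 0.0]]
deep = [[0.5, 0.5, 0.5], [0.5, 0.5, 0.6], [0.5, 0.5, 0.5]]

fused, weights = fuse_responses([handcrafted, deep])
peak = argmax_2d(fused)
```

In this toy case the sharper map receives the larger weight, so the fused peak follows it instead of the ambiguous map. A tracker that learns the weights online would replace the heuristic score with an optimized quantity.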

This work was supported by the National Key Research and Development Program of China under Grant 2019YFB2101904, the National Natural Science Foundation of China under Grants 61732011 and 61876127, the Natural Science Foundation of Tianjin under Grant 17JCZDJC30800, and the Applied Basic Research Program of Qinghai under Grant 2019-ZJ-7017.

References

Bolme, D.S., Beveridge, J.R., Draper, B.A., Lui, Y.M.: Visual object tracking using adaptive correlation filters. In: IEEE Conference on Computer Vision and Pattern Recognition, pp. 2544–2550 (2010). https://doi.org/10.1109/CVPR.2010.5539960

Henriques, J.F., Caseiro, R., Martins, P., Batista, J.: High-speed tracking with kernelized correlation filters. IEEE Trans. Pattern Anal. Mach. Intell. 37(3), 583–596 (2015). https://doi.org/10.1109/TPAMI.2014.2345390

Danelljan, M., Häger, G., Khan, F.S., Felsberg, M.: Learning spatially regularized correlation filters for visual tracking. In: IEEE International Conference on Computer Vision, pp. 4310–4318 (2015). https://doi.org/10.1109/ICCV.2015.490

Galoogahi, H.K., Fagg, A., Lucey, S.: Learning background-aware correlation filters for visual tracking. In: IEEE International Conference on Computer Vision, pp. 1144–1152 (2017). https://doi.org/10.1109/ICCV.2017.129

Dai, K., Wang, D., Lu, H., Sun, C., Li, J.: Visual tracking via adaptive spatially-regularized correlation filters. In: CVPR, pp. 4670–4679 (2019). https://doi.org/10.1109/CVPR.2019.00480

Li, F., Tian, C., Zuo, W., Zhang, L., Yang, M.: Learning spatial-temporal regularized correlation filters for visual tracking. In: CVPR, pp. 4904–4913 (2018). https://doi.org/10.1109/CVPR.2018.00515

Huang, Z., Fu, C., Li, Y., Lin, F., Lu, P.: Learning aberrance repressed correlation filters for real-time UAV tracking. In: ICCV, pp. 2891–2900 (2019). https://doi.org/10.1109/ICCV.2019.00298

Danelljan, M., Häger, G., Khan, F.S., Felsberg, M.: Discriminative scale space tracking. IEEE Trans. Pattern Anal. Mach. Intell. 39(8), 1561–1575 (2016). https://doi.org/10.1109/TPAMI.2016.2609928

Danelljan, M., Bhat, G., Khan, F.S., Felsberg, M.: ECO: efficient convolution operators for tracking. In: CVPR, pp. 6931–6939 (2017). https://doi.org/10.1109/CVPR.2017.733

Ma, C., Huang, J., Yang, X., Yang, M.: Hierarchical convolutional features for visual tracking. In: ICCV, pp. 3074–3082 (2015). https://doi.org/10.1109/ICCV.2015.352

Ma, C., Huang, J., Yang, X., Yang, M.: Robust visual tracking via hierarchical convolutional features. IEEE Trans. Pattern Anal. Mach. Intell. 41(11), 2709–2723 (2019). https://doi.org/10.1109/TPAMI.2018.2865311

Wu, Y., Lim, J., Yang, M.: Object tracking benchmark. IEEE Trans. Pattern Anal. Mach. Intell. 37(9), 1834–1848 (2015). https://doi.org/10.1109/TPAMI.2014.2388226

Zhu, P., Wen, L., Du, D., Bian, X., Hu, Q., Ling, H.: Vision meets drones: past, present and future. arXiv preprint arXiv:1804.07437 (2020)

Galoogahi, H.K., Fagg, A., Huang, C., Ramanan, D., Lucey, S.: Need for speed: a benchmark for higher frame rate object tracking. In: ICCV, pp. 1134–1143 (2017). https://doi.org/10.1109/ICCV.2017.128

Li, S., Yeung, D.: Visual object tracking for unmanned aerial vehicles: a benchmark and new motion models. In: AAAI, pp. 4140–4146 (2017)

Fan, H., et al.: LaSOT: a high-quality benchmark for large-scale single object tracking. In: CVPR, pp. 5374–5383 (2019). https://doi.org/10.1109/CVPR.2019.00552

Fan, H., Ling, H.: Parallel tracking and verifying: a framework for real-time and high accuracy visual tracking. In: ICCV, pp. 5487–549 (2017). https://doi.org/10.1109/ICCV.2017.585

Li, F., Wu, X., Zuo, W., Zhang, D., Zhang, L.: Remove cosine window from correlation filter-based visual trackers: when and how. IEEE Trans. Image Process. 29, 7045–7060 (2020). https://doi.org/10.1109/TIP.2020.2997521

Li, Y., Fu, C., Ding, F., Huang, Z., Lu, G.: AutoTrack: towards high-performance visual tracking for UAV with automatic spatio-temporal regularization. In: CVPR, pp. 11920–11929 (2020). https://doi.org/10.1109/CVPR42600.2020.01194

Xu, T., Feng, Z., Wu, X., Kittler, J.: Learning adaptive discriminative correlation filters via temporal consistency preserving spatial feature selection for robust visual object tracking. IEEE Trans. Image Process. 28, 7949–7959 (2019). https://doi.org/10.1109/TIP.2019.2919201

Lukežič, A., Vojíř, T., Čehovin Zajc, L., Matas, J., Kristan, M.: Discriminative correlation filter tracker with channel and spatial reliability. Int. J. Comput. Vis. 126(7), 671–688 (2018). https://doi.org/10.1007/s11263-017-1061-3

Mueller, M., Smith, N., Ghanem, B.: Context-aware correlation filter tracking. In: CVPR, pp. 1387–1395 (2017). https://doi.org/10.1109/CVPR.2017.152

Choi, J., et al.: Context-aware deep feature compression for high-speed visual tracking. In: CVPR, pp. 479–488 (2018). https://doi.org/10.1109/CVPR.2018.00057

Guo, Q., Feng, W., Zhou, C., Huang, R., Wan, L., Wang, S.: Learning dynamic Siamese network for visual object tracking. In: ICCV, pp. 1781–1789 (2017). https://doi.org/10.1109/ICCV.2017.196

Bertinetto, L., Valmadre, J., Henriques, J.F., Vedaldi, A., Torr, P.H.S.: Fully-convolutional Siamese networks for object tracking. In: Hua, G., Jégou, H. (eds.) ECCV 2016. LNCS, vol. 9914, pp. 850–865. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-48881-3_56

Wang, N., Zhou, W., Tian, Q., Hong, R., Wang, M., Li, H.: Multi-cue correlation filters for robust visual tracking. In: CVPR, pp. 4844–4853 (2018). https://doi.org/10.1109/CVPR.2018.00509

Bhat, G., Johnander, J., Danelljan, M., Khan, F.S., Felsberg, M.: Unveiling the power of deep tracking. In: Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y. (eds.) ECCV 2018. LNCS, vol. 11206, pp. 493–509. Springer, Cham (2018). https://doi.org/10.1007/978-3-030-01216-8_30

He, K., Zhang, X., Ren, S., Sun, J.: Deep residual learning for image recognition. In: CVPR, pp. 770–778 (2016). https://doi.org/10.1109/CVPR.2016.90

Bertinetto, L., Valmadre, J., Golodetz, S., Miksik, O., Torr, P.H.S.: Complementary learners for real-time tracking. In: CVPR, pp. 1401–1409 (2016). https://doi.org/10.1109/CVPR.2016.156

Ma, C., Yang, X., Zhang, C., Yang, M.: Long-term correlation tracking. In: CVPR, pp. 5388–5396 (2015). https://doi.org/10.1109/CVPR.2015.7299177

© 2021 Springer Nature Switzerland AG

About this paper

Cite this paper

Yu, H., Zhu, P. (2021). Adaptive Correlation Filters Feature Fusion Learning for Visual Tracking. In: Farkaš, I., Masulli, P., Otte, S., Wermter, S. (eds) Artificial Neural Networks and Machine Learning – ICANN 2021. Lecture Notes in Computer Science, vol 12895. Springer, Cham. https://doi.org/10.1007/978-3-030-86383-8_52

DOI: https://doi.org/10.1007/978-3-030-86383-8_52

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-86382-1

Online ISBN: 978-3-030-86383-8

eBook Packages: Computer Science, Computer Science (R0)