Abstract

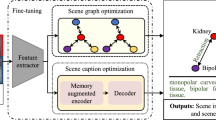

Generating surgical reports for surgical scene understanding in robot-assisted surgery can support documentation entry tasks and post-operative analysis. Despite impressive results, the performance of deep learning models degrades when they are applied to different domains and encounter domain shift. In addition, new instruments and variations in surgical tissues appear in robotic surgery. In this work, we propose class-incremental domain adaptation (CIDA) with a multi-layer transformer-based model to tackle new classes and domain shift in the target domain and to generate surgical reports during robotic surgery. To adapt to incremental classes and extract domain-invariant features, a class-incremental (CI) learning method with supervised contrastive (SupCon) loss is incorporated into the feature extractor. To generate captions from the extracted features, curriculum by one-dimensional Gaussian smoothing (CBS) is integrated with a multi-layer transformer-based caption prediction model. CBS smooths the feature embeddings using anti-aliasing and helps the model learn domain-invariant features. We also adopt label smoothing (LS) to calibrate prediction probabilities and obtain better feature representations in both the feature extractor and the captioning model. The proposed techniques are empirically evaluated on datasets from two surgical domains: nephrectomy operations and transoral robotic surgery. We observe that domain-invariant feature learning and a well-calibrated network improve surgical report generation performance in both the source and target domains under domain shift and unseen classes, in one-shot and few-shot learning settings. The code is publicly available at https://github.com/XuMengyaAmy/CIDACaptioning.
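The two smoothing ideas named in the abstract can be illustrated with a minimal sketch. This is not the authors' implementation (the linked repository holds that); it only shows the general form of one-dimensional Gaussian smoothing of a feature embedding and of label smoothing. The function names, the kernel radius, the edge-replication padding, the exponential decay schedule for sigma, and the value epsilon = 0.1 are all assumptions chosen for illustration.

```python
import math


def gaussian_kernel_1d(sigma, radius=1):
    """Normalized 1D Gaussian kernel with 2 * radius + 1 taps (sigma must be > 0)."""
    weights = [math.exp(-(i * i) / (2.0 * sigma * sigma))
               for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def smooth_features(features, sigma, radius=1):
    """Low-pass filter a 1D feature embedding with a Gaussian kernel.

    Edge values are replicated as padding (an assumption of this sketch).
    """
    kernel = gaussian_kernel_1d(sigma, radius)
    n = len(features)
    smoothed = []
    for i in range(n):
        acc = 0.0
        for k, w in enumerate(kernel):
            j = min(max(i + k - radius, 0), n - 1)  # clamp index at the edges
            acc += w * features[j]
        smoothed.append(acc)
    return smoothed


def sigma_schedule(initial_sigma, decay, epoch):
    """Hypothetical curriculum: shrink sigma each epoch so smoothing fades out."""
    return initial_sigma * (decay ** epoch)


def smooth_labels(target_index, num_classes, epsilon=0.1):
    """Label smoothing: mix the one-hot target with a uniform distribution."""
    uniform = epsilon / num_classes
    return [(1.0 - epsilon) + uniform if c == target_index else uniform
            for c in range(num_classes)]


if __name__ == "__main__":
    embedding = [0.0, 0.0, 1.0, 0.0, 0.0]
    for epoch in range(3):
        sigma = sigma_schedule(1.0, 0.5, epoch)
        print(epoch, smooth_features(embedding, sigma))
    print(smooth_labels(target_index=1, num_classes=4))
```

As sigma decreases, the kernel concentrates on its center tap and the filter approaches the identity, so the model sees progressively less-smoothed features. The smoothed label vector still sums to one, but the target class no longer receives the full probability mass, which discourages over-confident predictions.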

M. Xu and M. Islam—Equal contributions.

Notes

1. https://youtu.be/bwpEul4KCSc

Acknowledgements

This work was supported by the Shun Hing Institute of Advanced Engineering (SHIAE project, 8115064#BME-p1-21) at the Chinese University of Hong Kong (CUHK) and by the Singapore Academic Research Fund under Grant R397000353114. We sincerely thank Lalithkumar Seenivasan for his help with the incremental learning part of this work.

Copyright information

© 2021 Springer Nature Switzerland AG

About this paper

Cite this paper

Xu, M., Islam, M., Lim, C.M., Ren, H. (2021). Class-Incremental Domain Adaptation with Smoothing and Calibration for Surgical Report Generation. In: de Bruijne, M., et al. Medical Image Computing and Computer Assisted Intervention – MICCAI 2021. MICCAI 2021. Lecture Notes in Computer Science, vol 12904. Springer, Cham. https://doi.org/10.1007/978-3-030-87202-1_26

DOI: https://doi.org/10.1007/978-3-030-87202-1_26

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-87201-4

Online ISBN: 978-3-030-87202-1

eBook Packages: Computer Science (R0)