Abstract

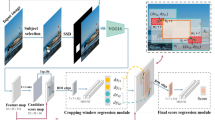

Numerous factors can affect the aesthetic quality of an image: composition, resolution, exposure, color saturation, and so on. Image cropping aims to improve aesthetic quality by recomposing the image. When composition is the only consideration of an image cropping system, the automatic cropping algorithm should be a single-input single-output system (SISOS). However, most existing approaches are multiple-input multiple-output systems (MIMOS) that consider image composition and image aesthetics simultaneously. In these MIMOSs, the cropping result may change when composition-irrelevant factors (e.g., resolution, exposure, color saturation) vary, which is undesirable to users. Based on this observation, we separate image composition from aesthetics and use the saliency map to obtain a composition-based SISOS. We observe that although the saliency map is robust to composition-irrelevant factors, it is a less informative data format for composition. Hence, we transform it into a salient cluster, which is similar to a point cloud. The salient points in the salient cluster directly describe the spatial structure of an image, so the salient cluster can be treated as an expression of composition and serves as the only input of the proposed model. Our model is based on PointNet and consists of a content screening module (CSM) and a composition regression module (CRM). The CSM extracts the points of interest, and the CRM outputs a cropping box. Experimental results on public datasets show that, compared with prior art, our network is more robust to composition-irrelevant factors while achieving comparable or better performance.
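To make the idea of a salient cluster more concrete, the following is a minimal sketch of how a saliency map might be turned into a point-cloud-like set of salient points. The abstract does not specify the conversion, so the threshold rule, the `(x, y, saliency)` point layout, and the function names `saliency_to_cluster` and `spatial_extent` are all assumptions made for illustration. The sketch does not implement the CSM or CRM, which are learned PointNet-style modules.

```python
from typing import List, Tuple

# Assumed point layout: (x, y, saliency), with x and y normalized to [0, 1].
Point = Tuple[float, float, float]


def saliency_to_cluster(saliency: List[List[float]],
                        threshold: float = 0.5) -> List[Point]:
    """Convert a 2D saliency map into a list of salient points.

    Hypothetical helper: keeping pixels at or above a fixed threshold is an
    assumption for illustration, not the procedure used in the paper.
    """
    height = len(saliency)
    width = len(saliency[0]) if height else 0
    points: List[Point] = []
    for row, line in enumerate(saliency):
        for col, value in enumerate(line):
            if value >= threshold:
                # Pixel centers, normalized so the coordinates do not
                # depend on the image resolution.
                x = (col + 0.5) / width
                y = (row + 0.5) / height
                points.append((x, y, value))
    return points


def spatial_extent(points: List[Point]) -> Tuple[float, float, float, float]:
    """Return (x_min, y_min, x_max, y_max) of the salient points.

    This only summarizes the spatial structure carried by the points; it is
    not the learned cropping box that the CRM would regress.
    """
    if not points:
        raise ValueError("no salient points to summarize")
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    return (min(xs), min(ys), max(xs), max(ys))


if __name__ == "__main__":
    toy_map = [
        [0.0, 0.1, 0.0, 0.0],
        [0.0, 0.9, 0.8, 0.0],
        [0.0, 0.0, 0.7, 0.0],
    ]
    cluster = saliency_to_cluster(toy_map, threshold=0.5)
    print(cluster)
    print(spatial_extent(cluster))
```

Because the points are derived only from the saliency map, a downstream model that consumes them never sees pixel colors directly. This matches the abstract's motivation for using the salient cluster as the sole input.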

Copyright information

© 2021 Springer Nature Switzerland AG

About this paper

Cite this paper

Pan, Z., Xian, K., Lu, H., Cao, Z. (2021). Robust Image Cropping by Filtering Composition Irrelevant Factors. In: Peng, Y., Hu, SM., Gabbouj, M., Zhou, K., Elad, M., Xu, K. (eds) Image and Graphics. ICIG 2021. Lecture Notes in Computer Science, vol 12890. Springer, Cham. https://doi.org/10.1007/978-3-030-87361-5_23

DOI: https://doi.org/10.1007/978-3-030-87361-5_23

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-87360-8

Online ISBN: 978-3-030-87361-5

eBook Packages: Computer Science, Computer Science (R0)