Abstract

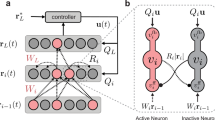

The human brain effectively integrates prior knowledge into new skills by transferring experience across tasks without suffering from catastrophic forgetting. In this study, to continually learn a sequence of visual classification tasks, we employ Progressive Neural Networks (PNNs), a neural network model with lateral connections. We sparsify PNNs with the sparse group Least Absolute Shrinkage and Selection Operator (LASSO) and train conventional PNNs with recursive connections. We then investigate the effect of prior tasks on current performance under various task orders. The proposed approach is evaluated on permutedMNIST and selected subtasks from the CIFAR-100 dataset. The results show that sparse group LASSO regularization effectively sparsifies progressive neural networks and that the order of the task sequence affects performance.
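To make the regularizer concrete, the following is a minimal sketch of a sparse group LASSO penalty for a single dense layer. It assumes a common formulation: a group term that sums the L2 norms of the weight groups, scaled by the square root of the group size, plus an elementwise L1 term. Treating each row of the weight matrix as one group, and the names `sparse_group_lasso_penalty`, `lam_group`, and `lam_l1`, are illustrative assumptions and not details taken from the paper.

```python
import numpy as np


def sparse_group_lasso_penalty(weights, lam_group=1e-3, lam_l1=1e-3):
    """Sparse group LASSO penalty for one dense layer (illustrative sketch).

    weights: 2-D array of shape (n_in, n_out). Each row is treated as one
    group (an assumption made here: the outgoing weights of one input unit).
    """
    weights = np.asarray(weights, dtype=float)
    group_size = weights.shape[1]

    # Group term: sum of per-row L2 norms, scaled by sqrt(group size).
    # Driving a whole row norm to zero removes that unit's connections.
    group_norms = np.sqrt((weights ** 2).sum(axis=1))
    group_term = np.sqrt(group_size) * group_norms.sum()

    # L1 term: encourages sparsity inside the groups that survive.
    l1_term = np.abs(weights).sum()

    return lam_group * group_term + lam_l1 * l1_term


# Toy example: one active row and one row that is already all zeros.
W = np.array([[3.0, 4.0],
              [0.0, 0.0]])
penalty = sparse_group_lasso_penalty(W, lam_group=1.0, lam_l1=1.0)
```

In training, a term of this kind would be added to the task loss for each regularized layer. The zero row contributes nothing to either term, which is why the penalty favors removing whole groups of connections.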

Supported by the Vodafone Future Laboratory, Istanbul Technical University (ITU), under Grant ITUVF20180901P04.

Copyright information

© 2021 Springer Nature Switzerland AG

About this paper

Cite this paper

Ergün, E., Töreyin, B.U. (2021). Sparse Progressive Neural Networks for Continual Learning. In: Wojtkiewicz, K., Treur, J., Pimenidis, E., Maleszka, M. (eds) Advances in Computational Collective Intelligence. ICCCI 2021. Communications in Computer and Information Science, vol 1463. Springer, Cham. https://doi.org/10.1007/978-3-030-88113-9_58

DOI: https://doi.org/10.1007/978-3-030-88113-9_58

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-88112-2

Online ISBN: 978-3-030-88113-9

eBook Packages: Computer Science, Computer Science (R0)