Abstract

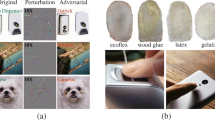

Well-trained deep neural networks (DNNs) are an indispensable part of the intellectual property of the model owner. However, the confidentiality of models is threatened by model piracy, which steals a DNN and obfuscates the pirated model with post-processing techniques. To counter model piracy, recent works propose several model fingerprinting methods, which commonly use a special set of adversarial examples of the owner's classifier as the fingerprints and verify whether a suspect model is pirated by checking whether the suspect model's predictions on the fingerprints match those of the owner's model. However, existing fingerprinting schemes are limited to classification models and usually require access to the training data. In this paper, we propose the first Task-Agnostic Fingerprinting Algorithm (TAFA) for the broad family of neural networks with rectified linear units. Compared with existing adversarial example-based fingerprinting algorithms, TAFA enables model fingerprinting for DNNs on a variety of downstream tasks, including but not limited to classification, regression and generative modeling, with no assumption of training data access. Extensive experimental results on three typical scenarios validate the effectiveness and the robustness of TAFA.
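The generic verification criterion used by existing adversarial example-based schemes can be illustrated with a short sketch. It assumes classifiers that return a class index and a hypothetical agreement threshold of 0.9; the names `match_rate` and `is_pirated`, the toy one-feature models and the threshold are illustrative only and do not describe TAFA itself, which is designed for arbitrary tasks.

```python
from typing import Callable, Sequence

Model = Callable[[Sequence[float]], int]  # maps an input to a predicted class index


def match_rate(owner: Model, suspect: Model,
               fingerprints: Sequence[Sequence[float]]) -> float:
    """Fraction of fingerprint inputs on which both models predict the same class."""
    if not fingerprints:
        raise ValueError("at least one fingerprint is required")
    agree = sum(owner(x) == suspect(x) for x in fingerprints)
    return agree / len(fingerprints)


def is_pirated(owner: Model, suspect: Model,
               fingerprints: Sequence[Sequence[float]],
               threshold: float = 0.9) -> bool:
    # Hypothetical decision rule: flag the suspect when agreement reaches the threshold.
    return match_rate(owner, suspect, fingerprints) >= threshold


# Toy one-feature classifiers; the fingerprints lie close to the owner's boundary at 0.5.
def owner(x: Sequence[float]) -> int:
    return int(x[0] > 0.5)


def pirated(x: Sequence[float]) -> int:
    return int(x[0] > 0.5)  # an exact copy of the owner's decision rule


def independent(x: Sequence[float]) -> int:
    return int(x[0] > 0.8)  # a separately trained model with a different boundary


fingerprints = [[0.6], [0.7], [0.55], [0.9]]
```

Here `match_rate(owner, pirated, fingerprints)` is 1.0, while `match_rate(owner, independent, fingerprints)` is 0.25: the independent model disagrees on three of the four near-boundary inputs, which is why fingerprints are chosen close to the owner's decision boundary.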


Notes

1. Without loss of generality, we only formalize a convolutional layer whose stride is 1, whose padding is 0 and whose kernel is square.

References

[1] He, K., Zhang, X., et al.: Deep residual learning for image recognition. In: CVPR, pp. 770–778 (2016)

[2] Devlin, J., Chang, M.W., et al.: BERT: pre-training of deep bidirectional transformers for language understanding. In: NAACL-HLT (2019)

[3] Cao, Y., Xiao, C., et al.: Adversarial sensor attack on LiDAR-based perception in autonomous driving. In: CCS (2019)

[4] Heaton, J.B., Polson, N.G., et al.: Deep learning for finance: deep portfolios. In: Econometric Modeling: Capital Markets - Portfolio Theory eJournal (2016)

[5] Esteva, A., Kuprel, B., et al.: Dermatologist-level classification of skin cancer with deep neural networks. In: Nature (2017)

[6] Wenskay, D.L.: Intellectual property protection for neural networks. In: Neural Networks (1990)

[7] Uchida, Y., Nagai, Y., et al.: Embedding watermarks into deep neural networks. In: ICMR (2017)

[8] Adi, Y., Baum, C., et al.: Turning your weakness into a strength: watermarking deep neural networks by backdooring. In: USENIX Security Symposium (2018)

[9] Cao, X., Jia, J., et al.: IPGuard: protecting the intellectual property of deep neural networks via fingerprinting the classification boundary. In: AsiaCCS (2021)

[10] Cox, I., Miller, M., et al.: Digital watermarking. In: Lecture Notes in Computer Science (2003)

[11] Wang, J., Wu, H., et al.: Watermarking in deep neural networks via error back-propagation. In: Electronic Imaging (2020)

[12] Szegedy, C., Zaremba, W., Sutskever, I., Bruna, J., et al.: Intriguing properties of neural networks. ArXiv (2014)

[13] Lukas, N., Zhang, Y., et al.: Deep neural network fingerprinting by conferrable adversarial examples. ArXiv (2019)

[14] Zhao, J., Hu, Q., et al.: AFA: adversarial fingerprinting authentication for deep neural networks. Comput. Commun. 150, 488–497 (2020)

[15] PyTorch Hub. https://pytorch.org/hub/, Accessed 01 Feb 2021

[16] Amazon AWS. https://aws.amazon.com/, Accessed 01 Feb 2021

[17] Nair, V., Hinton, G.E.: Rectified linear units improve restricted Boltzmann machines. In: ICML (2010)

[18] Tramèr, F., Zhang, F., et al.: Stealing machine learning models via prediction APIs. In: USENIX Security Symposium (2016)

[19] Jagielski, M., Carlini, N., et al.: High accuracy and high fidelity extraction of neural networks. In: USENIX Security Symposium (2020)

[20] Rolnick, D., Kording, K.P.: Reverse-engineering deep ReLU networks. In: ICML (2020)

[21] Montúfar, G., Pascanu, R., et al.: On the number of linear regions of deep neural networks. In: NIPS (2014)

[22] Hanin, B., Rolnick, D.: Complexity of linear regions in deep networks. In: ICML (2019)

[23] Goodfellow, I., Bengio, Y., et al.: Deep Learning. MIT Press, Cambridge (2016)

[24] Jarrett, K., Kavukcuoglu, K., et al.: What is the best multi-stage architecture for object recognition? In: ICCV (2009)

[25] Szegedy, C., Liu, W., et al.: Going deeper with convolutions. In: CVPR (2015)

[26] Pan, S.J., Yang, Q.: A survey on transfer learning. In: TKDE (2010)

[27] Simonyan, K., Zisserman, A.: Very deep convolutional networks for large-scale image recognition. ArXiv (2015)

[28] Ren, J., Xu, L.: On vectorization of deep convolutional neural networks for vision tasks. In: AAAI (2015)

[29] Serra, T., Tjandraatmadja, C., et al.: Bounding and counting linear regions of deep neural networks. In: ICML (2018)

[30] Wang, S., Chang, C.H.: Fingerprinting deep neural networks - a DeepFool approach. In: ISCAS (2021)

[31] Li, Y., Zhang, Z., et al.: ModelDiff: testing-based DNN similarity comparison for model reuse detection. In: ISSTA (2021)

[32] Han, S., Pool, J., et al.: Learning both weights and connections for efficient neural networks. ArXiv (2015)

[33] Li, H., Kadav, A., et al.: Pruning filters for efficient ConvNets. ArXiv (2017)

[34] Juuti, M., Szyller, S., et al.: PRADA: protecting against DNN model stealing attacks. In: EuroS&P (2019)

[35] Gurobi linear programming optimizer. https://www.gurobi.com/, Accessed 01 Feb 2021

[36] Yang, J., Shi, R., et al.: MedMNIST classification decathlon: a lightweight AutoML benchmark for medical image analysis. ArXiv (2020)

[37] Whirl-Carrillo, M., McDonagh, E., et al.: Pharmacogenomics knowledge for personalized medicine. In: Clinical Pharmacology & Therapeutics (2012)

[38] Xiao, H., Rasul, K., et al.: Fashion-MNIST: a novel image dataset for benchmarking machine learning algorithms. ArXiv (2017)

[39] Moosavi-Dezfooli, S.M., Fawzi, A., et al.: DeepFool: a simple and accurate method to fool deep neural networks. In: CVPR (2016)

[40] Boenisch, F.: A survey on model watermarking neural networks. ArXiv (2020)

[41] Regazzoni, F., Palmieri, P., et al.: Protecting artificial intelligence IPs: a survey of watermarking and fingerprinting for machine learning. In: CAAI Transactions on Intelligence Technology (2021)

[42] Rouhani, B., Chen, H., et al.: DeepSigns: a generic watermarking framework for IP protection of deep learning models. ArXiv (2018)

[43] Xu, X., Li, Y., et al.: "Identity bracelets" for deep neural networks. IEEE Access (2020)

[44] Goodfellow, I., Pouget-Abadie, J., et al.: Generative adversarial nets. In: NeurIPS (2014)

[45] Truda, G., Marais, P.: Warfarin dose estimation on multiple datasets with automated hyperparameter optimisation and a novel software framework. ArXiv (2019)

[46] Arjovsky, M., Chintala, S., et al.: Wasserstein generative adversarial networks. In: ICML (2017)

Acknowledgement

We sincerely appreciate the shepherding of Kyu Hyung Lee. We would also like to thank the anonymous reviewers for their constructive comments and input to improve our paper. This work was supported in part by the National Natural Science Foundation of China (61972099, U1836213, U1836210, U1736208) and the Natural Science Foundation of Shanghai (19ZR1404800). Min Yang is a faculty member of the Shanghai Institute of Intelligent Electronics & Systems, the Shanghai Institute for Advanced Communication and Data Science, and the Engineering Research Center of CyberSecurity Auditing and Monitoring, Ministry of Education, China.


Appendices

A More Background on Deep Learning

\(\bullet \) Learning Tasks. In a general deep learning scenario, we denote a deep neural network (DNN) as \(F(\cdot ; \varTheta )\), a parametric model that maps a data input x in \(\mathcal {X} \subset \mathbb {R}^{d_{\text {in}}}\) to a prediction \(y = F(x; \varTheta )\) in \(\mathcal {Y} \subset \mathbb {R}^{d_\text {out}}\). The concrete choice of the prediction space \(\mathcal {Y}\) depends on the downstream task to which the DNN is applied. In this paper, we mainly evaluate our proposed fingerprinting algorithm on the following three popular learning tasks.

(1) Classification: In a K-class classification task, a DNN learns to classify a data input x (e.g., an image) into one of the K classes (e.g., based on the object it contains). Usually, the prediction space of a DNN for classification is the probability simplex \(\Delta _{K} := \{p \in \mathbb {R}^{K} : p_i \geq 0, \sum _{i=1}^{K} p_i = 1\}\), where each prediction \(y = (p_1, \ldots , p_K) \in \Delta _{K}\) is called a probability vector, with \(p_i\) giving the predicted probability that x belongs to the i-th class.
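One common way for a classifier to produce a point of the probability simplex is to apply softmax to K real-valued logits. The sketch below assumes that choice, which the text does not prescribe, and checks the two simplex constraints (non-negative entries summing to 1); the function names are illustrative.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    """Map K real-valued logits to a probability vector (p_1, ..., p_K)."""
    m = max(logits)  # subtract the max logit for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def on_simplex(p: Sequence[float], tol: float = 1e-9) -> bool:
    """Check p_i >= 0 for all i and sum_i p_i = 1, up to floating-point tolerance."""
    return all(pi >= 0 for pi in p) and abs(sum(p) - 1.0) <= tol


def predicted_class(p: Sequence[float]) -> int:
    # 0-based index of the class with the largest predicted probability
    return max(range(len(p)), key=lambda i: p[i])
```

For instance, `softmax([0.0, 0.0])` returns `[0.5, 0.5]`, and the largest logit always receives the largest probability, so `predicted_class(softmax([2.0, 1.0, 0.1]))` is 0.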

(2) Regression: In a regression task, a DNN learns to predict the corresponding \(d_\text {out}\)-dimensional value y (e.g., drug dosing) based on the \(d_\text {in}\)-dimensional input x (e.g., demographic information).

(3) Generative Modeling: In a generative modeling task, a DNN learns to model the distribution of a given dataset (e.g., hand-written digits) for the purpose of data generation (e.g., generative adversarial nets [44]). Once trained, the DNN takes random noise as input and outputs a prediction (e.g., a realistic hand-written digit) with the same shape as the real samples in the dataset. Although there are a few other typical learning tasks (e.g., ranking and information retrieval) in current deep learning practice, the above three tasks already cover a majority of the use cases of DNNs [23].

\(\bullet \) Formulation of Layer Structures. To keep the paper self-contained, we formalize two typical neural network layers below.

Definition A1 (Fully-Connected Layer). A fully-connected layer \(f_{\text {FC}}\) is composed of a weight matrix \(W_0 \in \mathbb {R}^{d_{1}\times {d_\text {in}}}\) and a bias vector \(b_0 \in \mathbb {R}^{d_{1}}\). Applying the fully-connected layer to an input \(x \in \mathbb {R}^{d_{\text {in}}}\) computes \(f_{\text {FC}}(x) := W_{0}x + b_0.\)
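Definition A1 is a single matrix-vector product plus a bias. The plain-Python sketch below (function names are illustrative, not from the paper) computes it row by row, with shape checks, and also includes the rectified linear unit, since the paper targets networks with ReLU activations.

```python
from typing import List, Sequence


def fully_connected(W0: Sequence[Sequence[float]], b0: Sequence[float],
                    x: Sequence[float]) -> List[float]:
    """Compute f_FC(x) = W0 x + b0 for W0 of shape (d1, d_in) and b0 of length d1."""
    if len(W0) != len(b0):
        raise ValueError("W0 must have d1 rows, matching len(b0)")
    out = []
    for row, b in zip(W0, b0):
        if len(row) != len(x):
            raise ValueError("each row of W0 must have d_in entries")
        # i-th output: inner product of the i-th row of W0 with x, plus the i-th bias
        out.append(sum(w * xi for w, xi in zip(row, x)) + b)
    return out


def relu(v: Sequence[float]) -> List[float]:
    """Element-wise rectified linear unit max(0, v_i)."""
    return [max(0.0, vi) for vi in v]
```

With `W0 = [[1, 2], [-1, 0], [0.5, 0.5]]`, `b0 = [0, 1, -1]` and `x = [3, 4]`, the layer outputs `[11, -2, 2.5]`, and applying `relu` zeroes the negative entry.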

Definition A2 (Convolutional Layer). In the simplest case (see Note 1), a convolutional layer \(f_{\text {Conv}}\) is composed of \(C_\text {out}\) filters \(W^c \in \mathbb {R}^{C_\text {in}\times K\times K}\) and a bias vector \(b \in \mathbb {R}^{C_\text {out}}\). For each output channel \(c \in \{1, \ldots , C_\text {out}\}\), applying the convolutional layer to an input \(x\in \mathbb {R}^{C_\text {in}\times H_\text {in}\times W_\text {in}}\) computes \( f_{\text {Conv}, c}(x) = \sum _{k=1}^{C_\text {in}}W^c_k * x^k + b_c,\) where \(W^c_k \in \mathbb {R}^{K\times K}\) is the k-th slice of the c-th filter, \(x^k\) is the k-th input channel, and \([W^c_k * x^k]_{ij} := \sum _{m=1}^{K}\sum _{n=1}^{K}[W^c_k]_{m, n}[x^{k}]_{i+m-1,j+n-1}\) is their cross-correlation, defined for \(1 \le i \le H_\text {in}-K+1\) and \(1 \le j \le W_\text {in}-K+1\).
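The convolutional layer of Definition A2 can be sketched in plain Python under the assumptions of Note 1 (stride 1, no padding, square \(K\times K\) kernel). The sketch uses 0-based indices, so output position (i, j) reads the \(K\times K\) window of every input channel whose top-left corner is (i, j); this is a cross-correlation, i.e., the kernel is not flipped. The function name `conv2d` and the nested-list tensor layout are illustrative assumptions.

```python
from typing import List, Sequence

Tensor3 = Sequence[Sequence[Sequence[float]]]  # indexed as [channel][row][col]


def conv2d(W: Sequence[Tensor3], b: Sequence[float],
           x: Tensor3) -> List[List[List[float]]]:
    """Stride-1, no-padding convolutional layer computed as a cross-correlation.

    W[c] has shape (C_in, K, K), b has length C_out, x has shape (C_in, H_in, W_in).
    The result has shape (C_out, H_in - K + 1, W_in - K + 1).
    """
    c_in = len(x)
    h_in, w_in = len(x[0]), len(x[0][0])
    k = len(W[0][0])  # W[0][0] is one K x K kernel slice
    h_out, w_out = h_in - k + 1, w_in - k + 1
    out = []
    for c, filt in enumerate(W):
        # Sum over input channels and kernel offsets, then add the channel bias b_c.
        channel = [[b[c] + sum(filt[ch][m][n] * x[ch][i + m][j + n]
                               for ch in range(c_in)
                               for m in range(k)
                               for n in range(k))
                    for j in range(w_out)]
                   for i in range(h_out)]
        out.append(channel)
    return out
```

A kernel with a single 1 in its top-left corner copies the top-left part of the input, which distinguishes cross-correlation from a flipped convolution: on the 3×3 input `[[1, 2, 3], [4, 5, 6], [7, 8, 9]]` it yields `[[1, 2], [4, 5]]` rather than `[[5, 6], [8, 9]]`.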

B More Evaluation Details and Results

B.1 Details of Scenarios

We describe the tasks, datasets and model architectures studied in our work.

(1) Skin Cancer Classification (abbrev. Skin). The first scenario covers the use of a deep CNN for skin cancer diagnosis. Following [36], we train a ResNet-18 [1] as the target model on DermaMNIST [36], a dataset of 10,005 multi-source dermatoscopic images of common pigmented skin lesions. Each input is originally of size \(3\times {28}\times {28}\) and is upsampled to \(3\times {224}\times {224}\) to fit the input shape of the standard ResNet-18 architecture implemented in torchvision. The task is 7-class classification.
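The text does not state which interpolation is used for the upsampling from 28 to 224 pixels per side (a factor of 8). As an assumption, the sketch below uses nearest-neighbor interpolation in plain Python; in a PyTorch pipeline one would more likely call `torch.nn.functional.interpolate` or `torchvision.transforms.Resize`, which support several interpolation modes. The function name `upsample_nearest` is hypothetical.

```python
from typing import List, Sequence


def upsample_nearest(img: Sequence[Sequence[Sequence[float]]],
                     out_h: int, out_w: int) -> List[List[List[float]]]:
    """Nearest-neighbor resize of a (C, H, W) image to (C, out_h, out_w).

    Output pixel (i, j) copies input pixel (i * H // out_h, j * W // out_w),
    so with H = W = 28 and out_h = out_w = 224 each input pixel becomes an 8 x 8 block.
    """
    c = len(img)
    h, w = len(img[0]), len(img[0][0])
    return [[[img[ch][i * h // out_h][j * w // out_w] for j in range(out_w)]
             for i in range(out_h)]
            for ch in range(c)]
```

Applied to a \(3\times 28\times 28\) DermaMNIST-sized array, `upsample_nearest(img, 224, 224)` returns a \(3\times 224\times 224\) array, matching the input shape expected by ResNet-18.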

(2) Warfarin Dose Prediction (abbrev. Warfarin). The second scenario covers the use of a fully-connected network (FCN) for warfarin dose prediction, a safety-critical regression task that predicts the proper individualised warfarin dose from the demographic and physiological records of a patient (e.g., weight, age and genetics). We use the International Warfarin Pharmacogenetics Consortium (IWPC) dataset [37], a public dataset composed of 31-dimensional features of 6256 patients that is widely used in research on automated warfarin dosing. Following [45], we use a three-layer fully-connected neural network with ReLU as the target model, with a hidden layer of 100 neurons. The target model learns to predict the proper warfarin dose, a positive real-valued scalar in (0, 300.0].
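Reading "three-layer" as input, hidden and output layers of widths (31-100-1), which is our assumption, the target model can be sketched in plain Python as follows. The uniform initialization, the seed and all function names are hypothetical; an untrained network like this one outputs arbitrary values, and nothing in the sketch restricts predictions to (0, 300.0].

```python
import random
from typing import List, Sequence, Tuple

Layer = Tuple[List[List[float]], List[float]]  # (weight matrix W, bias vector b)


def init_layer(d_out: int, d_in: int, rng: random.Random) -> Layer:
    """Hypothetical uniform initialization of one fully-connected layer."""
    bound = 1.0 / d_in ** 0.5
    W = [[rng.uniform(-bound, bound) for _ in range(d_in)] for _ in range(d_out)]
    b = [rng.uniform(-bound, bound) for _ in range(d_out)]
    return W, b


def affine(W: List[List[float]], b: List[float], x: Sequence[float]) -> List[float]:
    # W x + b, computed row by row
    return [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]


def warfarin_mlp(x: Sequence[float], layers: Sequence[Layer]) -> float:
    """Forward pass 31 -> 100 (ReLU) -> 1; returns the scalar dose prediction."""
    (W1, b1), (W2, b2) = layers
    h = [max(0.0, v) for v in affine(W1, b1, x)]
    return affine(W2, b2, h)[0]


def num_params(widths: Sequence[int]) -> int:
    # weights (d_out * d_in) plus biases (d_out) for each consecutive pair of widths
    return sum(d_out * d_in + d_out for d_in, d_out in zip(widths, widths[1:]))


rng = random.Random(0)
layers = [init_layer(100, 31, rng), init_layer(1, 100, rng)]
```

With these widths the network has \(31\cdot 100 + 100 + 100\cdot 1 + 1 = 3301\) parameters, so `num_params([31, 100, 1])` returns 3301.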

(3) Fashion Generation (abbrev. Fashion). The final scenario covers the use of FCNs for generative modeling. We choose Fashion-MNIST [38], which consists of \(28\times {28}\) images of 60000 articles of clothing. Following the paradigm of Wasserstein generative adversarial networks (WGAN [46]), we train a 5-layer FCN of architecture \((64-128-256-512-768)\) as the generator and a 4-layer FCN of architecture \((768-512-256-1)\) as the discriminator. We view the FCN-based generator as the target model, because a well-trained generator better represents the IP of the model owner: it can be used directly to generate realistic images without the aid of the discriminator.
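The width lists above determine the shape of every weight matrix. The sketch below assumes that each list includes the input layer, counts the parameters of both networks, and writes out the standard WGAN objectives from [46] on precomputed critic (discriminator) scores; it omits the weight clipping that [46] applies to the critic. Note that \(28\times 28 = 784\), whereas the listed generator output width is 768; the widths are kept exactly as stated. All names are illustrative.

```python
from typing import List, Sequence, Tuple


def layer_shapes(widths: Sequence[int]) -> List[Tuple[int, int]]:
    """(d_in, d_out) of each fully-connected layer implied by a width list."""
    return list(zip(widths[:-1], widths[1:]))


def num_params(widths: Sequence[int]) -> int:
    # weights d_out * d_in plus biases d_out, summed over all layers
    return sum(d_out * d_in + d_out for d_in, d_out in layer_shapes(widths))


def critic_loss(real_scores: Sequence[float], fake_scores: Sequence[float]) -> float:
    """WGAN critic loss to minimize: mean D(G(z)) - mean D(x)."""
    return sum(fake_scores) / len(fake_scores) - sum(real_scores) / len(real_scores)


def generator_loss(fake_scores: Sequence[float]) -> float:
    """WGAN generator loss to minimize: -mean D(G(z))."""
    return -sum(fake_scores) / len(fake_scores)


GENERATOR = (64, 128, 256, 512, 768)  # noise dimension 64 -> output width 768
CRITIC = (768, 512, 256, 1)           # sample -> unbounded scalar score (no sigmoid)
```

For example, `num_params(GENERATOR)` gives 566,912 and `num_params(CRITIC)` gives 525,313, so the target generator is the larger of the two networks.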


Copyright information

© 2021 Springer Nature Switzerland AG

About this paper

Cite this paper

Pan, X., Zhang, M., Lu, Y., Yang, M. (2021). TAFA: A Task-Agnostic Fingerprinting Algorithm for Neural Networks. In: Bertino, E., Shulman, H., Waidner, M. (eds) Computer Security – ESORICS 2021. ESORICS 2021. Lecture Notes in Computer Science, vol 12972. Springer, Cham. https://doi.org/10.1007/978-3-030-88418-5_26


DOI: https://doi.org/10.1007/978-3-030-88418-5_26


Publisher Name: Springer, Cham

Print ISBN: 978-3-030-88417-8

Online ISBN: 978-3-030-88418-5

eBook Packages: Computer Science, Computer Science (R0)