Abstract

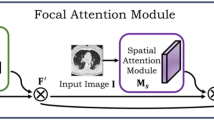

The coronavirus disease (COVID-19) pandemic has affected billions of lives around the world since its first outbreak in 2019. Computed tomography (CT) is a valuable tool for the clinical diagnosis of COVID-19, and deep learning has been used extensively to improve the analysis of CT images. However, owing to the limited publicly available COVID-19 imaging data and the random, variable appearance of infected areas, it is challenging for current segmentation methods to achieve satisfactory performance. In this paper, we propose a novel boundary-assisted and discriminative feature extraction network (BDFNet) to improve segmentation accuracy. We adopt the triplet attention (TA) module to extract discriminative image representations and the adaptive feature fusion (AFF) module to fuse texture and shape information. Besides the channel and spatial dimensions that previous models mainly exploit, the TA module also captures cross channel-spatial context. Moreover, the AFF module integrates fused hierarchical boundary information. Experiments on two publicly available COVID-19 datasets, COVID-19-CT-Seg and CC-CCII, show that BDFNet outperforms most cutting-edge segmentation algorithms on six widely used segmentation metrics.
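The triplet attention mechanism mentioned above comes from Misra et al. (arXiv:2010.03045, listed in the references). The NumPy sketch below is a minimal, untrained illustration of that general mechanism and is not BDFNet's implementation. It rests on several assumptions:

- It processes a single (C, H, W) feature map with no batch axis.
- It omits the batch normalization that the original module applies after each convolution.
- It takes the three 7×7 kernels as inputs instead of learning them.

The names `z_pool`, `attention_gate`, and `triplet_attention` are hypothetical.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def z_pool(t):
    # Stack max- and mean-pooling over axis 0: (D0, D1, D2) -> (2, D1, D2)
    return np.stack([t.max(axis=0), t.mean(axis=0)])

def conv2d_same(z, w):
    # Cross-correlate a (2, D1, D2) input with a (2, k, k) kernel using zero "same" padding -> (D1, D2)
    k = w.shape[-1]
    p = k // 2
    d1, d2 = z.shape[1:]
    zp = np.pad(z, ((0, 0), (p, p), (p, p)))
    out = np.zeros((d1, d2))
    for i in range(k):
        for j in range(k):
            out += np.einsum("c,chw->hw", w[:, i, j], zp[:, i:i + d1, j:j + d2])
    return out

def attention_gate(t, w):
    # Z-pool over axis 0, convolve, squash to (0, 1), then rescale t (batch norm omitted)
    s = sigmoid(conv2d_same(z_pool(t), w))
    return t * s[None, :, :]

def triplet_attention(x, kernels):
    # x: (C, H, W); kernels: three (2, k, k) arrays, one per branch
    # Branch 1: rotate so H is pooled away; attention over (C, W)
    b1 = attention_gate(x.transpose(1, 0, 2), kernels[0]).transpose(1, 0, 2)
    # Branch 2: rotate so W is pooled away; attention over (H, C)
    b2 = attention_gate(x.transpose(2, 1, 0), kernels[1]).transpose(2, 1, 0)
    # Branch 3: pool over C; plain spatial attention over (H, W)
    b3 = attention_gate(x, kernels[2])
    # Average the three rescaled tensors
    return (b1 + b2 + b3) / 3.0

rng = np.random.default_rng(0)
x = rng.normal(size=(8, 16, 16))
kernels = [rng.normal(scale=0.1, size=(2, 7, 7)) for _ in range(3)]
y = triplet_attention(x, kernels)
print(y.shape)  # (8, 16, 16)
```

Each transpose is its own inverse, so every branch returns a tensor in the original (C, H, W) layout before the three branches are averaged. The first two branches are the ones that model cross channel-spatial interactions.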

References

Wang, C., Horby, P.W., Hayden, F.G., Gao, G.F.: A novel coronavirus outbreak of global health concern. Lancet 395, 470–473 (2020). https://doi.org/10.1016/S0140-6736(20)30185-9

Zhao, W., Zhong, Z., Xie, X., Yu, Q., Liu, J.: Relation between chest CT findings and clinical conditions of coronavirus disease (COVID-19) pneumonia: a multicenter study. AJR Am. J. Roentgenol. 214, 1072–1077 (2020). https://doi.org/10.2214/ajr.20.22976

Li, C., et al.: Asymptomatic novel coronavirus pneumonia patient outside Wuhan: the value of CT images in the course of the disease. Clin. Imaging 63, 7–9 (2020). https://doi.org/10.1016/j.clinimag.2020.02.008

Chen, Z., Wang, R.: Application of CT in the diagnosis and differential diagnosis of novel coronavirus pneumonia. CT Theor. Appl. 29(3), 273–279 (2020). https://doi.org/10.15953/j.1004-4140.2020.29.03.02

Fan, D., et al.: Inf-Net: automatic COVID-19 lung infection segmentation from CT images. IEEE Trans. Med. Imaging 39, 2626–2637 (2020). https://doi.org/10.1109/tmi.2020.2996645

Wang, G., et al.: A noise-robust framework for automatic segmentation of COVID-19 pneumonia lesions from CT images. IEEE Trans. Med. Imaging 39, 2653–2663 (2020). https://doi.org/10.1109/tmi.2020.3000314

Qiu, Y., Liu, Y., Xu, J.: MiniSeg: an extremely minimum network for efficient COVID-19 segmentation. arXiv preprint arXiv:2004.09750 (2020)

He, K., Zhang, X., Ren, S., Sun, J.: Identity mappings in deep residual networks. In: Leibe, B., Matas, J., Sebe, N., Welling, M. (eds.) ECCV 2016. LNCS, vol. 9908, pp. 630–645. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-46493-0_38

Hariharan, B., Arbelaez, P., Girshick, R., et al.: Hypercolumns for object segmentation and fine-grained localization. In: 2015 IEEE Conference on Computer Vision and Pattern Recognition, pp. 447–456. IEEE Press, Boston (2015). https://doi.org/10.1109/CVPR.2015.7298642

Mostajabi, M., Yadollahpour, P., Shakhnarovich, G.: Feedforward semantic segmentation with zoom-out features. In: 2015 IEEE Conference on Computer Vision and Pattern Recognition, pp. 3376–3385. IEEE Press, Boston (2015). https://doi.org/10.1109/CVPR.2015.7298959

Wu, Z., Su, L., Huang, Q.: Cascaded partial decoder for fast and accurate salient object detection. In: 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 3902–3911. IEEE Press, Long Beach (2019). https://doi.org/10.1109/CVPR.2019.00403

Misra, D., Nalamada, T., Arasanipalai, A.U., et al.: Rotate to attend: convolutional triplet attention module. arXiv preprint arXiv:2010.03045 (2020)

Fu, J., et al.: Dual attention network for scene segmentation. In: 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 3141–3149. IEEE Press, Long Beach (2019). https://doi.org/10.1109/CVPR.2019.00326

Zhang, K., Liu, X., et al.: Clinically applicable AI system for accurate diagnosis, quantitative measurements, and prognosis of COVID-19 pneumonia using computed tomography. Cell 181, 1423–1433 (2020). https://doi.org/10.1016/j.cell.2020.04.045

Fan, D., Cheng, M., Liu, Y., Li, T., Borji, A.: Structure-measure: a new way to evaluate foreground maps. In: 2017 IEEE International Conference on Computer Vision, pp. 4558–4567. IEEE CS Press, Venice, Italy (2017). https://doi.org/10.1109/ICCV.2017.487

Zhang, J., et al.: UC-Net: uncertainty inspired RGB-D saliency detection via conditional variational autoencoders. In: 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 8579–8588. IEEE Press, Seattle (2020). https://doi.org/10.1109/CVPR42600.2020.00861

Fan, D.-P., et al.: PraNet: parallel reverse attention network for polyp segmentation. In: Martel, A.L., et al. (eds.) MICCAI 2020. LNCS, vol. 12266, pp. 263–273. Springer, Cham (2020). https://doi.org/10.1007/978-3-030-59725-2_26

Badrinarayanan, V., Kendall, A., Cipolla, R.: SegNet: a deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 39, 2481–2495 (2017). https://doi.org/10.1109/TPAMI.2016.2644615

Xue, Y., Xu, T., Zhang, H., Long, L.R., Huang, X.: SegAN: adversarial network with multi-scale L1 loss for medical image segmentation. Neuroinformatics 16(3–4), 383–392 (2018). https://doi.org/10.1007/s12021-018-9377-x

Selvaraju, R.R., Cogswell, M., Das, A., et al.: Grad-CAM: visual explanations from deep networks via gradient-based localization. In: 2017 IEEE International Conference on Computer Vision, pp. 618–626. IEEE CS Press, Venice, Italy (2017). https://doi.org/10.1109/ICCV.2017.74

Appendix

In this section, we provide additional experiments and visualizations for BDFNet. Part A shows heat-map visualizations of the TA and AFF modules. Part B compares the robustness of the TA module with that of other attention mechanisms. Part C visually compares the segmentation results of BDFNet with those of other advanced segmentation algorithms.


A. The Visualization Results on TA Module and AFF Module

In Sect. 3.2, the ablation experiments on the TA and AFF modules demonstrate the effectiveness of both modules. To validate their effectiveness more intuitively, we randomly select samples from the COVID-19-CT-Seg dataset and use Grad-CAM [20] to visualize the gradients of the segmentation prediction as heat maps. Bright areas in these heat maps indicate the regions that contribute to the segmentation.
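Grad-CAM [20] proceeds in three steps:

1. Weight each channel of a chosen convolutional layer by the spatial average of the gradient of a target score with respect to that channel.
2. Sum the weighted activation maps.
3. Keep only the positive evidence.

The NumPy sketch below shows that core computation under stated assumptions. The activations and gradients of one layer for one image are assumed to come from an autodiff framework. For a segmentation network, the target score is assumed to be something like the summed infection logits, because the appendix does not specify it. Resizing the map to the CT slice size is left out. The function name `grad_cam` is hypothetical.

```python
import numpy as np

def grad_cam(activations, gradients):
    """Heat map from one layer's activations and the gradients of a target score.

    activations, gradients: (K, H, W) arrays for a single image (assumed precomputed).
    """
    # Channel weights: global-average-pool the gradients over the spatial axes
    weights = gradients.mean(axis=(1, 2))  # (K,)
    # Weighted sum of the activation maps, keeping only positive evidence (ReLU)
    cam = np.maximum(np.tensordot(weights, activations, axes=1), 0.0)  # (H, W)
    # Rescale to [0, 1] for display; an all-zero map is returned unchanged
    cam -= cam.min()
    peak = cam.max()
    return cam / peak if peak > 0 else cam

# Toy input: channel 0 has a positive gradient, channel 1 a negative one
acts = np.array([[[1.0, 0.0], [0.0, 0.0]],
                 [[0.0, 0.0], [0.0, 1.0]]])
grads = np.stack([np.ones((2, 2)), -np.ones((2, 2))])
cam = grad_cam(acts, grads)
```

In the toy input, only the pixel supported by the positively weighted channel stays bright. The bright areas of a real heat map are read in the same way.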

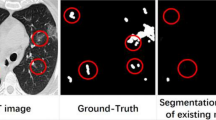

As shown in Fig. 5, when compared against the ground truth, the network with the TA module captures COVID-19 infection regions in CT images more accurately and comprehensively. In some cases, it correctly identifies infected areas that the baseline network misidentified.

Figure 6 shows the shape information captured by the network with the AFF module. The captured shape information largely traces the outline of the infection area, which indicates that the AFF module extracts richer and more precise shape information from infection regions.
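One way to check whether a shape map follows the lesion outline is to compare it against the boundary of the ground-truth mask. The sketch below extracts a one-pixel boundary by subtracting the mask's 3×3 morphological erosion from the mask. This is an assumption made for illustration: the appendix does not say how, or whether, BDFNet derives boundary targets. `erode3x3` and `mask_boundary` are hypothetical names.

```python
import numpy as np

def erode3x3(mask):
    # A pixel survives erosion only if its whole 3x3 neighbourhood is foreground.
    # Pixels outside the image are treated as background.
    h, w = mask.shape
    padded = np.pad(mask, 1, constant_values=False)
    out = np.ones((h, w), dtype=bool)
    for di in range(3):
        for dj in range(3):
            out &= padded[di:di + h, dj:dj + w]
    return out

def mask_boundary(mask):
    # Boundary = foreground pixels that do not survive erosion
    mask = mask.astype(bool)
    return mask & ~erode3x3(mask)

m = np.zeros((5, 5), dtype=bool)
m[1:4, 1:4] = True  # a 3x3 toy "lesion"
b = mask_boundary(m)
```

For the 3×3 toy lesion, only the centre pixel survives erosion, so the boundary is the surrounding ring of eight pixels.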


B. Comparison of the Robustness of TA, PA, CA, and IA

The internal ablation of the TA module in Table 4 (Sect. 3.2) shows that although the TA module achieves the best performance on multiple evaluation metrics, its lead over the alternatives is small. To further verify the advantage of the TA module, we performed a statistical analysis of the experimental results on the COVID-19-CT-Seg dataset. The results are shown in Fig. 7.

Fig. 7. Distribution of the results for each evaluation metric in the internal ablation of the TA module. The horizontal axis lists the networks containing the different attention modules (TA, PA, CA, and IA). The vertical axis shows the scores on the respective evaluation metrics.
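The appendix does not spell out the statistical analysis behind Fig. 7. A minimal way to quantify how "stable" a module is would be to summarize its per-image scores with four statistics:

- the median;
- the interquartile range (IQR);
- the standard deviation;
- the number of Tukey outliers, i.e. points more than 1.5 × IQR beyond the quartiles.

These are the quantities a box plot displays. The sketch below computes such a summary. It assumes the per-image scores for one module and one metric are available as a list of numbers. `summarize` is a hypothetical helper, not the authors' code.

```python
import statistics

def summarize(scores):
    """Spread of per-image scores: median, IQR, population std, Tukey outlier count."""
    # Quartiles with linear interpolation between order statistics
    q1, med, q3 = statistics.quantiles(scores, n=4, method="inclusive")
    iqr = q3 - q1
    lo, hi = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    return {
        "median": med,
        "iqr": iqr,
        "std": statistics.pstdev(scores),
        "outliers": sum(1 for s in scores if s < lo or s > hi),
    }
```

A smaller IQR and fewer outliers under the same metric would correspond to the tighter, less extreme-value-prone distribution described in the text.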

Overall, the TA module achieves the best performance in segmenting COVID-19 infection regions, especially on \(S_{\alpha}\), \(E_{\phi}\), and MAE. Figure 7 shows the distribution of the segmentation scores of the four attention modules under different evaluation metrics. Under the same metric, the scores obtained with the TA module are more tightly distributed. This indicates that the TA module segments different test images more robustly and is less susceptible to extreme values.
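Two of the metrics named above can be written compactly.

- MAE is the mean absolute difference between the prediction and the ground truth.
- \(E_{\phi}\) is the enhanced-alignment measure. Its common formulation does three things:
  - it centres both maps by their means;
  - it computes an alignment term \(\xi = 2\phi_{GT}\phi_{FM} / (\phi_{GT}^2 + \phi_{FM}^2)\);
  - it averages \((1+\xi)^2/4\) over all pixels.
  
  Ground-truth maps that are entirely background or entirely foreground are handled as special cases.

The NumPy sketch below follows that formulation for a binary (already thresholded) prediction. This is an assumption, since evaluation scripts differ in how they threshold. \(S_{\alpha}\) is omitted because its object- and region-aware terms need considerably more code. `mae` and `e_measure` are hypothetical names.

```python
import numpy as np

EPS = 1e-8

def mae(pred, gt):
    # Mean absolute error between prediction and ground truth, both in [0, 1]
    return float(np.abs(pred.astype(float) - gt.astype(float)).mean())

def e_measure(pred, gt):
    # Enhanced-alignment measure for a binary prediction (assumed already thresholded)
    pred = pred.astype(float)
    gt = gt.astype(float)
    if gt.sum() == 0:            # empty ground truth: reward predicted background
        enhanced = 1.0 - pred
    elif gt.sum() == gt.size:    # all-foreground ground truth: reward predicted foreground
        enhanced = pred
    else:
        fm = pred - pred.mean()  # bias (mean-centred) maps
        g = gt - gt.mean()
        align = 2.0 * g * fm / (g * g + fm * fm + EPS)
        enhanced = (align + 1.0) ** 2 / 4.0
    return float(enhanced.mean())

gt = np.zeros((4, 4))
gt[:2, :2] = 1  # toy ground truth
```

With this toy ground truth, a perfect prediction scores \(E_{\phi}\approx 1\), an inverted prediction scores \(E_{\phi}\approx 0\), and an all-background prediction has an MAE of 0.25.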


C. Visual Comparison of Segmentation Results

In Sect. 3.3, Fig. 4 and Fig. 5 show that no single network leads consistently on all evaluation metrics. We therefore compare the segmentation results visually to assess the strengths and weaknesses of each network more intuitively.

Figure 8 compares the COVID-19 infection segmentation results of different networks on the COVID-19-CT-Seg dataset. BDFNet yields segmentation results with more precise boundaries, especially in subtle infected areas. From a clinical point of view, accurately delineating the boundaries of lesion areas is very important. It helps doctors determine the location and extent of a patient's infection and supports diagnosis.
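The appendix judges boundary precision visually. To put a number on it, one option is a boundary F1 score. It extracts the one-pixel boundaries of the prediction and the ground truth, then counts a boundary pixel as matched if it lies within a tolerance of `tol` pixels (Chebyshev distance) of the other boundary. The NumPy sketch below is such a measure. It is an assumption for illustration and is not one of the six metrics the paper reports. `neighbourhood`, `boundary`, `dilate`, and `boundary_f1` are hypothetical names.

```python
import numpy as np

def neighbourhood(mask, combine):
    # Combine each pixel's 3x3 neighbourhood with `combine`:
    # np.logical_and gives erosion, np.logical_or gives dilation.
    # Pixels outside the image count as background.
    h, w = mask.shape
    padded = np.pad(mask, 1, constant_values=False)
    out = padded[1:h + 1, 1:w + 1].copy()
    for di in range(3):
        for dj in range(3):
            out = combine(out, padded[di:di + h, dj:dj + w])
    return out

def boundary(mask):
    # One-pixel boundary: foreground minus its 3x3 erosion
    mask = mask.astype(bool)
    return mask & ~neighbourhood(mask, np.logical_and)

def dilate(mask, r):
    # r rounds of 3x3 dilation = all pixels within Chebyshev distance r
    for _ in range(r):
        mask = neighbourhood(mask, np.logical_or)
    return mask

def boundary_f1(pred, gt, tol=1):
    bp, bg = boundary(pred), boundary(gt)
    if not bp.any() and not bg.any():
        return 1.0
    if not bp.any() or not bg.any():
        return 0.0
    precision = (bp & dilate(bg, tol)).sum() / bp.sum()  # predicted edge near true edge
    recall = (bg & dilate(bp, tol)).sum() / bg.sum()     # true edge near predicted edge
    if precision + recall == 0:
        return 0.0
    return float(2 * precision * recall / (precision + recall))

gt = np.zeros((7, 7), dtype=bool)
gt[1:4, 1:4] = True
pred = np.zeros((7, 7), dtype=bool)
pred[1:4, 2:5] = True  # same square shifted one pixel right
```

With `tol=1`, a one-pixel shift is fully tolerated. With `tol=0`, half of the boundary pixels match, so the score drops to 0.5. The tolerance therefore controls how strictly "precise boundaries" is interpreted.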


Copyright information

© 2021 Springer Nature Switzerland AG

About this paper

Cite this paper

Ding, H., Niu, Q., Nie, Y., Shang, Y., Chen, N., Liu, R. (2021). BDFNet: Boundary-Assisted and Discriminative Feature Extraction Network for COVID-19 Lung Infection Segmentation. In: Magnenat-Thalmann, N., et al. Advances in Computer Graphics. CGI 2021. Lecture Notes in Computer Science, vol 13002. Springer, Cham. https://doi.org/10.1007/978-3-030-89029-2_27


DOI: https://doi.org/10.1007/978-3-030-89029-2_27


Publisher Name: Springer, Cham

Print ISBN: 978-3-030-89028-5

Online ISBN: 978-3-030-89029-2

eBook Packages: Computer Science, Computer Science (R0)