Abstract

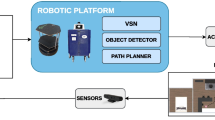

The research field of Embodied AI has witnessed substantial progress in visual navigation and exploration thanks to powerful simulation platforms and the availability of photorealistic 3D data of indoor environments. These two factors have opened the door to a new generation of intelligent agents capable of achieving nearly perfect PointGoal Navigation. However, such architectures are commonly trained with millions, if not billions, of frames and tested only in simulation. Alongside great enthusiasm, these results raise a question: how many researchers will effectively benefit from these advances? In this work, we detail how to transfer the knowledge acquired in simulation to the real world. To that end, we describe the architectural discrepancies that damage the Sim2Real adaptation ability of models trained on the Habitat simulator and propose a novel solution tailored to deployment in real-world scenarios. We then deploy our models on a LoCoBot, a Low-Cost Robot equipped with a single Intel RealSense camera. Unlike previous work, our testing scene is unavailable to the agent in simulation. The environment is also inaccessible to the agent beforehand, so it cannot rely on scene-specific semantic priors. In this way, we reproduce a setting in which a research group (potentially from another field) needs to employ the agent's visual navigation capabilities as-a-Service. Our experiments indicate that it is possible to achieve satisfactory results when deploying the obtained model in the real world. Our code and models are available at https://github.com/aimagelab/LoCoNav.

Acknowledgment

This work has been supported by “Fondazione di Modena” under the project “AI for Digital Humanities” and by the national project “IDEHA” (PON ARS01_00421), cofunded by the Italian Ministry of University and Research.

Copyright information

© 2021 Springer Nature Switzerland AG

About this paper

Cite this paper

Bigazzi, R., Landi, F., Cornia, M., Cascianelli, S., Baraldi, L., Cucchiara, R. (2021). Out of the Box: Embodied Navigation in the Real World. In: Tsapatsoulis, N., Panayides, A., Theocharides, T., Lanitis, A., Pattichis, C., Vento, M. (eds) Computer Analysis of Images and Patterns. CAIP 2021. Lecture Notes in Computer Science, vol 13052. Springer, Cham. https://doi.org/10.1007/978-3-030-89128-2_5

DOI: https://doi.org/10.1007/978-3-030-89128-2_5

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-89127-5

Online ISBN: 978-3-030-89128-2

eBook Packages: Computer Science, Computer Science (R0)