Abstract

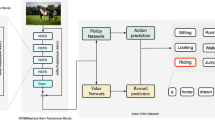

Attention-based methods lead the field of image captioning, especially models that combine global and local attention. However, few existing models integrate relationship information between the regions of an image. In this paper, such relationship features are embedded into a fused attention mechanism to explore the internal visual and semantic relations between different object regions. In addition, to alleviate the exposure bias problem and make training more efficient, we combine a Generative Adversarial Network with Reinforcement Learning and use greedy decoding to generate a dynamic baseline reward for self-critical training. Finally, experiments on the MSCOCO dataset show that the model generates more accurate and vivid captions and outperforms previous advanced models on multiple prevailing metrics.
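The self-critical step mentioned in the abstract can be illustrated with a minimal sketch. It assumes the standard self-critical formulation, in which the reward of the greedily decoded caption serves as the baseline for a sampled caption. The names `self_critical_loss` and `toy_reward` are hypothetical, and the word-overlap reward is only a stand-in: the abstract does not specify how the adversarial (discriminator) signal and the evaluation metrics are combined into the actual reward.

```python
import math
from typing import Sequence


def self_critical_loss(
    sampled_log_probs: Sequence[float],
    sampled_reward: float,
    greedy_reward: float,
) -> float:
    """Policy-gradient loss for one caption with a greedy-decoding baseline.

    The advantage is positive when the sampled caption scores higher than
    the greedy caption, so minimizing the loss raises its log-probability.
    """
    advantage = sampled_reward - greedy_reward
    return -advantage * sum(sampled_log_probs)


def toy_reward(caption: Sequence[str], reference: Sequence[str]) -> float:
    """Hypothetical reward: fraction of distinct reference words in the caption."""
    ref_words = set(reference)
    return len(ref_words & set(caption)) / len(ref_words)


reference = ["a", "dog", "chases", "a", "ball"]
sampled = ["a", "dog", "chases", "a", "ball"]   # caption drawn by sampling
greedy = ["a", "dog", "runs"]                   # caption from greedy decoding

# Assumed per-word probabilities of the sampled caption under the model.
sampled_log_probs = [math.log(0.5)] * len(sampled)

loss = self_critical_loss(
    sampled_log_probs,
    toy_reward(sampled, reference),
    toy_reward(greedy, reference),
)
```

Because the baseline is recomputed from the model's own greedy output for every image, it moves with the model during training, which is what makes it "dynamic". In an actual training loop the log-probabilities would be differentiable tensors rather than floats.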

Acknowledgement

This work is supported by the National Natural Science Foundation of China (Nos. 61966004, 61866004, 61762078), the Guangxi Natural Science Foundation (Nos. 2019GXNSFDA245018, 2018GXNSFDA281009), the Innovation Project of Guangxi Graduate Education (No. XYCBZ2021002), the Guangxi “Bagui Scholar” Teams for Innovation and Research Project, the Guangxi Talent Highland Project of Big Data Intelligence and Application, and the Guangxi Collaborative Innovation Center of Multi-source Information Integration and Intelligent Processing.

Copyright information

© 2021 Springer Nature Switzerland AG

About this paper

Cite this paper

Chen, T., Li, Z., Zhang, C., Ma, H. (2021). Adversarial Training for Image Captioning Incorporating Relation Attention. In: Pham, D.N., Theeramunkong, T., Governatori, G., Liu, F. (eds) PRICAI 2021: Trends in Artificial Intelligence. PRICAI 2021. Lecture Notes in Computer Science, vol 13031. Springer, Cham. https://doi.org/10.1007/978-3-030-89188-6_15

DOI: https://doi.org/10.1007/978-3-030-89188-6_15

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-89187-9

Online ISBN: 978-3-030-89188-6

eBook Packages: Computer Science, Computer Science (R0)