Abstract

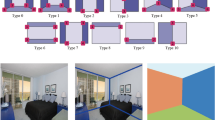

As a fundamental part of indoor scene understanding, indoor room layout estimation has recently attracted much research attention. The task is to predict the structure of a room from a single image. In this article, we show that this task can be solved well even without a sophisticated post-processing program, by adapting Feature Pyramid Networks (FPN) to the problem. In addition, we devise an optimization step that keeps the order of key points unchanged; this step is essential for improving the model's performance but has been overlooked in previous work. Our method performs well on the LSUN benchmark in both processing efficiency and accuracy. Compared with the state-of-the-art end-to-end method, our method is more than twice as fast (32 ms vs. 86 ms), with \(0.71\%\) lower key point error and \(0.2\%\) higher pixel error. Moreover, the advanced two-step method outperforms ours by only \(0.02\%\) in key point error. This combination of efficiency and accuracy makes our method a good choice for real-time room layout estimation tasks.
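The results above are reported in terms of LSUN's key point error and pixel error. As a minimal sketch, assuming the commonly used LSUN definitions (key point error: mean Euclidean distance between corresponding predicted and ground-truth key points, normalized by the image diagonal; pixel error: fraction of pixels assigned the wrong surface label), the two metrics can be computed as follows. This is not the official LSUN evaluation code: the function names are hypothetical, and `pixel_error` assumes the predicted and ground-truth label maps already share label IDs.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def keypoint_error(pred: Sequence[Point], gt: Sequence[Point],
                   width: float, height: float) -> float:
    """Mean Euclidean distance between paired key points, normalized by the
    image diagonal. pred[i] is always compared with gt[i], so the result
    depends on the order in which key points are listed."""
    if not gt or len(pred) != len(gt):
        raise ValueError("pred and gt must be non-empty and the same length")
    diagonal = math.hypot(width, height)
    total = sum(math.hypot(px - gx, py - gy)
                for (px, py), (gx, gy) in zip(pred, gt))
    return total / (len(gt) * diagonal)


def pixel_error(pred_labels: Sequence[Sequence[int]],
                gt_labels: Sequence[Sequence[int]]) -> float:
    """Fraction of pixels whose predicted surface label differs from the
    ground truth. Assumes both maps share the same label IDs, i.e. no
    label-matching step is performed (a simplifying assumption)."""
    if len(pred_labels) != len(gt_labels):
        raise ValueError("label maps must have the same height")
    total = wrong = 0
    for pred_row, gt_row in zip(pred_labels, gt_labels):
        if len(pred_row) != len(gt_row):
            raise ValueError("label maps must have the same width")
        total += len(gt_row)
        wrong += sum(1 for p, g in zip(pred_row, gt_row) if p != g)
    if total == 0:
        raise ValueError("label maps must not be empty")
    return wrong / total


if __name__ == "__main__":
    # Toy 400x300 image (diagonal = 500 px) with two ground-truth key points.
    gt = [(100.0, 50.0), (300.0, 60.0)]
    pred = [(102.0, 50.0), (298.0, 60.0)]  # each point is off by 2 px
    print(keypoint_error(pred, gt, 400, 300))        # 4 / (2 * 500) = 0.004
    print(keypoint_error(pred[::-1], gt, 400, 300))  # same points, swapped order
    print(pixel_error([[0, 0], [1, 2]], [[0, 1], [1, 2]]))  # 1 of 4 pixels wrong
```

Because points are paired by index, listing the same predictions in swapped order raises the error in this toy case from 0.004 to roughly 0.4. This illustrates one plausible reason why keeping the key point order unchanged matters for an index-paired metric.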

References

Badrinarayanan, V., Kendall, A., Cipolla, R.: SegNet: a deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 39(12), 2481–2495 (2017)

Dasgupta, S., Fang, K., Chen, K., Savarese, S.: DeLay: robust spatial layout estimation for cluttered indoor scenes. In: 2016 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2016, pp. 616–624 (2016)

Hedau, V., Hoiem, D., Forsyth, D.A.: Recovering the spatial layout of cluttered rooms. In: IEEE 12th International Conference on Computer Vision, ICCV 2009, pp. 1849–1856 (2009)

Hedau, V., Hoiem, D., Forsyth, D.A.: Recovering free space of indoor scenes from a single image. In: 2012 IEEE Conference on Computer Vision and Pattern Recognition, pp. 2807–2814 (2012)

Hirzer, M., Roth, P.M., Lepetit, V.: Smart hypothesis generation for efficient and robust room layout estimation. In: IEEE Winter Conference on Applications of Computer Vision, WACV 2020, pp. 2901–2909 (2020)

Kingma, D.P., Ba, J.: Adam: a method for stochastic optimization. In: Bengio, Y., LeCun, Y. (eds.) Proceedings of 3rd International Conference on Learning Representations, ICLR 2015 (2015)

Kirillov, A., Girshick, R.B., He, K., Dollár, P.: Panoptic feature pyramid networks. In: IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2019, pp. 6399–6408 (2019)

Kruzhilov, I., Romanov, M., Babichev, D., Konushin, A.: Double refinement network for room layout estimation. In: Palaiahnakote, S., Sanniti di Baja, G., Wang, L., Yan, W.Q. (eds.) ACPR 2019. LNCS, vol. 12046, pp. 557–568. Springer, Cham (2020). https://doi.org/10.1007/978-3-030-41404-7_39

Lee, C., Badrinarayanan, V., Malisiewicz, T., Rabinovich, A.: RoomNet: end-to-end room layout estimation. In: IEEE International Conference on Computer Vision, ICCV 2017, pp. 4875–4884 (2017)

Lee, D.C., Gupta, A., Hebert, M., Kanade, T.: Estimating spatial layout of rooms using volumetric reasoning about objects and surfaces. In: Proceedings of the 23rd International Conference on Neural Information Processing Systems, vol. 1, pp. 1288–1296 (2010)

Lin, T., Dollár, P., Girshick, R.B., He, K., Hariharan, B., Belongie, S.J.: Feature pyramid networks for object detection. In: 2017 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2017, pp. 936–944 (2017)

Lin, T., Goyal, P., Girshick, R.B., He, K., Dollár, P.: Focal loss for dense object detection. In: IEEE International Conference on Computer Vision, ICCV 2017, pp. 2999–3007 (2017)

Mallya, A., Lazebnik, S.: Learning informative edge maps for indoor scene layout prediction. In: 2015 IEEE International Conference on Computer Vision, ICCV 2015, pp. 936–944 (2015)

Paszke, A., et al.: PyTorch: an imperative style, high-performance deep learning library. In: Advances in Neural Information Processing Systems 32: Annual Conference on Neural Information Processing Systems 2019, NeurIPS 2019, pp. 8024–8035 (2019)

Ramalingam, S., Pillai, J.K., Jain, A., Taguchi, Y.: Manhattan junction catalogue for spatial reasoning of indoor scenes. In: 2013 IEEE Conference on Computer Vision and Pattern Recognition, pp. 3065–3072 (2013)

Ren, Y., Li, S., Chen, C., Kuo, C.-C.J.: A coarse-to-fine indoor layout estimation (CFILE) method. In: Lai, S.-H., Lepetit, V., Nishino, K., Sato, Y. (eds.) ACCV 2016. LNCS, vol. 10115, pp. 36–51. Springer, Cham (2017). https://doi.org/10.1007/978-3-319-54193-8_3

Russell, B.C., Torralba, A., Murphy, K.P., Freeman, W.T.: LabelMe: a database and web-based tool for image annotation. Int. J. Comput. Vis. 77(1–3), 157–173 (2008)

Srivastava, N., Hinton, G.E., Krizhevsky, A., Sutskever, I., Salakhutdinov, R.: Dropout: a simple way to prevent neural networks from overfitting. J. Mach. Learn. Res. 15(1), 1929–1958 (2014)

Xiao, J., Hays, J., Ehinger, K.A., Oliva, A., Torralba, A.: SUN database: large-scale scene recognition from abbey to zoo. In: The Twenty-Third IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2010, pp. 3485–3492 (2010)

Xie, S., Girshick, R.B., Dollár, P., Tu, Z., He, K.: Aggregated residual transformations for deep neural networks. In: 2017 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2017, pp. 5987–5995 (2017)

Zhang, J., Kan, C., Schwing, A.G., Urtasun, R.: Estimating the 3D layout of indoor scenes and its clutter from depth sensors. In: IEEE International Conference on Computer Vision, ICCV 2013, pp. 1273–1280 (2013)

Zhang, W., Zhang, W., Gu, J.: Edge-semantic learning strategy for layout estimation in indoor environment. CoRR abs/1901.00621 (2019). arXiv:1901.00621

Zhang, Y., Yu, F., Song, S., Xu, P., Seff, A., Xiao, J.: Large-scale scene understanding challenge: room layout estimation. In: CVPR Workshop (2015)

Zhao, H., Lu, M., Yao, A., Guo, Y., Chen, Y., Zhang, L.: Physics inspired optimization on semantic transfer features: an alternative method for room layout estimation. In: 2017 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2017, pp. 870–878 (2017)

Zou, C., Colburn, A., Shan, Q., Hoiem, D.: LayoutNet: reconstructing the 3D room layout from a single RGB image. In: 2018 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2018, pp. 2051–2059 (2018)

Acknowledgments

The authors would like to thank the data providers of [23] for the testing data sets. This work was partially supported by the Natural Science Foundation of China (No. 61802344), the Ningbo Science and Technology Special Project (No. 2021Z019), the Hebei “One Hundred Plan” Project (No. E2012100006) and the National Talent Program (No. G20200218015).

Copyright information

© 2021 Springer Nature Switzerland AG

About this paper

Cite this paper

Wang, A., Wen, S., Gao, Y., Li, Q., Deng, K., Pang, C. (2021). An Efficient Method for Indoor Layout Estimation with FPN. In: Zhang, W., Zou, L., Maamar, Z., Chen, L. (eds) Web Information Systems Engineering – WISE 2021. WISE 2021. Lecture Notes in Computer Science, vol 13081. Springer, Cham. https://doi.org/10.1007/978-3-030-91560-5_7

DOI: https://doi.org/10.1007/978-3-030-91560-5_7

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-91559-9

Online ISBN: 978-3-030-91560-5

eBook Packages: Computer Science, Computer Science (R0)