Abstract

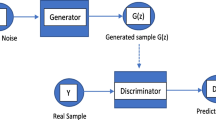

Electroencephalography (EEG) is one of the most promising modalities for Brain-Computer Interfaces (BCIs) because of its high temporal resolution and rich physiological information. The quality of EEG signal analysis depends on the number of human subjects. However, because preparation and experiments are lengthy, it is difficult to recruit enough subjects. One possible approach is to use a generative model to synthesize EEG signals. Unfortunately, existing generative frameworks suffer from insufficient diversity and poor similarity, which may lower the quality of the generated EEG. To address these issues, we propose R\(^{2}\)WaveGAN, a WaveGAN-based model constrained by two correlated regularizers. Specifically, because WaveGAN can process time-series signals, we adapt it to fit an EEG dataset and then integrate a spectral regularizer and an anti-collapse regularizer to mitigate insufficient diversity and poor similarity, respectively, and thereby improve the generalization of R\(^{2}\)WaveGAN. The proposed model is evaluated on one publicly available dataset, Bi2015a. An ablation study validates the effectiveness of both regularizers. Compared with state-of-the-art models, R\(^{2}\)WaveGAN achieves better results on the evaluation metrics.
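The abstract does not give the formulas for the two regularizers, so the following is only a minimal NumPy sketch of the general idea, not the authors' implementation. It assumes a spectral term that compares batch-averaged log power spectra of real and generated signals, and an anti-collapse term in the style of a mode-seeking ratio (output distance divided by latent distance). The function names `spectral_penalty` and `diversity_penalty`, the use of Euclidean distance, and the `(batch, samples)` array layout are all hypothetical choices made for illustration.

```python
import numpy as np


def spectral_penalty(real, fake, eps=1e-8):
    """Mean squared gap between batch-averaged log power spectra.

    real, fake: arrays of shape (batch, samples), one signal per row.
    Assumption: this is a stand-in for a spectral regularizer, not the
    formula used in the paper.
    """
    # Power spectrum of every signal via the real-input FFT.
    real_psd = np.abs(np.fft.rfft(real, axis=-1)) ** 2
    fake_psd = np.abs(np.fft.rfft(fake, axis=-1)) ** 2
    # Average over the batch, then compare on a log scale.
    real_log = np.log(real_psd.mean(axis=0) + eps)
    fake_log = np.log(fake_psd.mean(axis=0) + eps)
    return float(np.mean((real_log - fake_log) ** 2))


def diversity_penalty(latents, fake, eps=1e-8):
    """Penalty that grows when different latents give near-identical outputs.

    latents: array of shape (batch, latent_dim) fed to the generator.
    fake:    array of shape (batch, samples) produced from those latents.
    Assumption: a mode-seeking style ratio with Euclidean distances,
    chosen here for simplicity.
    """
    # Pair each sample with its cyclic neighbour in the batch.
    z_gap = np.linalg.norm(latents - np.roll(latents, 1, axis=0), axis=1)
    x_gap = np.linalg.norm(fake - np.roll(fake, 1, axis=0), axis=1)
    # Output distance per unit of latent distance; small means collapse.
    ratio = x_gap / (z_gap + eps)
    # Inverting the mean ratio turns "low diversity" into "high penalty".
    return float(1.0 / (ratio.mean() + eps))
```

In a training loop, terms like these would typically be added to the generator loss with tunable weights; the weights and the exact combination are not stated in the abstract.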

T. Guo and L. Zhang contributed equally to this work.

Copyright information

© 2021 Springer Nature Switzerland AG

About this paper

Cite this paper

Guo, T. et al. (2021). Constrained Generative Model for EEG Signals Generation. In: Mantoro, T., Lee, M., Ayu, M.A., Wong, K.W., Hidayanto, A.N. (eds) Neural Information Processing. ICONIP 2021. Lecture Notes in Computer Science, vol 13110. Springer, Cham. https://doi.org/10.1007/978-3-030-92238-2_49

DOI: https://doi.org/10.1007/978-3-030-92238-2_49

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-92237-5

Online ISBN: 978-3-030-92238-2

eBook Packages: Computer Science, Computer Science (R0)