Abstract

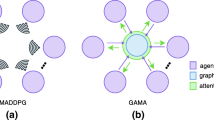

Cooperation is crucial in multi-agent environments, and the key to it is understanding the mutual interplay between agents. However, multi-agent environments are highly dynamic: the complex relationships between agents make policy learning difficult, and taking all coagents into consideration is costly. In addition, communication restrictions or privacy concerns may prevent agents from sharing information, which makes it even harder for them to understand each other. To tackle these difficulties, we propose Attention-Aware Actor (Tri-A), in which a graph-based attention mechanism adapts to the dynamics of the mutual interplay among agents. The graph kernels capture the relations between agents, including cooperation and confrontation, from local observations alone, without information exchange between agents or centralized processing, so that each coagent can make better decisions in a decentralized way. The refined observations produced by the attention-aware actors are used to learn to focus more on surrounding agents, which lets Tri-A act as a plug-in for existing multi-agent reinforcement learning (MARL) methods and improve their learning performance. Empirically, we show that our method yields significant improvements across a variety of algorithms.
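The abstract does not specify the attention computation, so the following is only an illustrative sketch of the general idea: one agent weighting the other agents visible in its own local observation and appending the result to its input. It uses standard scaled dot-product attention as a stand-in; the function name `refine_observation`, the projection matrices `w_q`, `w_k`, `w_v`, and all dimensions are hypothetical and are not taken from the paper.

```python
import numpy as np

def softmax(x):
    # Numerically stable softmax over a 1-D score vector.
    e = np.exp(x - np.max(x))
    return e / e.sum()

def refine_observation(own_feat, neighbor_feats, w_q, w_k, w_v):
    """Hypothetical refinement step using only one agent's local observation.

    own_feat:       (d,)   features of the observing agent
    neighbor_feats: (n, d) features of the n agents it can observe
    w_q, w_k, w_v:  (d, h) projection matrices (assumed, learned elsewhere)
    """
    query = own_feat @ w_q                      # (h,)
    keys = neighbor_feats @ w_k                 # (n, h)
    values = neighbor_feats @ w_v               # (n, h)
    # One relevance score per observed agent, scaled by sqrt(h).
    scores = keys @ query / np.sqrt(keys.shape[1])
    weights = softmax(scores)                   # (n,), sums to 1
    context = weights @ values                  # (h,) weighted summary
    # The refined observation keeps the agent's own features and
    # appends the attention-weighted summary of surrounding agents.
    return np.concatenate([own_feat, context]), weights

rng = np.random.default_rng(0)
d, h, n = 4, 8, 3                               # illustrative sizes only
own = rng.normal(size=d)
neighbors = rng.normal(size=(n, d))
w_q, w_k, w_v = (rng.normal(size=(d, h)) for _ in range(3))
refined, weights = refine_observation(own, neighbors, w_q, w_k, w_v)
```

In this sketch no information is exchanged between agents: every quantity is computed from what a single agent observes, and the refined vector could be passed to the actor of an existing MARL method in place of the raw observation.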

This work was supported by the National Key Research and Development Program of China (2017YFB1001901).




Copyright information

© 2021 ICST Institute for Computer Sciences, Social Informatics and Telecommunications Engineering

About this paper

Cite this paper

Zhao, C., Shi, D., Zhang, Y., Su, Y., Zhang, Y., Yang, S. (2021). Attention-Aware Actor for Cooperative Multi-agent Reinforcement Learning. In: Gao, H., Wang, X. (eds) Collaborative Computing: Networking, Applications and Worksharing. CollaborateCom 2021. Lecture Notes of the Institute for Computer Sciences, Social Informatics and Telecommunications Engineering, vol 407. Springer, Cham. https://doi.org/10.1007/978-3-030-92638-0_22


DOI: https://doi.org/10.1007/978-3-030-92638-0_22


Publisher Name: Springer, Cham

Print ISBN: 978-3-030-92637-3

Online ISBN: 978-3-030-92638-0

eBook Packages: Computer Science, Computer Science (R0)