Abstract

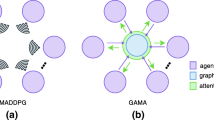

Recent work has shown that value-decomposition-based multi-agent reinforcement learning methods perform well on coordination tasks. Most current methods assume that agents can communicate to assist their decisions, which is impractical in some real-world settings. In this paper, we propose an observation-to-cognition method that enables agents to coordinate efficiently without communication. Inspired by neighborhood cognitive consistency (NCC), we introduce the concept of groups to help agents learn a belief, a type of consensus, that adjacent agents tend to accomplish similar sub-tasks in order to cooperate. We propose a novel agent structure named Belief in Graph Clustering (BGC), which uses a Graph Attention Network (GAT) to generate the agents' group belief. Within this structure, we further use an MLP-based module to characterize the features that are unique to each agent. In addition, to overcome the consistent agent problem of NCC, we introduce a split loss that distinguishes different agents and reduces the number of groups. Results show that the proposed method coordinates well and achieves a significant improvement on the SMAC benchmark. Owing to the group concept, our approach maintains strong performance as the number of agents increases.
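To make the GAT-based aggregation mentioned above more concrete, the following is a minimal sketch of a generic single-head graph attention layer applied to per-agent feature vectors. It is not the BGC architecture, whose details are not given in the abstract. The function name `gat_layer`, the toy observations, the adjacency matrix, and the weights `W` and `a` are all hypothetical choices made for illustration; the attention form (LeakyReLU score over concatenated projected features, followed by a softmax over neighbors) follows the standard GAT formulation.

```python
import math


def leaky_relu(x, slope=0.2):
    return x if x > 0 else slope * x


def matvec(W, h):
    # Multiply matrix W (list of rows) by vector h.
    return [sum(w * x for w, x in zip(row, h)) for row in W]


def gat_layer(features, adjacency, W, a):
    """Generic single-head graph attention (illustrative, not the BGC module).

    features:  one feature vector per agent
    adjacency: adjacency[i][j] is truthy if agent j is a neighbor of agent i
    W:         shared linear projection (d_out x d_in)
    a:         attention vector of length 2 * d_out
    Returns (aggregated_features, attention_weights).
    """
    z = [matvec(W, h) for h in features]
    n = len(features)
    out, attn = [], []
    for i in range(n):
        # Each agent always attends to itself as well as its neighbors.
        nbrs = [j for j in range(n) if adjacency[i][j] or j == i]
        scores = [
            leaky_relu(sum(p * q for p, q in zip(a, z[i] + z[j])))
            for j in nbrs
        ]
        # Numerically stable softmax over the neighborhood.
        m = max(scores)
        exps = [math.exp(s - m) for s in scores]
        total = sum(exps)
        alpha = [e / total for e in exps]
        row = [0.0] * n
        for j, w in zip(nbrs, alpha):
            row[j] = w
        attn.append(row)
        out.append([
            sum(w * z[j][k] for j, w in zip(nbrs, alpha))
            for k in range(len(z[i]))
        ])
    return out, attn


# Hypothetical toy data: agents 0 and 1 are adjacent, agent 2 is isolated.
obs = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
adj = [[1, 1, 0], [1, 1, 0], [0, 0, 1]]
W = [[1.0, 0.0], [0.0, 1.0]]   # identity projection, for readability
a = [0.5, -0.5, 0.5, -0.5]     # arbitrary attention vector
belief, attn = gat_layer(obs, adj, W, a)
```

In this toy setup, agents 0 and 1 mix each other's projected features, while the isolated agent 2 keeps its own. How BGC turns such aggregated features into a group belief, and how the split loss acts on them, is described in the paper body rather than here.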

© 2022 Springer Nature Switzerland AG

About this paper

Cite this paper

Zhou, T., Zhang, F., Tang, P., Wang, C. (2022). BGC: Multi-agent Group Belief with Graph Clustering. In: Chen, J., Lang, J., Amato, C., Zhao, D. (eds) Distributed Artificial Intelligence. DAI 2021. Lecture Notes in Computer Science, vol 13170. Springer, Cham. https://doi.org/10.1007/978-3-030-94662-3_4

DOI: https://doi.org/10.1007/978-3-030-94662-3_4

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-94661-6

Online ISBN: 978-3-030-94662-3

eBook Packages: Computer Science, Computer Science (R0)