Abstract

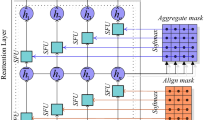

With the development of technology and the spread of the Internet, online platforms are increasingly used in all walks of life. Users post comments on these platforms, and the resulting exchange of information shapes how other people later view the same subject, which makes the analysis of such subjective evaluations particularly important. Sentiment analysis research has gradually moved toward judging sentiment on specific aspects of a subject, a task known as fine-grained sentiment classification. China has a large population of potential customers, and Chinese fine-grained sentiment classification has become a research hotspot. To address the low accuracy and poor classification performance of existing deep learning models, this paper conducts experiments on the Dianping merchant review data set. It proposes the BERT-ftfl-SA model, which integrates an attention mechanism to further strengthen the data features. Compared with traditional models such as SVM and FastText, its classification performance is significantly improved. The paper concludes that the improved BERT-ftfl-SA fine-grained sentiment classification model can classify the sentiment of Chinese text efficiently.

Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Zhou, F., Zhang, J., Song, Y. (2022). Chinese Fine-Grained Sentiment Classification Based on Pre-trained Language Model and Attention Mechanism. In: Qiu, M., Gai, K., Qiu, H. (eds) Smart Computing and Communication. SmartCom 2021. Lecture Notes in Computer Science, vol 13202. Springer, Cham. https://doi.org/10.1007/978-3-030-97774-0_4

DOI: https://doi.org/10.1007/978-3-030-97774-0_4

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-97773-3

Online ISBN: 978-3-030-97774-0

eBook Packages: Computer Science, Computer Science (R0)