Abstract

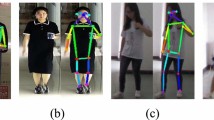

Conventional person re-identification (Re-ID) has advanced greatly, but it relies heavily on the assumption that a person's clothing remains unchanged. This assumption severely limits its applicability in practical cloth-changed scenarios, where performance drops dramatically. Existing cloth-changed methods mainly exploit body-shape information and ignore the relation between different clothes worn by the same identity. In this paper, we present a semantic-aware patching strategy for clothes augmentation. It greatly enriches clothing styles by randomly assembling semantic cloth patches, simulating the appearance of the same person wearing different clothes. This augmentation strategy has two main advantages: 1) it significantly improves robustness against clothing variations without collecting additional clothing data; 2) it preserves the semantic structure of the image, so it also fits cloth-unchanged scenarios. To further address the uncertainty introduced by clothing changes, we incorporate a Semantic Part-aware Feature Learning scheme that mines fine-grained part-level features and alleviates the misalignment issue under changed clothes. Extensive experiments on both cloth-changed and cloth-unchanged tasks demonstrate the superiority of the proposed method, which consistently improves performance over various baselines.

Acknowledgement

This work was supported in part by the Department of Science and Technology, Hubei Provincial People’s Government under Grant 2021CFB513, in part by the Hubei Key Laboratory of Transportation Internet of Things under Grant 2020III026GX, and in part by the Fundamental Research Funds for the Central Universities under Grant 191010001.

Copyright information

© 2022 Springer Nature Switzerland AG

About this paper

Cite this paper

Jia, X., Zhong, X., Ye, M., Liu, W., Huang, W., Zhao, S. (2022). Patching Your Clothes: Semantic-Aware Learning for Cloth-Changed Person Re-Identification. In: Þór Jónsson, B., et al. MultiMedia Modeling. MMM 2022. Lecture Notes in Computer Science, vol 13142. Springer, Cham. https://doi.org/10.1007/978-3-030-98355-0_11

DOI: https://doi.org/10.1007/978-3-030-98355-0_11

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-98354-3

Online ISBN: 978-3-030-98355-0

eBook Packages: Computer Science, Computer Science (R0)