Abstract

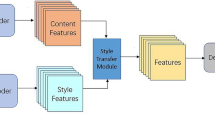

Arbitrary style transfer aims to produce a new stylized image by adding arbitrary artistic style elements to an original content image. Recent arbitrary style transfer algorithms struggle to recover enough content information while maintaining good stylization characteristics; balancing style information against content information is the main difficulty. Moreover, these algorithms tend to generate blurry blocks, color spots, and other defects in the image. In this paper, we propose an arbitrary style transfer algorithm based on an adaptive channel network (AdaCNet), which can flexibly select specific channels for style conversion to generate stylized images. In our algorithm, we introduce a content reconstruction loss to maintain local structure invariance, and a new style consistency loss that improves the stylization effect and style generalization ability. Experimental results show that, compared with other advanced methods, our algorithm maintains the balance between style information and content information, eliminates some defects such as blurry blocks, and also performs well on style generalization and on transferring high-resolution images.
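To make the idea of channel-selective style conversion concrete, the following is a minimal sketch and is not taken from the paper. It assumes that "style conversion" on a channel means matching that channel's mean and standard deviation to those of the style features (an adaptive-instance-normalization-style operation swapped in for illustration), and that the channels to convert are given by a hypothetical index set `selected`. How AdaCNet actually chooses channels, and how its losses are defined, is not stated in the abstract. The function name `adain_selected_channels` and the toy feature values are illustrative assumptions.

```python
from statistics import fmean, pstdev


def adain_selected_channels(content, style, selected, eps=1e-5):
    """Match per-channel mean/std of `content` to `style` on selected channels.

    content, style: lists of channels, each a flat list of activations.
    selected: set of channel indices to stylize (hypothetical selection;
              the paper's actual selection mechanism is not modeled here).
    """
    out = []
    for c, (c_ch, s_ch) in enumerate(zip(content, style)):
        if c not in selected:
            # Unselected channels pass through unchanged, keeping content.
            out.append(list(c_ch))
            continue
        c_mu, c_sigma = fmean(c_ch), pstdev(c_ch)
        s_mu, s_sigma = fmean(s_ch), pstdev(s_ch)
        # Normalize the content channel, then rescale to the style statistics.
        out.append([(x - c_mu) / (c_sigma + eps) * s_sigma + s_mu for x in c_ch])
    return out


# Toy features: 2 channels with 4 activations each (assumed values).
content = [[0.0, 1.0, 2.0, 3.0], [1.0, 1.0, 3.0, 3.0]]
style = [[10.0, 12.0, 14.0, 16.0], [5.0, 5.0, 9.0, 9.0]]
stylized = adain_selected_channels(content, style, selected={0})
```

In this sketch, channel 0 takes on the style statistics while channel 1 keeps its content activations, which is one simple way to picture a trade-off between stylization and content preservation at the channel level.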




Copyright information

© 2022 Springer Nature Switzerland AG

About this paper

Cite this paper

Wang, Y., Geng, Y. (2022). Arbitrary Style Transfer with Adaptive Channel Network. In: Þór Jónsson, B., et al. MultiMedia Modeling. MMM 2022. Lecture Notes in Computer Science, vol 13141. Springer, Cham. https://doi.org/10.1007/978-3-030-98358-1_38


DOI: https://doi.org/10.1007/978-3-030-98358-1_38


Publisher Name: Springer, Cham

Print ISBN: 978-3-030-98357-4

Online ISBN: 978-3-030-98358-1

eBook Packages: Computer Science, Computer Science (R0)