Abstract

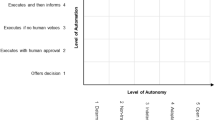

Autonomous machines are increasingly capable of executing complex tasks with the support of intelligent algorithms, and they are being deployed at an unprecedented pace. Meanwhile, human-machine teaming promises to accomplish ever more challenging tasks by combining the strengths and offsetting the weaknesses of both humans and machines. However, because humans, machines, and their interactions are all imperfect, potential safety issues should be anticipated so that researchers and engineers can prevent or address them in advance and make human-machine systems safer and more successful. In this paper, we propose a framework for human-machine (algorithm) collaboration and address possible safety issues both within and outside the human-machine system. We classify these issues as internal safety issues, which arise within the human-machine system, and external safety issues, which extend beyond it to the organizational and societal levels. To address these issues within the proposed framework, we list possible countermeasures drawn from the literature. These countermeasures can serve as "pedals" for controlling autonomous agents and human-machine teams and enable safer human-machine collaboration in the future.

This study is supported by the National Natural Science Foundation of China under grant numbers 72192824 and 71942005.




Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Ma, L., Wang, C. (2022). Safety Issues in Human-Machine Collaboration and Possible Countermeasures. In: Duffy, V.G. (eds) Digital Human Modeling and Applications in Health, Safety, Ergonomics and Risk Management. Anthropometry, Human Behavior, and Communication. HCII 2022. Lecture Notes in Computer Science, vol 13319. Springer, Cham. https://doi.org/10.1007/978-3-031-05890-5_21


DOI: https://doi.org/10.1007/978-3-031-05890-5_21


Publisher Name: Springer, Cham

Print ISBN: 978-3-031-05889-9

Online ISBN: 978-3-031-05890-5

eBook Packages: Computer Science, Computer Science (R0)