Abstract

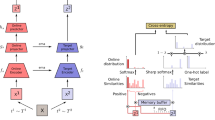

In recent years, contrastive learning has become an important technique for self-supervised representation learning and has achieved state-of-the-art (SOTA) performance in many fields, attracting increasing attention in the reinforcement learning (RL) literature. For example, by simply treating samples augmented from the same image as positive examples and those from different images as negative examples, instance contrastive learning combined with RL has achieved considerable improvements in sample efficiency. However, in the contrastive learning-related RL literature, the source images used for contrastive learning are sampled completely at random, and the feedback from the downstream RL task is not considered, which may severely limit the sample efficiency of the RL agent and lead to sample bias. To leverage the reward feedback of RL and alleviate sample bias, we propose a new negative sampling method, Q value-based Hard Mining (QHM). QHM uses Gaussian random projection to compress high-dimensional images into a low-dimensional space and uses the Q value as guidance for sampling hard negative pairs, i.e., samples with similar representations but different semantics, which can be used to learn a better contrastive representation. We conduct experiments on the DeepMind Control Suite and show that, compared with the random sampling used in the vanilla instance-based contrastive method, our method can effectively utilize the reward feedback in RL and improves the agent's performance in terms of both sample efficiency and final scores on 5 of 7 tasks.
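The abstract names two ingredients, a Gaussian random projection and Q-value-guided selection of hard negatives, without giving the exact selection rule. The following is a minimal sketch of one plausible reading, not the authors' implementation. The function names, the `q_gap` threshold, and the rule itself (take the nearest neighbours in the projected space whose Q value differs from the anchor's by at least `q_gap`) are all assumptions made for illustration.

```python
import numpy as np


def gaussian_random_projection(images, out_dim, rng):
    """Project flattened images to out_dim dimensions with a random Gaussian matrix."""
    flat = images.reshape(len(images), -1).astype(np.float64)
    # Entries drawn from N(0, 1/out_dim), so squared distances are preserved in expectation.
    proj = rng.normal(0.0, 1.0 / np.sqrt(out_dim), size=(flat.shape[1], out_dim))
    return flat @ proj


def mine_hard_negatives(codes, q_values, anchor_idx, num_neg, q_gap):
    """Hypothetical rule: return up to num_neg indices that are closest to the
    anchor in projected space while differing in Q value by at least q_gap."""
    dists = np.linalg.norm(codes - codes[anchor_idx], axis=1)
    # "Different semantics" is approximated here by a gap in Q value (an assumption).
    candidates = np.abs(q_values - q_values[anchor_idx]) >= q_gap
    candidates[anchor_idx] = False  # never pick the anchor itself
    idx = np.flatnonzero(candidates)
    order = np.argsort(dists[idx])  # nearest candidates first
    return idx[order[:num_neg]]
```

In this sketch, the projected codes stand in for "similar representation" and the Q-value gap stands in for "different semantics"; the returned indices would replace the uniformly sampled negatives in an instance-contrastive loss.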

Acknowledgements

This work was supported by the National Natural Science Foundation of China (No. 61772438 and No. 61375077). This work was also supported by the Innovation Strategy Research Program of Fujian Province, China (No. 2021R0012).

Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Chen, Q., Liang, D., Liu, Y. (2022). Hard Negative Sample Mining for Contrastive Representation in Reinforcement Learning. In: Gama, J., Li, T., Yu, Y., Chen, E., Zheng, Y., Teng, F. (eds) Advances in Knowledge Discovery and Data Mining. PAKDD 2022. Lecture Notes in Computer Science, vol 13281. Springer, Cham. https://doi.org/10.1007/978-3-031-05936-0_22

DOI: https://doi.org/10.1007/978-3-031-05936-0_22

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-05935-3

Online ISBN: 978-3-031-05936-0

eBook Packages: Computer Science, Computer Science (R0)