Abstract

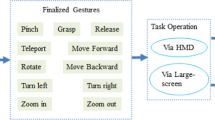

This work investigates bimanual interaction modalities for interacting across a virtual personal workspace and a virtual shared workspace in virtual reality (VR). On VR social platforms, personal and shared workspaces are commonly used to support virtual presentations, remote collaboration, and data sharing, and they demand reliable, intuitive, low-fatigue freehand gestures suitable for prolonged use during a virtual meeting. The interaction modalities in this work are asymmetric hand gestures formed by bimanually combining freehand gestures, including pointing, holding, and grabbing, which are known to be elemental and essential for interaction in VR. The design and implementation of the bimanual gestures follow clear gestural metaphors so that users feel a connection and empathy with the hand motions they perform. We conducted a user study to understand the advantages and drawbacks of three types of bimanual gestures, as well as their suitability for cross-workspace interaction in VR. We hope the findings will help inform the design of future VR social platforms.

Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Peng, C., Dong, Y., Cao, L. (2022). Real-Time Bimanual Interaction Across Virtual Workspaces. In: Chen, J.Y.C., Fragomeni, G. (eds) Virtual, Augmented and Mixed Reality: Design and Development. HCII 2022. Lecture Notes in Computer Science, vol 13317. Springer, Cham. https://doi.org/10.1007/978-3-031-05939-1_23

DOI: https://doi.org/10.1007/978-3-031-05939-1_23

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-05938-4

Online ISBN: 978-3-031-05939-1

eBook Packages: Computer Science, Computer Science (R0)