Abstract

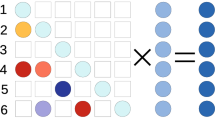

This paper presents a data management model for heterogeneous distributed systems that integrate reconfigurable accelerators. The model aims to reduce the complexity of developing applications that operate on multidimensional sparse data structures. It relies on a shared memory paradigm, which is convenient for the parallel programming of irregular applications. The distributed data, sliced into chunks, are managed by a Software-Distributed Shared Memory (S-DSM). Integrating reconfigurable accelerators into this S-DSM breaks the master-slave model: devices can themselves initiate accesses to chunks, which makes data-dependent accesses possible. We use chunk partitioning of multidimensional sparse data structures, such as sparse matrices and unstructured meshes, to access them as a continuous data stream. This model makes it possible to regularize the memory accesses of irregular applications, to avoid transferring unnecessary data by providing fine-grained data access, and to hide data access latencies efficiently by implicitly overlapping the flow of transferred data with the flow of processed data.
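
As an illustration of the chunk partitioning idea, the following minimal sketch slices a sparse matrix stored in CSR format into chunks of consecutive rows and then reads the chunks back as one continuous stream of nonzeros. It is not the S-DSM interface described in the paper: the names `Chunk`, `partition_csr`, `stream_nonzeros` and the `max_nnz` budget are hypothetical, and the choice of CSR as the storage format is an assumption made for this example.

```python
from dataclasses import dataclass
from typing import Iterator, List, Tuple


@dataclass
class Chunk:
    """Hypothetical chunk: a slice of consecutive CSR rows."""
    first_row: int        # global index of the first row in the chunk
    row_ptr: List[int]    # local row pointers, rebased to start at 0
    col_idx: List[int]    # column indices of the nonzeros in the chunk
    values: List[float]   # values of the nonzeros in the chunk


def partition_csr(row_ptr, col_idx, values, max_nnz) -> List[Chunk]:
    """Slice a CSR matrix into chunks of whole rows.

    Each chunk holds at most max_nnz nonzeros, except that a single row
    longer than max_nnz is kept whole in a chunk of its own.
    """
    chunks = []
    n_rows = len(row_ptr) - 1
    start = 0
    while start < n_rows:
        end = start + 1  # a chunk always contains at least one row
        while end < n_rows and row_ptr[end + 1] - row_ptr[start] <= max_nnz:
            end += 1
        lo, hi = row_ptr[start], row_ptr[end]
        local_ptr = [p - lo for p in row_ptr[start:end + 1]]
        chunks.append(Chunk(start, local_ptr, col_idx[lo:hi], values[lo:hi]))
        start = end
    return chunks


def stream_nonzeros(chunks) -> Iterator[Tuple[int, int, float]]:
    """Yield (row, column, value) triples chunk after chunk, as one stream."""
    for chunk in chunks:
        for r in range(len(chunk.row_ptr) - 1):
            for k in range(chunk.row_ptr[r], chunk.row_ptr[r + 1]):
                yield chunk.first_row + r, chunk.col_idx[k], chunk.values[k]
```

A consumer of `stream_nonzeros` sees a regular, sequential access pattern regardless of where chunk boundaries fall. In a real system the next chunk could be fetched while the current one is processed, which is the kind of overlap the abstract refers to; this sketch does not model that transfer.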

We validated the proposed data management model with two case studies: General Sparse Matrix-Matrix Multiplication (SpGEMM) and the Shallow Water Equations (SWE) over an unstructured mesh. The results show that the proposed model hides data access latencies efficiently, reaching computation speeds close to those of an ideal case (i.e., without latency).
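
To show why SpGEMM counts as an irregular, data-dependent kernel, here is a minimal sketch of the row-wise formulation on CSR inputs, commonly attributed to Gustavson. It is given only as an example of the access pattern and is not claimed to be the kernel evaluated in the paper; the function name `spgemm_rowwise` and its argument layout are assumptions.

```python
def spgemm_rowwise(a_ptr, a_idx, a_val, b_ptr, b_idx, b_val):
    """Compute C = A * B for CSR inputs, one output row at a time.

    Which rows of B are read depends on the column indices stored in A,
    so the accesses to B are only known at run time.
    """
    c_ptr, c_idx, c_val = [0], [], []
    for i in range(len(a_ptr) - 1):
        acc = {}  # sparse accumulator for row i of C: column -> value
        for k in range(a_ptr[i], a_ptr[i + 1]):
            j = a_idx[k]  # data-dependent: selects row j of B
            for m in range(b_ptr[j], b_ptr[j + 1]):
                col = b_idx[m]
                acc[col] = acc.get(col, 0.0) + a_val[k] * b_val[m]
        for col in sorted(acc):  # emit row i with ascending column indices
            c_idx.append(col)
            c_val.append(acc[col])
        c_ptr.append(len(c_idx))
    return c_ptr, c_idx, c_val
```

The inner lookup of row `j` of B is the access that a device must be able to initiate on its own when the matrix is distributed in chunks.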

This work was supported by the LEXIS project, funded by the EU’s Horizon 2020 research and innovation programme (2014–2020) under grant agreement no. 825532.

Copyright information

© 2022 Springer Nature Switzerland AG

About this paper

Cite this paper

Lenormand, E., Goubier, T., Cudennec, L., Charles, HP. (2022). Data Management Model to Program Irregular Compute Kernels on FPGA: Application to Heterogeneous Distributed System. In: Chaves, R., et al. Euro-Par 2021: Parallel Processing Workshops. Euro-Par 2021. Lecture Notes in Computer Science, vol 13098. Springer, Cham. https://doi.org/10.1007/978-3-031-06156-1_8

DOI: https://doi.org/10.1007/978-3-031-06156-1_8

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-06155-4

Online ISBN: 978-3-031-06156-1

eBook Packages: Computer Science, Computer Science (R0)