Abstract

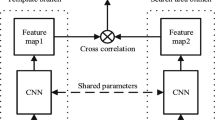

Siamese trackers perform similarity matching with templates (i.e., target models) to recursively localize objects within a search region. Several strategies have been proposed in the literature to update the template based on the tracker output, typically extracted from the target search region in the current frame, and thereby mitigate the effects of target drift. However, updating directly from the tracker output can corrupt the template, which limits the potential benefits of a template update strategy. This paper proposes a model adaptation method for Siamese trackers that uses a generative model to produce a synthetic template from the object search regions of several previous frames, rather than directly using the tracker output. Since the search region encompasses the target, attention from the search region is used for robust model adaptation. In particular, our approach relies on an auto-encoder trained through adversarial learning to detect changes in a target object’s appearance and to predict a future target template from a set of target templates localized from tracker outputs at previous frames. To prevent template corruption during the update, the proposed tracker also performs change detection using the generative model: updates are suspended until the tracker stabilizes, after which robust matching resumes through dynamic template fusion. Extensive experiments conducted on the VOT-16, VOT-17, OTB-50, and OTB-100 datasets highlight the effectiveness of our method, along with the impact of its key components. Results indicate that our proposed approach can outperform state-of-the-art trackers, and that its overall robustness allows tracking for a longer time before failure.
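
The update logic described in the abstract (predict a template from past templates, detect appearance change, suspend or fuse) can be illustrated with a minimal sketch. Everything concrete here is an assumption made for illustration and is not taken from the paper: the mean of past templates stands in for the adversarially trained auto-encoder, mean squared error stands in for the change-detection score, and the names `mean_template`, `change_score`, `update_template`, `threshold`, and `alpha` are hypothetical.

```python
from typing import Callable, List, Sequence, Tuple

Template = List[float]  # flattened template features (illustrative representation)


def mean_template(history: Sequence[Template]) -> Template:
    """Hypothetical stand-in for the generative model: element-wise mean of past templates."""
    n = len(history)
    return [sum(values) / n for values in zip(*history)]


def change_score(predicted: Template, observed: Template) -> float:
    """Assumed change measure: mean squared error between predicted and observed templates."""
    return sum((p - o) ** 2 for p, o in zip(predicted, observed)) / len(predicted)


def update_template(
    current: Template,
    initial: Template,
    history: Sequence[Template],
    observed: Template,
    predictor: Callable[[Sequence[Template]], Template] = mean_template,
    threshold: float = 0.5,  # assumed change-detection threshold
    alpha: float = 0.7,      # assumed weight given to the initial template
) -> Tuple[Template, bool]:
    """Return (template, updated).

    If the observed template deviates too much from the prediction, the update
    is suspended and the current template is kept. Otherwise the initial
    template is fused with the predicted one by a weighted average.
    """
    predicted = predictor(history)
    if change_score(predicted, observed) > threshold:
        return list(current), False  # abrupt change detected: suspend the update
    fused = [alpha * i + (1.0 - alpha) * p for i, p in zip(initial, predicted)]
    return fused, True


if __name__ == "__main__":
    history = [[1.0, 1.0], [1.0, 1.0]]
    initial = [0.0, 0.0]
    # Observation close to the prediction: the template is fused.
    print(update_template(initial, initial, history, [1.0, 1.0]))
    # Observation far from the prediction: the update is suspended.
    print(update_template(initial, initial, history, [5.0, 5.0]))
```

In this sketch the fused template is never built from the raw tracker output, which mirrors the abstract's stated motivation; the fixed weight `alpha` is a simplification, whereas the abstract describes the fusion as dynamic.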

© 2022 Springer Nature Switzerland AG

About this paper

Cite this paper

Kiran, M., Nguyen-Meidine, L.T., Sahay, R., Cruz, R.M.O.E., Blais-Morin, LA., Granger, E. (2022). Generative Target Update for Adaptive Siamese Tracking. In: El Yacoubi, M., Granger, E., Yuen, P.C., Pal, U., Vincent, N. (eds) Pattern Recognition and Artificial Intelligence. ICPRAI 2022. Lecture Notes in Computer Science, vol 13363. Springer, Cham. https://doi.org/10.1007/978-3-031-09037-0_41

DOI: https://doi.org/10.1007/978-3-031-09037-0_41

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-09036-3

Online ISBN: 978-3-031-09037-0

eBook Packages: Computer Science, Computer Science (R0)