Abstract

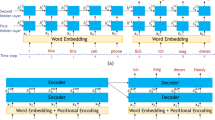

Recently, the transformer has established itself as the state of the art in text processing and has demonstrated impressive results in image processing, leading to a decline in the use of recurrence in neural network models. As established in the seminal paper Attention Is All You Need, recurrence can be removed in favor of a simpler model that uses only self-attention. While transformers have proven robust in a variety of text and image processing tasks, these tasks all have one thing in common: they are inherently non-temporal. Although transformers are also finding success in modeling time-series data, they have limitations compared to recurrent models. We explore a class of problems involving classification and prediction from time-series data and show that recurrence combined with self-attention can meet or exceed the performance of the transformer architecture. This class of problem, temporal classification and prediction of labels through time from time-series data, is of particular importance for medical datasets, which are often time-series based (Source code: https://github.com/imics-lab/recurrence-with-self-attention).
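The abstract describes combining recurrence with self-attention to predict a label at every time step. The following is a minimal, untrained sketch of that data flow in plain Python. It is not the authors' implementation (that lives in the linked repository). An LSTM produces one hidden state per step. Scaled dot-product self-attention over those states then yields a context vector per step. A linear layer maps the concatenated state and context to class logits. Using Q = K = V = the LSTM states without learned projections, and applying a causal mask, are simplifying assumptions made here. The names `TinyLSTM`, `causal_self_attention`, and `classify_through_time` are hypothetical, and all weights are random.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def matvec(W, x):
    # W is a list of rows; returns the product W @ x as a list.
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def init(rows, cols, rng):
    # Small random weights; this sketch is never trained.
    return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]


class TinyLSTM:
    """Hypothetical single-layer LSTM forward pass returning h_t for every step."""

    def __init__(self, d_in, d_h, rng):
        self.d_h = d_h
        # One weight matrix per gate (input, forget, output, candidate) over [x_t, h_{t-1}].
        self.W = {gate: init(d_h, d_in + d_h, rng) for gate in "ifoc"}
        self.b = {gate: [0.0] * d_h for gate in "ifoc"}

    def forward(self, xs):
        h = [0.0] * self.d_h
        c = [0.0] * self.d_h
        hs = []
        for x in xs:
            z = list(x) + h  # concatenate the current input with the previous hidden state
            pre = {gate: [a + b for a, b in zip(matvec(self.W[gate], z), self.b[gate])]
                   for gate in "ifoc"}
            i = [sigmoid(v) for v in pre["i"]]
            f = [sigmoid(v) for v in pre["f"]]
            o = [sigmoid(v) for v in pre["o"]]
            cand = [math.tanh(v) for v in pre["c"]]
            c = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c, i, cand)]
            h = [oj * math.tanh(cj) for oj, cj in zip(o, c)]
            hs.append(h)
        return hs


def causal_self_attention(hs):
    """Scaled dot-product attention with Q = K = V = hs; step t sees steps 0..t only."""
    d = len(hs[0])
    contexts, attn = [], []
    for t, q in enumerate(hs):
        scores = [sum(a * b for a, b in zip(q, hs[s])) / math.sqrt(d) for s in range(t + 1)]
        m = max(scores)  # subtract the max for a numerically stable softmax
        weights = [math.exp(s - m) for s in scores]
        total = sum(weights)
        weights = [w / total for w in weights]
        contexts.append([sum(w * hs[s][k] for s, w in enumerate(weights)) for k in range(d)])
        attn.append(weights)
    return contexts, attn


def classify_through_time(xs, n_classes, d_h=8, seed=0):
    """Return one predicted label per time step plus the attention weights."""
    rng = random.Random(seed)
    lstm = TinyLSTM(len(xs[0]), d_h, rng)
    hs = lstm.forward(xs)
    contexts, attn = causal_self_attention(hs)
    W_out = init(n_classes, 2 * d_h, rng)
    labels = []
    for h, ctx in zip(hs, contexts):
        logits = matvec(W_out, h + ctx)  # recurrent state concatenated with attention context
        labels.append(max(range(n_classes), key=lambda k: logits[k]))
    return labels, attn


series = [[math.sin(0.3 * t), math.cos(0.3 * t), 0.1 * t] for t in range(12)]
labels, attn = classify_through_time(series, n_classes=3)
print(len(labels), labels)
```

The causal mask is a design choice for online prediction, where each label may depend only on past and current observations. Dropping the mask would let every step attend to the whole sequence, which suits offline classification of a completed recording.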

References

Australian Bureau of Meteorology (BOM): Australia, rain tomorrow. Australian BOM National Weather Observations

Bahdanau, D., Cho, K., Bengio, Y.: Neural machine translation by jointly learning to align and translate. arXiv 1409, September 2014

Banos, O., et al.: Design, implementation and validation of a novel open framework for agile development of mobile health applications. Biomed. Eng. OnLine 14(2), S6 (2015)

Cheng, J., Dong, L., Lapata, M.: Long short-term memory-networks for machine reading. In: Proceedings of the 2016 Conference on Empirical Methods in Natural Language Processing, pp. 551–561, January 2016

Hochreiter, S., Schmidhuber, J.: Long short-term memory. Neural Comput. 9(8), 1735–1780 (1997). https://doi.org/10.1162/neco.1997.9.8.1735

Katrompas, A., Metsis, V.: Enhancing LSTM models with self-attention and stateful training. In: Arai, K. (ed.) IntelliSys 2021. LNNS, vol. 294, pp. 217–235. Springer, Cham (2022). https://doi.org/10.1007/978-3-030-82193-7_14

Lin, Z., et al.: A structured self-attentive sentence embedding, March 2017

Luong, M.T., Pham, H., Manning, C.: Effective approaches to attention-based neural machine translation, August 2015

Qin, Y., Song, D., Cheng, H., Cheng, W., Jiang, G., Cottrell, G.: A dual-stage attention-based recurrent neural network for time series prediction, April 2017

Rahman, L., Mohammed, N., Al Azad, A.K.: A new LSTM model by introducing biological cell state. In: 2016 3rd International Conference on Electrical Engineering and Information Communication Technology (ICEEICT), pp. 1–6 (2016)

De Vito, S.: Air quality data set. https://archive.ics.uci.edu/ml/datasets/Air+quality

Shaw, P., Uszkoreit, J., Vaswani, A.: Self-attention with relative position representations, pp. 464–468, January 2018

Vaswani, A., et al.: Attention is all you need. In: 31st Conference on Neural Information Processing Systems (NIPS 2017), June 2017

Vavoulas, G., Chatzaki, C., Malliotakis, T., Pediaditis, M., Tsiknakis, M.: The MobiAct dataset: recognition of activities of daily living using smartphones. In: Proceedings of the International Conference on Information and Communication Technologies for Ageing Well and e-Health, pp. 143–151. SciTePress (2016)

Wang, J., Yang, Y., Mao, J., Huang, Z., Huang, C., Xu, W.: CNN-RNN: a unified framework for multi-label image classification. In: 2016 IEEE Conference on Computer Vision and Pattern Recognition, April 2016

Wu, N., Green, B., Ben, X., O’Banion, S.: Deep transformer models for time series forecasting: the influenza prevalence case (2020)

Zhao, H., Jia, J., Koltun, V.: Exploring self-attention for image recognition, pp. 10073–10082, June 2020

Appendix A: Detailed Classification Report Results

Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Katrompas, A., Ntakouris, T., Metsis, V. (2022). Recurrence and Self-attention vs the Transformer for Time-Series Classification: A Comparative Study. In: Michalowski, M., Abidi, S.S.R., Abidi, S. (eds) Artificial Intelligence in Medicine. AIME 2022. Lecture Notes in Computer Science, vol 13263. Springer, Cham. https://doi.org/10.1007/978-3-031-09342-5_10

DOI: https://doi.org/10.1007/978-3-031-09342-5_10

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-09341-8

Online ISBN: 978-3-031-09342-5

eBook Packages: Computer Science, Computer Science (R0)