Abstract

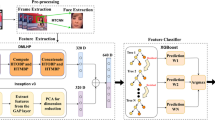

It is becoming increasingly easy to generate forged images and videos using pre-trained deepfake models. Such forgeries are difficult to distinguish from genuine content with the naked eye, which poses threats to security and privacy. This chapter describes a deepfake detection method that uses multiple feature fusion to identify forged images. An image is first preprocessed to extract high-frequency features in the spatial domain and in the frequency domain. Two autoencoder networks then extract features from the two preprocessed representations, and the extracted features are fused. Finally, the fused feature is input to a classifier comprising three fully-connected layers and a max-pooling layer. The experimental results demonstrate that fusing the two features yields an average detection accuracy of 98.62%, considerably higher than the accuracy obtained using either feature alone. The results also show that the method has better accuracy and generalization capability than state-of-the-art deepfake detection methods.
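The preprocessing and fusion stages described in the abstract can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the authors' implementation. The abstract does not name the filters or the fusion rule, so the 3x3 Laplacian kernel (`LAPLACIAN`), the circular low-frequency cut-off (`radius`) and concatenation as the fusion operator are all assumptions, and every function name is hypothetical. The two autoencoders and the classifier are omitted, so the flattened filtered maps stand in for the encoded features that would actually be fused.

```python
import cmath

# Assumed spatial high-pass filter: a 3x3 Laplacian kernel. The abstract does
# not name the filter, so this choice is illustrative only.
LAPLACIAN = [[0, -1, 0],
             [-1, 4, -1],
             [0, -1, 0]]


def spatial_high_pass(img):
    """Filter a grayscale image (list of rows) with LAPLACIAN.

    Borders use edge replication, so the output has the input's size.
    """
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    yy = min(max(y + dy, 0), h - 1)  # clamp row index
                    xx = min(max(x + dx, 0), w - 1)  # clamp column index
                    acc += LAPLACIAN[dy + 1][dx + 1] * img[yy][xx]
            out[y][x] = acc
    return out


def dft2(img):
    """Naive 2-D discrete Fourier transform (O((h*w)^2), small inputs only)."""
    h, w = len(img), len(img[0])
    return [[sum(img[y][x] * cmath.exp(-2j * cmath.pi * (u * y / h + v * x / w))
                 for y in range(h) for x in range(w))
             for v in range(w)]
            for u in range(h)]


def frequency_high_pass(img, radius=1):
    """Magnitude spectrum with frequencies within `radius` of DC zeroed.

    `radius` is a hypothetical cut-off parameter, not taken from the paper.
    """
    spec = dft2(img)
    h, w = len(spec), len(spec[0])
    out = [[0.0] * w for _ in range(h)]
    for u in range(h):
        for v in range(w):
            # Distance from DC, accounting for the DFT's wrap-around indexing.
            fu, fv = min(u, h - u), min(v, w - v)
            if fu * fu + fv * fv > radius * radius:
                out[u][v] = abs(spec[u][v])
    return out


def flatten(matrix):
    """Turn a 2-D map into a 1-D feature vector (row-major order)."""
    return [value for row in matrix for value in row]


def fuse(features_a, features_b):
    """Fuse two feature vectors by concatenation (an assumed fusion rule)."""
    return list(features_a) + list(features_b)


# Usage on a 4x4 checkerboard, whose content is almost entirely high-frequency.
image = [[float((x + y) % 2) for x in range(4)] for y in range(4)]
spatial = spatial_high_pass(image)
spectral = frequency_high_pass(image)
fused = fuse(flatten(spatial), flatten(spectral))
print(len(fused))  # → 32
```

The naive DFT keeps the example dependency-free but scales quadratically with the pixel count; a practical pipeline would use an FFT library and pass each filtered map through its own autoencoder before fusion.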

Copyright information

© 2022 IFIP International Federation for Information Processing

About this paper

Cite this paper

Zhang, Y. et al. (2022). Deepfake Detection Using Multiple Feature Fusion. In: Peterson, G., Shenoi, S. (eds) Advances in Digital Forensics XVIII. DigitalForensics 2022. IFIP Advances in Information and Communication Technology, vol 653. Springer, Cham. https://doi.org/10.1007/978-3-031-10078-9_7

DOI: https://doi.org/10.1007/978-3-031-10078-9_7

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-10077-2

Online ISBN: 978-3-031-10078-9

eBook Packages: Computer Science, Computer Science (R0)