Abstract

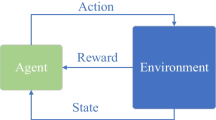

Discovering efficient exploration strategies is a central challenge in reinforcement learning (RL). Deep reinforcement learning (DRL) methods proposed in recent years have mainly focused on improving the generalization of models while neglecting their explainability. In this study, an embedding explanation for the advantage actor-critic algorithm (EEA2C) is proposed to balance exploration and exploitation in DRL models. Specifically, the proposed algorithm first explains the agent’s actions and then uses the explanation to guide exploration. A fusion strategy is designed to retain the information from experience that is helpful for exploration. Based on the output of the fusion strategy, a variational autoencoder (VAE) encodes the task-related explanation into a probabilistic latent representation. Finally, the latent representation of the VAE is incorporated into the agent’s policy as prior knowledge. Experimental results on six Atari environments show that the proposed method improves the agent’s exploratory capabilities with explainable knowledge.
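The last two steps of the abstract (encoding an explanation into a probabilistic latent variable and conditioning the policy on it) can be illustrated with a small sketch. This is not the authors' implementation: the abstract does not specify network architectures, so the linear encoder, the dimensions, the random weights, and every function name below are assumptions chosen only to make the idea concrete. The sketch uses the standard VAE reparameterization trick and the closed-form KL divergence to a standard normal prior.

```python
import math
import random

def linear(weights, bias, x):
    """Return W @ x + b for a list-of-rows weight matrix."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]

def encode(explanation, w_mu, b_mu, w_logvar, b_logvar):
    """Hypothetical encoder: map an explanation vector to a diagonal Gaussian."""
    return linear(w_mu, b_mu, explanation), linear(w_logvar, b_logvar, explanation)

def sample_latent(mu, logvar, rng):
    """Reparameterization trick: z = mu + sigma * eps, with eps ~ N(0, 1)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, logvar)]

def kl_to_standard_normal(mu, logvar):
    """KL(N(mu, sigma^2) || N(0, I)) for a diagonal Gaussian."""
    return 0.5 * sum(math.exp(lv) + m * m - 1.0 - lv for m, lv in zip(mu, logvar))

def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    top = max(logits)
    exps = [math.exp(v - top) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def policy(state_features, z, w_pi, b_pi):
    """Actor head conditioned on state features concatenated with the latent z."""
    return softmax(linear(w_pi, b_pi, state_features + z))

def random_matrix(rows, cols, rng, scale=0.1):
    """Small random weights standing in for trained parameters."""
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]

rng = random.Random(0)
EXPL_DIM, STATE_DIM, LATENT_DIM, N_ACTIONS = 6, 4, 2, 3  # illustrative sizes only

w_mu = random_matrix(LATENT_DIM, EXPL_DIM, rng)
w_logvar = random_matrix(LATENT_DIM, EXPL_DIM, rng)
w_pi = random_matrix(N_ACTIONS, STATE_DIM + LATENT_DIM, rng)

# Stand-ins for a fused explanation and for the agent's state features.
explanation = [rng.random() for _ in range(EXPL_DIM)]
state_features = [rng.random() for _ in range(STATE_DIM)]

mu, logvar = encode(explanation, w_mu, [0.0] * LATENT_DIM, w_logvar, [0.0] * LATENT_DIM)
z = sample_latent(mu, logvar, rng)
action_probs = policy(state_features, z, w_pi, [0.0] * N_ACTIONS)
kl = kl_to_standard_normal(mu, logvar)
```

In a trained agent the KL term would typically be added to the VAE loss as a regularizer, while `action_probs` would feed the actor-critic update. How EEA2C weights and combines these terms is not stated in the abstract.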

Acknowledgements

This work is sponsored by the Shanghai Sailing Program (No. 20YF1413800).

Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Wang, X., Liu, Y., Chang, Y., Jiang, C., Zhang, Q. (2022). Incorporating Explanations to Balance the Exploration and Exploitation of Deep Reinforcement Learning. In: Memmi, G., Yang, B., Kong, L., Zhang, T., Qiu, M. (eds) Knowledge Science, Engineering and Management. KSEM 2022. Lecture Notes in Computer Science, vol 13369. Springer, Cham. https://doi.org/10.1007/978-3-031-10986-7_16

DOI: https://doi.org/10.1007/978-3-031-10986-7_16

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-10985-0

Online ISBN: 978-3-031-10986-7

eBook Packages: Computer Science, Computer Science (R0)