Abstract

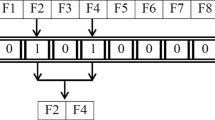

Feature selection is an efficient way to extract the useful information embedded in data and thereby improve the performance of machine learning. However, although classifier performance directly affects the result of feature selection, it is rarely considered during feature selection. In this paper, we formulate a multi-objective optimization problem that simultaneously minimizes the number of selected features and the classification error rate by jointly optimizing the selected features and the classifier parameters. We then propose an Improved Multi-Objective Gray Wolf Optimizer (IMOGWO) to solve this problem. First, IMOGWO combines the discrete binary solution and the classifier parameters into a mixed solution. Second, the algorithm uses an initialization strategy based on the tent chaotic map, the sinusoidal chaotic map, and Opposition-based Learning (OBL) to improve the quality of the initial solutions, and uses a local search strategy to find new solutions near the Pareto front. Finally, a mutation operator is introduced into the update mechanism to increase population diversity. Experiments on 15 classic datasets show that the algorithm outperforms the comparison algorithms.
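To make the ingredients named in the abstract concrete, the following is a minimal Python sketch, not the authors' implementation. It illustrates a mixed solution (binary feature mask plus one classifier parameter), a tent-map-based initial solution, its opposition-based counterpart, and the Pareto dominance test for the two minimization objectives. The names `MixedSolution`, `tent_map`, `chaotic_init`, `opposite`, and `dominates` are hypothetical, as are the tent-map parameter `mu = 0.7`, the threshold 0.5 used to binarize the chaotic sequence, and the scaling of the classifier parameter to [0, 1]. The sinusoidal map, the local search, the mutation operator, and the classifier evaluation itself are left out.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class MixedSolution:
    mask: List[int]  # one bit per feature, 1 = feature selected
    param: float     # one classifier parameter, scaled to [0, 1] (assumption)


def tent_map(x: float, mu: float = 0.7) -> float:
    """One iteration of a skewed tent map on [0, 1]; mu = 0.7 is an assumed value."""
    return x / mu if x < mu else (1.0 - x) / (1.0 - mu)


def chaotic_init(n_features: int, x0: float) -> MixedSolution:
    """Build one mixed solution from a tent-map sequence started at x0."""
    x = x0
    mask = []
    for _ in range(n_features):
        x = tent_map(x)
        mask.append(1 if x > 0.5 else 0)  # binarize with an assumed threshold
    x = tent_map(x)                        # next chaotic value becomes the parameter
    return MixedSolution(mask=mask, param=x)


def opposite(sol: MixedSolution) -> MixedSolution:
    """Opposition-based counterpart: lower + upper - value, with bounds [0, 1]."""
    return MixedSolution(mask=[1 - b for b in sol.mask], param=1.0 - sol.param)


def dominates(a: Tuple[float, float], b: Tuple[float, float]) -> bool:
    """True if objective vector a Pareto-dominates b (both objectives minimized)."""
    no_worse = all(x <= y for x, y in zip(a, b))
    strictly_better = any(x < y for x, y in zip(a, b))
    return no_worse and strictly_better


# Usage: one chaotic solution and its opposite; objective vectors are
# (number of selected features, classification error rate).
sol = chaotic_init(n_features=8, x0=0.37)
opp = opposite(sol)
better = dominates((3, 0.10), (5, 0.20))
```

In an initialization of this kind, each chaotic solution and its opposite would both be evaluated, with the better candidates kept for the starting population. How IMOGWO performs that selection is not stated in the abstract.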

Acknowledgment

This study is supported in part by the National Natural Science Foundation of China (62172186, 62002133, 61872158, 61806083), in part by the Science and Technology Development Plan (International Cooperation) Project of Jilin Province (20190701019GH, 20190701002GH, 20210101183JC, 20210201072GX) and in part by the Young Science and Technology Talent Lift Project of Jilin Province (QT202013). Geng Sun is the corresponding author.

Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Pang, Y., Wang, A., Lian, Y., Li, J., Sun, G. (2022). A Multi-objective Optimization Method for Joint Feature Selection and Classifier Parameter Tuning. In: Memmi, G., Yang, B., Kong, L., Zhang, T., Qiu, M. (eds) Knowledge Science, Engineering and Management. KSEM 2022. Lecture Notes in Computer Science, vol 13369. Springer, Cham. https://doi.org/10.1007/978-3-031-10986-7_19

DOI: https://doi.org/10.1007/978-3-031-10986-7_19

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-10985-0

Online ISBN: 978-3-031-10986-7

eBook Packages: Computer Science (R0)