Abstract

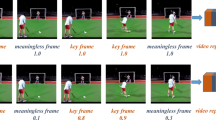

Using video sequence order as a supervisory signal has proven effective for initializing 2D ConvNets for downstream tasks such as video retrieval and action recognition. Earlier works framed it as a sequence sorting task, an odd-one-out task, or a sequence order prediction task. In this work, we propose an enhanced unsupervised video representation learning method that solves order prediction and contrastive learning jointly, using a 2D CNN as the backbone. With contrastive learning, we aim to pull different temporally transformed versions of the same video sequence closer together while pushing other sequences away in the latent space. In addition, instead of pairwise feature extraction, the features are learned with 1D temporal convolutions. Experiments on the UCF-101 and HMDB-51 datasets show that our proposal outperforms other 2D-CNN-based methods on both downstream tasks (video retrieval and action recognition) and achieves satisfactory results compared to 3D-CNN-based methods.
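The joint objective described in the abstract can be illustrated with a minimal sketch. The abstract does not give the exact loss, so everything here is an assumption made for illustration: an InfoNCE-style contrastive term, a plain cross-entropy term for order prediction, and the hypothetical names `info_nce`, `cross_entropy`, `joint_loss`, `temperature`, and `weight`. Embeddings are plain Python lists standing in for 2D-CNN features.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def log_sum_exp(values):
    """Numerically stable log(sum(exp(v))) over a list of floats."""
    m = max(values)
    return m + math.log(sum(math.exp(v - m) for v in values))

def info_nce(anchor, positive, negatives, temperature=0.1):
    """Assumed contrastive term: small when the anchor is close to the
    positive (another temporal transform of the same video) and far
    from the negatives (other videos)."""
    logits = [cosine(anchor, positive) / temperature]
    logits += [cosine(anchor, n) / temperature for n in negatives]
    return log_sum_exp(logits) - logits[0]

def cross_entropy(logits, target):
    """Cross-entropy for one sample; target indexes the true order class."""
    return log_sum_exp(logits) - logits[target]

def joint_loss(order_logits, order_target, anchor, positive, negatives,
               weight=1.0):
    """Order-prediction loss plus a weighted contrastive loss.
    The weighting scheme is a hypothetical choice, not the paper's."""
    contrastive = info_nce(anchor, positive, negatives)
    return cross_entropy(order_logits, order_target) + weight * contrastive

# Toy usage: two views of one video agree, one other video is orthogonal.
loss = joint_loss([2.0, 0.0, 0.0], 0, [1.0, 0.0], [1.0, 0.0], [[0.0, 1.0]])
print(round(loss, 4))
```

In this toy setting the contrastive term is near zero because the two views coincide and the negative is orthogonal; swapping the positive and the negative makes it large, which is the pull-together, push-apart behaviour the abstract describes.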

Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Kumar, V., Tripathi, V., Pant, B. (2022). Enhancing Unsupervised Video Representation Learning by Temporal Contrastive Modelling Using 2D CNN. In: Raman, B., Murala, S., Chowdhury, A., Dhall, A., Goyal, P. (eds) Computer Vision and Image Processing. CVIP 2021. Communications in Computer and Information Science, vol 1568. Springer, Cham. https://doi.org/10.1007/978-3-031-11349-9_43

DOI: https://doi.org/10.1007/978-3-031-11349-9_43

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-11348-2

Online ISBN: 978-3-031-11349-9

eBook Packages: Computer Science, Computer Science (R0)