Abstract

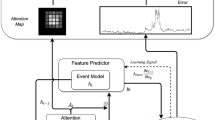

Researchers have tackled the event segmentation problem with offline training schemes, providing full or weak supervision through manually annotated labels or using self-supervised epoch-based training. Most works consider videos that are at most tens of minutes long. We present a self-supervised perceptual prediction framework that performs temporal event segmentation by building stable representations of objects over time, and we demonstrate it on long videos spanning several days at 25 FPS. The approach is deceptively simple but quite effective. We rely on predictions of high-level features computed by a standard deep learning backbone. For prediction, we use an LSTM augmented with an attention mechanism and trained in a self-supervised manner using the prediction error. The self-learned attention maps effectively localize and track the event-related objects in each frame. The proposed approach does not require labels. It requires only a single pass through the video, with no separate training set. Given the lack of datasets of very long videos, we demonstrate our method on 10 days (254 hours) of continuous wildlife monitoring video that we collected with the required permissions. We find that the approach is robust to varied environmental conditions, including day/night changes, rain, sharp shadows, and wind. For the task of temporally locating events at the activity level, we achieved an 80% activity recall rate at one false activity detection every 50 minutes. We will make the dataset, which is the first of its kind, and the code available to the research community. The project page is available at https://ramymounir.com/publications/EventSegmentation/.
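To make the single-pass, prediction-error idea concrete, the following is a minimal sketch and not the authors' implementation. It assumes per-frame feature vectors are already available (in the paper they come from a deep learning backbone), and it swaps the attention-augmented LSTM for a simple exponential-moving-average predictor so that the example stays dependency-free. The function name `segment_stream` and the parameters `alpha` and `threshold` are hypothetical.

```python
from typing import Iterable, List, Sequence


def segment_stream(
    features: Iterable[Sequence[float]],
    alpha: float = 0.5,      # hypothetical update rate of the stand-in predictor
    threshold: float = 1.0,  # hypothetical error level that flags an event frame
) -> List[int]:
    """Return indices of frames whose prediction error exceeds `threshold`.

    The stream is consumed once, frame by frame. The predictor is updated
    online from each new observation, so no labels and no separate
    training set are needed.
    """
    prediction = None  # predicted feature vector for the next frame
    flagged: List[int] = []
    for t, feat in enumerate(features):
        if prediction is None:
            prediction = list(feat)  # first frame: nothing to compare against
            continue
        # Prediction error: squared L2 distance between prediction and observation.
        error = sum((p - f) ** 2 for p, f in zip(prediction, feat))
        if error > threshold:
            flagged.append(t)
        # Online update: move the prediction toward what was just observed.
        # (Assumption: an EMA stands in for the LSTM's gradient-based update.)
        prediction = [p + alpha * (f - p) for p, f in zip(prediction, feat)]
    return flagged


if __name__ == "__main__":
    # Toy stream: a static scene, a sudden change for three frames, then static again.
    stream = [[0.0, 0.0]] * 5 + [[3.0, 4.0]] * 3 + [[0.0, 0.0]] * 4
    print(segment_stream(stream))
```

In this toy stream the error spikes when the scene changes at frame 5 and decays as the predictor adapts, which is the behavior a prediction-error signal is expected to show around an event. Because the loop keeps only the current prediction in memory, it scales to streams such as the 254 hours at 25 FPS described above (254 × 3600 × 25 = 22,860,000 frames).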

Notes

- 1. Available at https://ramymounir.com/publications/EventSegmentation/.

Acknowledgment

This research was supported in part by the US National Science Foundation grants CNS 1513126 and IIS 1956050. The bird video dataset used in this paper was made possible through funding from the Polish National Science Centre (grants NCN 2011/01/M/NZ8/03344 and 2018/29/B/NZ8/02312). Province Sud (New Caledonia) issued all permits required for data collection, from 2002 to 2020.

Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Mounir, R., Gula, R., Theuerkauf, J., Sarkar, S. (2022). Spatio-Temporal Event Segmentation for Wildlife Extended Videos. In: Raman, B., Murala, S., Chowdhury, A., Dhall, A., Goyal, P. (eds) Computer Vision and Image Processing. CVIP 2021. Communications in Computer and Information Science, vol 1568. Springer, Cham. https://doi.org/10.1007/978-3-031-11349-9_5

DOI: https://doi.org/10.1007/978-3-031-11349-9_5

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-11348-2

Online ISBN: 978-3-031-11349-9

eBook Packages: Computer Science, Computer Science (R0)