Abstract

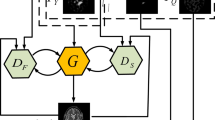

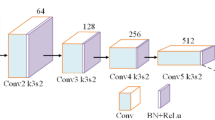

This paper uses a generative adversarial network (GAN) as a complete model to fuse computed tomography (CT) and magnetic resonance imaging (MRI) brain images. To produce a fused image that retains bone structures from the CT image and soft tissue from the MRI image, our method sets up an adversarial game between a generator and a discriminator. We use a GAN rather than conventional fusion methods, with the aim of a stable training process, and our architecture can handle multi-source medical images of different resolutions. The efficacy of the proposed method is demonstrated using several evaluation metrics and by comparison with conventional techniques. In this comparison, the proposed method produces fused images free of distortion and false artefacts, and the images obtained by fusing the content of both sources give the best results for visualization and diagnosis of the condition.
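The adversarial game mentioned above can be illustrated with the standard GAN objective. This is a minimal sketch, not the authors' loss: the abstract does not state the exact loss terms or which images the discriminator treats as real, so the function names and the inputs `d_real` and `d_fake` (discriminator probabilities for reference images and for fused images) are assumptions made for illustration.

```python
import math


def discriminator_loss(d_real, d_fake, eps=1e-12):
    """Standard GAN discriminator loss.

    d_real: discriminator probabilities for reference ("real") images.
    d_fake: discriminator probabilities for generator (fused) images.
    Both are hypothetical inputs; the paper's actual choice is not given.
    """
    real_term = -sum(math.log(p + eps) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p + eps) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_adversarial_loss(d_fake, eps=1e-12):
    """Non-saturating generator loss: low when fused images are rated as real."""
    return -sum(math.log(p + eps) for p in d_fake) / len(d_fake)


if __name__ == "__main__":
    # An undecided discriminator outputs 0.5 for every image.
    print(discriminator_loss([0.5, 0.5], [0.5, 0.5]))
    print(generator_adversarial_loss([0.5, 0.5]))
```

In a fusion setting the generator loss would typically be combined with a content term that ties the fused image to the CT and MRI inputs; that term is omitted here because the abstract does not describe it.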

Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Narute, B., Bartakke, P. (2022). Brain MRI and CT Image Fusion Using Generative Adversarial Network. In: Raman, B., Murala, S., Chowdhury, A., Dhall, A., Goyal, P. (eds) Computer Vision and Image Processing. CVIP 2021. Communications in Computer and Information Science, vol 1568. Springer, Cham. https://doi.org/10.1007/978-3-031-11349-9_9

DOI: https://doi.org/10.1007/978-3-031-11349-9_9

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-11348-2

Online ISBN: 978-3-031-11349-9

eBook Packages: Computer Science (R0)