Abstract

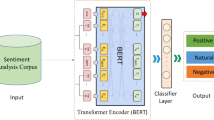

Sentiment analysis and part-of-speech tagging are two core tasks in Natural Language Processing (NLP). Bidirectional Encoder Representations from Transformers (BERT) is a widely used NLP model proposed by Google. However, the sentiment analysis accuracy of BERT is not significantly better than that of traditional machine learning methods. To improve it, we propose Bidirectional Encoder Representation from Transformers with Part-of-Speech Information (BERT-POS), a sentiment analysis model that incorporates part-of-speech tagging and is designed for iCourse (launched in 2014 and one of the largest MOOC platforms in China). Specifically, the model adds part-of-speech information at the embedding layer of BERT. To evaluate the model, we construct large data sets derived from iCourse and compare BERT-POS with several classical NLP models on them. The experimental results show that BERT-POS achieves higher sentiment analysis accuracy than the baselines.
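The abstract does not state how the part-of-speech information is combined with the BERT embeddings. As a minimal sketch, assuming the part-of-speech tag embedding is simply summed with the token, position, and segment embeddings (the way BERT already sums those three), the idea could look like the following. The table sizes, the function names `make_table` and `embed_with_pos`, and the tag ids are all hypothetical and chosen only for illustration; this is not the authors' implementation.

```python
import random

HIDDEN = 8  # toy hidden size for illustration; BERT-base uses 768


def make_table(num_rows, dim, rng):
    """Create a randomly initialised embedding table (a list of row vectors)."""
    return [[rng.uniform(-0.1, 0.1) for _ in range(dim)] for _ in range(num_rows)]


def embed_with_pos(token_ids, pos_tag_ids, tables):
    """Sum token, position, segment, and POS-tag embeddings for each token.

    Assumption: POS information is injected by element-wise addition.
    """
    if len(token_ids) != len(pos_tag_ids):
        raise ValueError("each token needs exactly one POS tag id")
    embedded = []
    for index, (token_id, tag_id) in enumerate(zip(token_ids, pos_tag_ids)):
        rows = (
            tables["token"][token_id],
            tables["position"][index],
            tables["segment"][0],      # single-sentence input, so segment 0
            tables["pos_tag"][tag_id],  # the extra part-of-speech signal
        )
        # Element-wise sum across the four embedding rows.
        embedded.append([sum(values) for values in zip(*rows)])
    return embedded


rng = random.Random(0)
tables = {
    "token": make_table(100, HIDDEN, rng),    # hypothetical vocabulary of 100
    "position": make_table(16, HIDDEN, rng),  # hypothetical max length of 16
    "segment": make_table(2, HIDDEN, rng),
    "pos_tag": make_table(5, HIDDEN, rng),    # hypothetical tag set of 5
}

token_ids = [12, 47, 3]   # made-up token ids for a three-token review
pos_tag_ids = [1, 2, 0]   # made-up tag ids, one per token
embedded = embed_with_pos(token_ids, pos_tag_ids, tables)
```

In this sketch the same token receives a different input vector when its tag changes, which is the property that lets the downstream encoder layers make use of the part-of-speech signal.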

Acknowledgements

This work was supported by the National Natural Science Foundation of China (61877029).

Copyright information

© 2022 Springer Nature Switzerland AG

About this paper

Cite this paper

Liu, W. et al. (2022). BERT-POS: Sentiment Analysis of MOOC Reviews Based on BERT with Part-of-Speech Information. In: Rodrigo, M.M., Matsuda, N., Cristea, A.I., Dimitrova, V. (eds) Artificial Intelligence in Education. Posters and Late Breaking Results, Workshops and Tutorials, Industry and Innovation Tracks, Practitioners’ and Doctoral Consortium. AIED 2022. Lecture Notes in Computer Science, vol 13356. Springer, Cham. https://doi.org/10.1007/978-3-031-11647-6_72

DOI: https://doi.org/10.1007/978-3-031-11647-6_72

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-11646-9

Online ISBN: 978-3-031-11647-6

eBook Packages: Computer Science, Computer Science (R0)