Abstract

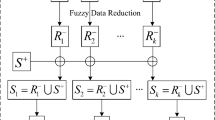

Using fuzzy systems to handle high-dimensional data remains challenging, even though our recently proposed adaptive Takagi-Sugeno-Kang (AdaTSK) model, equipped with Ada-softmin, can be effectively employed to solve high-dimensional classification problems. On high-dimensional data, AdaTSK is prone to overfitting, which degrades its performance. Ensemble learning, however, is an effective technique for improving the final performance of base learners and avoiding overfitting. Therefore, in this paper, we propose an ensemble fuzzy classifier, named Bagging-AdaTSK, that integrates an improved bagging strategy with the AdaTSK model to handle high-dimensional classification problems. First, an improved bagging strategy is introduced, in which the original dataset is split into multiple subsets, each containing fewer samples and features. These subsets overlap with each other and together cover all the samples and features to guarantee satisfactory accuracy. Then, an AdaTSK model is trained as a base learner on each subset. Finally, the trained AdaTSK models are aggregated to perform the classification task, yielding the so-called Bagging-AdaTSK. Experimental results on high-dimensional datasets demonstrate that Bagging-AdaTSK achieves competitive performance.
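The subset construction and aggregation described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the sampling fractions `sample_frac` and `feature_frac`, the coverage-repair step (appending any uncovered sample or feature to a randomly chosen subset), majority-vote aggregation, and the `NearestCentroid` base learner (a hypothetical stand-in, since AdaTSK and its Ada-softmin operator are not reproduced here) are all assumptions.

```python
import random
from collections import Counter


def make_overlapping_subsets(n_samples, n_features, n_subsets,
                             sample_frac=0.6, feature_frac=0.3, seed=0):
    """Draw overlapping (sample, feature) index subsets that jointly cover everything.

    Assumed scheme: each subset samples a fixed fraction of rows and columns
    without replacement; any row or column left uncovered is then appended
    to a randomly chosen subset.
    """
    rng = random.Random(seed)
    k_s = max(1, int(sample_frac * n_samples))
    k_f = max(1, int(feature_frac * n_features))
    subsets = [(set(rng.sample(range(n_samples), k_s)),
                set(rng.sample(range(n_features), k_f)))
               for _ in range(n_subsets)]
    # Coverage repair: every sample and every feature must appear in some subset.
    covered_s = set().union(*(rows for rows, _ in subsets))
    covered_f = set().union(*(cols for _, cols in subsets))
    for i in sorted(set(range(n_samples)) - covered_s):
        rng.choice(subsets)[0].add(i)
    for j in sorted(set(range(n_features)) - covered_f):
        rng.choice(subsets)[1].add(j)
    return [(sorted(rows), sorted(cols)) for rows, cols in subsets]


class NearestCentroid:
    """Hypothetical stand-in base learner; AdaTSK itself is not reproduced here."""

    def fit(self, X, y):
        counts = Counter(y)
        sums = {}
        for x, label in zip(X, y):
            acc = sums.setdefault(label, [0.0] * len(x))
            for k, v in enumerate(x):
                acc[k] += v
        self.centroids = {c: [v / counts[c] for v in s] for c, s in sums.items()}
        return self

    def predict_one(self, x):
        # Label of the centroid with the smallest squared Euclidean distance.
        return min(self.centroids,
                   key=lambda c: sum((a - b) ** 2 for a, b in zip(x, self.centroids[c])))


class BaggingEnsemble:
    """Trains one base learner per subset and aggregates by majority vote (assumed)."""

    def __init__(self, n_subsets=5, sample_frac=0.6, feature_frac=0.3, seed=0):
        self.n_subsets = n_subsets
        self.sample_frac = sample_frac
        self.feature_frac = feature_frac
        self.seed = seed

    def fit(self, X, y):
        self.members = []
        for rows, cols in make_overlapping_subsets(
                len(X), len(X[0]), self.n_subsets,
                self.sample_frac, self.feature_frac, self.seed):
            X_sub = [[X[i][j] for j in cols] for i in rows]
            y_sub = [y[i] for i in rows]
            self.members.append((cols, NearestCentroid().fit(X_sub, y_sub)))
        return self

    def predict(self, X):
        preds = []
        for x in X:
            # Each member sees only its own feature subset of x.
            votes = Counter(m.predict_one([x[j] for j in cols]) for cols, m in self.members)
            preds.append(votes.most_common(1)[0][0])  # ties: first label counted wins
        return preds


if __name__ == "__main__":
    rng = random.Random(1)
    X = ([[rng.gauss(0.0, 1.0) for _ in range(10)] for _ in range(20)]
         + [[rng.gauss(5.0, 1.0) for _ in range(10)] for _ in range(20)])
    y = [0] * 20 + [1] * 20
    model = BaggingEnsemble(n_subsets=5).fit(X, y)
    acc = sum(p == t for p, t in zip(model.predict(X), y)) / len(y)
    print(f"training accuracy: {acc:.2f}")
```

Because each member is trained on only a fraction of the rows and columns, the members differ from one another, which is the diversity bagging relies on; the coverage-repair step is one simple way to satisfy the stated requirement that the subsets jointly cover all samples and features.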

Change history

21 November 2022

In an older version of this chapter, some table citations were presented incorrectly. This has been corrected.

Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Xue, G., Zhang, B., Gong, X., Wang, J. (2022). Bagging-AdaTSK: An Ensemble Fuzzy Classifier for High-Dimensional Data. In: Huang, DS., Jo, KH., Jing, J., Premaratne, P., Bevilacqua, V., Hussain, A. (eds) Intelligent Computing Methodologies. ICIC 2022. Lecture Notes in Computer Science, vol 13395. Springer, Cham. https://doi.org/10.1007/978-3-031-13832-4_3

DOI: https://doi.org/10.1007/978-3-031-13832-4_3

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-13831-7

Online ISBN: 978-3-031-13832-4

eBook Packages: Computer Science, Computer Science (R0)