Abstract

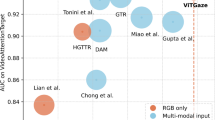

Human visual focus is a vital cue for uncovering a subject's underlying cognitive processes. To predict where a subject is looking, existing deep learning methods combine head orientation, head location, and scene content to estimate the visual focal point. However, these methods face three main problems: the visual focal point prediction depends solely on learned spatial distribution heatmaps; the reasoning process in post-processing is not learnable; and the learning of the gaze salience representation could exploit more prior knowledge. We therefore propose a coarse-to-fine human visual focus estimation method that addresses these problems and improves estimation performance. First, we introduce a coarse-to-fine regression module in which the coarse branch estimates the subject's possible attention area, while the fine branch directly outputs the position of the estimated visual focal point. This avoids sequential reasoning and makes visual focal point estimation fully learnable. Furthermore, a human visual field prior guides the learning of gaze salience so that it better encodes target-related representations. Extensive experimental results demonstrate that our method outperforms existing state-of-the-art methods on self-collected ASD-attention datasets.
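The abstract does not specify the form of the human visual field prior. As a minimal sketch, assuming a cone-shaped field centred on the gaze direction, the prior can be built as a per-pixel weight map and multiplied into a salience map so that salient regions outside the subject's field of view are suppressed. The helper names `visual_field_prior`, `apply_prior`, and `argmax_point`, the 60° default half-angle, and the linear-in-cosine falloff are all hypothetical choices for illustration, not the authors' implementation.

```python
import math


def visual_field_prior(height, width, head_xy, gaze_dir, half_angle_deg=60.0):
    """Hypothetical cone-shaped visual-field prior (an assumption, not the paper's form).

    Returns a height x width grid of weights in [0, 1]: 1 along the gaze ray,
    decreasing linearly in cos(angle) to 0 at the cone edge, and 0 outside it.
    Coordinates are (x, y) pixel indices with y pointing down.
    """
    if not 0.0 < half_angle_deg < 180.0:
        raise ValueError("half_angle_deg must be in (0, 180)")
    gx, gy = gaze_dir
    norm = math.hypot(gx, gy)
    if norm == 0.0:
        raise ValueError("gaze_dir must be non-zero")
    gx, gy = gx / norm, gy / norm
    cos_edge = math.cos(math.radians(half_angle_deg))
    hx, hy = head_xy

    prior = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            dx, dy = x - hx, y - hy
            dist = math.hypot(dx, dy)
            if dist == 0.0:
                continue  # direction is undefined at the head pixel; keep weight 0
            cos_theta = (dx * gx + dy * gy) / dist
            if cos_theta >= cos_edge:
                # Rescale so the cone edge maps to 0 and the gaze ray maps to 1.
                prior[y][x] = min(1.0, (cos_theta - cos_edge) / (1.0 - cos_edge))
    return prior


def apply_prior(salience, prior):
    """Element-wise product: suppress salient regions outside the visual field."""
    return [[s * p for s, p in zip(s_row, p_row)] for s_row, p_row in zip(salience, prior)]


def argmax_point(heatmap):
    """Conventional heatmap post-processing: return the (x, y) of the first peak cell."""
    cells = ((x, y) for y, row in enumerate(heatmap) for x, _ in enumerate(row))
    return max(cells, key=lambda p: heatmap[p[1]][p[0]])


if __name__ == "__main__":
    prior = visual_field_prior(5, 5, head_xy=(0, 2), gaze_dir=(1, 0), half_angle_deg=45.0)
    salience = [[0.0] * 5 for _ in range(5)]
    salience[0][0] = 1.0  # salient, but outside the subject's field of view
    salience[2][4] = 0.8  # less salient, but straight along the gaze ray
    print(argmax_point(salience))                      # (0, 0)
    print(argmax_point(apply_prior(salience, prior)))  # (4, 2)
```

Here `argmax_point` stands in for the kind of fixed, non-learnable heatmap post-processing the abstract criticizes. According to the abstract, the proposed fine branch instead regresses the focal point position directly, so a step like this would not be needed at inference time.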




Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Wang, X. et al. (2022). A Coarse-to-Fine Human Visual Focus Estimation for ASD Toddlers in Early Screening. In: Liu, H., et al. Intelligent Robotics and Applications. ICIRA 2022. Lecture Notes in Computer Science, vol 13455. Springer, Cham. https://doi.org/10.1007/978-3-031-13844-7_43


DOI: https://doi.org/10.1007/978-3-031-13844-7_43


Publisher Name: Springer, Cham

Print ISBN: 978-3-031-13843-0

Online ISBN: 978-3-031-13844-7

eBook Packages: Computer Science, Computer Science (R0)