Abstract

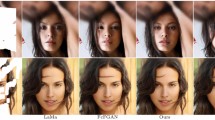

For image inpainting, both fine details and semantics are essential. Many deep-learning-based methods produce results that lack structural and textural features or are even semantically implausible. In addition, many algorithms inpaint in multiple stages, which complicates the overall pipeline. To address these issues, and building on previous work, we propose an end-to-end inpainting model based on a multi-scale residual convolutional network in which multi-scale gated convolution (MSGC) replaces the conventional convolutional layers of the encoder. MSGC combines multi-scale information and enlarges the receptive field, allowing the network to capture long-range dependencies. We also introduce a scale attention block (SAB) that captures important features from the previous scale. To recover richer detail, the model is trained under a combination of loss functions. Experiments on CelebA-HQ and Paris StreetView show that the proposed method outperforms other classic image inpainting algorithms.
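The abstract does not spell out how an MSGC layer is built, so the following is only a minimal sketch of the general idea under stated assumptions. It uses the gated convolution of Yu et al. (DeepFill v2), output = φ(W_f * x) ⊙ σ(W_g * x), with φ taken as ReLU here, runs it in parallel branches with dilation rates 1, 2 and 4, averages the branches, and adds a residual connection. The dilation rates, the averaging fusion, the ReLU choice, and the function names (`dilated_conv2d`, `gated_conv2d`, `msgc_block`, `receptive_field`) are hypothetical illustrations, not the authors' implementation. It works on single-channel grids in plain Python so the arithmetic stays easy to follow.

```python
import math
from typing import List, Sequence

Grid = List[List[float]]


def dilated_conv2d(x: Grid, k: Grid, dilation: int = 1) -> Grid:
    """Single-channel 2D cross-correlation with dilation and zero 'same' padding."""
    h, w = len(x), len(x[0])
    kh, kw = len(k), len(k[0])
    ch, cw = kh // 2, kw // 2
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for a in range(kh):
                for b in range(kw):
                    # Neighboring kernel taps are `dilation` pixels apart.
                    ii = i + (a - ch) * dilation
                    jj = j + (b - cw) * dilation
                    if 0 <= ii < h and 0 <= jj < w:  # zero padding outside the grid
                        acc += k[a][b] * x[ii][jj]
            out[i][j] = acc
    return out


def sigmoid(v: float) -> float:
    """Numerically stable logistic function."""
    if v >= 0:
        return 1.0 / (1.0 + math.exp(-v))
    e = math.exp(v)
    return e / (1.0 + e)


def gated_conv2d(x: Grid, k_feat: Grid, k_gate: Grid, dilation: int = 1) -> Grid:
    """Gated convolution: relu(W_f * x) multiplied elementwise by sigmoid(W_g * x)."""
    feat = dilated_conv2d(x, k_feat, dilation)
    gate = dilated_conv2d(x, k_gate, dilation)
    return [[max(f, 0.0) * sigmoid(g) for f, g in zip(f_row, g_row)]
            for f_row, g_row in zip(feat, gate)]


def msgc_block(x: Grid, k_feat: Grid, k_gate: Grid,
               dilations: Sequence[int] = (1, 2, 4)) -> Grid:
    """Hypothetical multi-scale gated block: one gated branch per dilation rate,
    branches averaged, then added to the input as a residual connection."""
    branches = [gated_conv2d(x, k_feat, k_gate, d) for d in dilations]
    h, w = len(x), len(x[0])
    return [[x[i][j] + sum(br[i][j] for br in branches) / len(branches)
             for j in range(w)]
            for i in range(h)]


def receptive_field(kernel_size: int, dilation: int) -> int:
    """Side length of the window seen by one dilated kernel: (k - 1) * d + 1."""
    return (kernel_size - 1) * dilation + 1


if __name__ == "__main__":
    # A single bright pixel at the center of a 9x9 grid and an all-ones 3x3 kernel.
    impulse = [[0.0] * 9 for _ in range(9)]
    impulse[4][4] = 1.0
    ones3 = [[1.0] * 3 for _ in range(3)]
    for d in (1, 2, 4):
        resp = dilated_conv2d(impulse, ones3, d)
        print(d, receptive_field(3, d), sum(map(sum, resp)))
    # prints: "1 3 9.0", then "2 5 9.0", then "4 9 9.0"
```

Every branch uses a 3 × 3 kernel, yet with dilation 4 its taps span a 9 × 9 window, which is how dilated branches enlarge the receptive field without adding parameters. Sharing one kernel pair across branches is a simplification for illustration; a trained layer would presumably learn separate weights per branch and operate on multi-channel feature maps.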

Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Jiang, H., Ma, X., Yang, D., Zhao, J., Shen, Y. (2022). Image Inpainting Based Multi-scale Gated Convolution and Attention. In: Pimenidis, E., Angelov, P., Jayne, C., Papaleonidas, A., Aydin, M. (eds) Artificial Neural Networks and Machine Learning – ICANN 2022. ICANN 2022. Lecture Notes in Computer Science, vol 13530. Springer, Cham. https://doi.org/10.1007/978-3-031-15931-2_34

DOI: https://doi.org/10.1007/978-3-031-15931-2_34

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-15930-5

Online ISBN: 978-3-031-15931-2

eBook Packages: Computer Science, Computer Science (R0)