Abstract

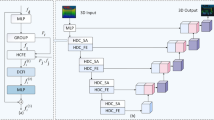

Transformers play an increasingly important role in many computer vision tasks and have achieved remarkable results in point cloud analysis. Because existing methods focus mainly on point-wise Transformers, this paper proposes an adaptive channel-wise Transformer. Specifically, we design a channel-encoding Transformer, the Transformer Channel Encoder (TCE), to encode the coordinate channels. TCE encodes the coordinate channels by capturing the latent relationship between coordinates and features, and the encoded channels yield features with stronger representational ability. Rather than simply assigning an attention weight to each channel, our method encodes the channels adaptively. Moreover, our method can be extended to other frameworks to improve their performance. Our network also adopts a neighborhood search based on feature-similarity semantic receptive fields to further improve performance. Extensive experiments show that our method outperforms state-of-the-art point cloud classification and segmentation methods on three benchmark datasets.
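The abstract does not specify the internals of TCE, so the following is only a rough NumPy sketch of the distinction it draws. An SE-style baseline rescales each channel by a single scalar weight. A channel-wise self-attention instead treats channels as tokens, so each output channel becomes an adaptive mixture of input channels. The `encode_coordinate_channels` step is a hypothetical assumption and not the authors' design: it stacks the xyz channels with the feature channels so that attention can relate coordinates to features. `feature_knn` illustrates neighborhood search by feature similarity rather than by spatial distance. All function names, weight shapes, and the residual connection are illustrative choices.

```python
import numpy as np

def softmax(x, axis=-1):
    # Numerically stable softmax along the given axis.
    z = x - x.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def se_channel_weights(x, w1, w2):
    """SE-style baseline: one scalar weight per channel.

    x: (N, C) per-point features; w1: (C, C // r); w2: (C // r, C).
    """
    squeeze = x.mean(axis=0)                      # (C,) global average over points
    hidden = np.maximum(squeeze @ w1, 0.0)        # ReLU bottleneck
    scale = 1.0 / (1.0 + np.exp(-(hidden @ w2)))  # sigmoid gate, shape (C,)
    return x * scale                              # each channel is only rescaled

def channel_self_attention(x, wq, wk, wv):
    """Channel-wise attention (hypothetical): channels attend to channels.

    x: (N, D) with one column per channel; wq, wk, wv: (D, D).
    Returns (N, D): each output channel is a learned mixture of input channels.
    """
    n = x.shape[0]
    q, k, v = x @ wq, x @ wk, x @ wv               # (N, D) each
    attn = softmax(q.T @ k / np.sqrt(n), axis=-1)  # (D, D) channel-to-channel affinity
    return v @ attn.T                              # out[:, i] = sum_j attn[i, j] * v[:, j]

def encode_coordinate_channels(coords, feats, wq, wk, wv):
    """Hypothetical TCE-like step: stack xyz and feature channels, let channel
    attention relate them, and return re-encoded xyz channels of shape (N, 3)."""
    x = np.concatenate([coords, feats], axis=1)    # (N, 3 + C)
    mixed = channel_self_attention(x, wq, wk, wv)
    return coords + mixed[:, :3]                   # residual on the coordinate channels

def feature_knn(feats, k):
    """Neighborhood search in feature space instead of xyz space.

    Returns (N, k) indices of the k most similar points (the point itself included).
    """
    sq = (feats ** 2).sum(axis=1)
    dist = sq[:, None] - 2.0 * feats @ feats.T + sq[None, :]  # squared Euclidean distances
    return np.argsort(dist, axis=1)[:, :k]

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n_points, n_feat = 128, 16
    coords = rng.standard_normal((n_points, 3))
    feats = rng.standard_normal((n_points, n_feat))
    d = 3 + n_feat
    wq, wk, wv = (rng.standard_normal((d, d)) * 0.1 for _ in range(3))
    print(encode_coordinate_channels(coords, feats, wq, wk, wv).shape)  # (128, 3)
    print(feature_knn(feats, k=8).shape)                                # (128, 8)
```

In the SE baseline, the ratio `output / input` is constant down each column. In the attention variant, every output column depends on all input columns. This is one plausible reading of "encoding" channels as opposed to merely weighting them.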

Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Xu, G., Cao, H., Zhang, Y., Ma, Y., Wan, J., Xu, K. (2022). Adaptive Channel Encoding Transformer for Point Cloud Analysis. In: Pimenidis, E., Angelov, P., Jayne, C., Papaleonidas, A., Aydin, M. (eds) Artificial Neural Networks and Machine Learning – ICANN 2022. ICANN 2022. Lecture Notes in Computer Science, vol 13531. Springer, Cham. https://doi.org/10.1007/978-3-031-15934-3_1

DOI: https://doi.org/10.1007/978-3-031-15934-3_1

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-15933-6

Online ISBN: 978-3-031-15934-3

eBook Packages: Computer Science, Computer Science (R0)