Abstract

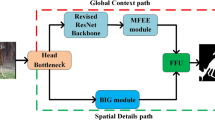

Learning-based models have demonstrated a superior ability to extract and aggregate saliency features. However, we observe that most off-the-shelf methods focus mainly on calibrating decoder features while ignoring the recalibration of vital encoder features. Moreover, the fusion of encoder and decoder features and the transfer between boundary features and saliency features deserve further study. To address these issues, we propose a feature recalibration network (FRCNet), which consists of a consistency recalibration module (CRC) and a multi-source feature recalibration module (MSFRC). Specifically, intersection and union mechanisms in CRC are embedded after the decoder unit to recalibrate the consistency of encoder and decoder features. With the aid of these specially designed mechanisms, CRC can suppress useless external information and enhance useful internal saliency information. MSFRC is designed to aggregate multi-source features and reduce the parameter imbalance between saliency features and boundary features. Compared with previous methods, more layers are applied to generate boundary features, which sufficiently leverages the complementarity between edges and saliency. In addition, pixels around the boundary are difficult to predict because of the unbalanced distribution of edges. Consequently, we propose an edge recalibration loss (ERC) to further recalibrate the equivocal boundary features by paying more attention to salient edges. We also explore a compact network (cFRCNet) that improves performance without extra parameters. Experimental results on five widely used datasets show that FRCNet achieves consistently superior performance under various evaluation metrics. Furthermore, FRCNet runs at around 30 fps on a single GPU.
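The abstract does not give the formula of the ERC loss, so the following is only a minimal sketch of the general idea of "paying more attention to salient edges": a binary cross-entropy in which pixels on the ground-truth boundary receive a larger weight. The function names `edge_mask` and `edge_weighted_bce`, the 4-neighbourhood boundary rule, and the `edge_weight` parameter are all assumptions made for illustration and are not taken from the paper.

```python
import math

def edge_mask(gt):
    """Mark pixels that have a 4-neighbour with a different label.

    This is a crude stand-in for a boundary map (an assumption, not the
    paper's edge definition). `gt` is a 2D list of 0/1 labels.
    """
    h, w = len(gt), len(gt[0])
    mask = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            for dy, dx in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                ny, nx = y + dy, x + dx
                if 0 <= ny < h and 0 <= nx < w and gt[ny][nx] != gt[y][x]:
                    mask[y][x] = 1
                    break
    return mask

def edge_weighted_bce(pred, gt, edge_weight=4.0, eps=1e-7):
    """Weighted mean of per-pixel binary cross-entropy.

    Boundary pixels get weight 1 + edge_weight, all others weight 1
    (hypothetical weighting scheme). `pred` holds probabilities in [0, 1].
    """
    mask = edge_mask(gt)
    total, weight_sum = 0.0, 0.0
    for y, row in enumerate(gt):
        for x, target in enumerate(row):
            # Clamp to avoid log(0).
            p = min(max(pred[y][x], eps), 1.0 - eps)
            bce = -(target * math.log(p) + (1 - target) * math.log(1.0 - p))
            weight = 1.0 + edge_weight * mask[y][x]
            total += weight * bce
            weight_sum += weight
    return total / weight_sum

# Toy example: a 2x2 salient object centred in a 4x4 image.
gt = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]
uniform_pred = [[0.5] * 4 for _ in range(4)]
print(edge_weighted_bce(uniform_pred, gt))
```

Under this weighting, the same prediction error costs more on a boundary pixel than on a pixel far from the boundary, which is one simple way to counter the scarcity of edge pixels relative to the rest of the image.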

Acknowledgements

This work was supported in part by the National Natural Science Foundation of China under Grant 62176062.

Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Tan, Z., Gu, X. (2022). Feature Recalibration Network for Salient Object Detection. In: Pimenidis, E., Angelov, P., Jayne, C., Papaleonidas, A., Aydin, M. (eds) Artificial Neural Networks and Machine Learning – ICANN 2022. ICANN 2022. Lecture Notes in Computer Science, vol 13532. Springer, Cham. https://doi.org/10.1007/978-3-031-15937-4_6

DOI: https://doi.org/10.1007/978-3-031-15937-4_6

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-15936-7

Online ISBN: 978-3-031-15937-4

eBook Packages: Computer Science, Computer Science (R0)