Abstract

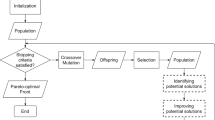

Pruning-based neural architecture search (NAS) methods are effective approaches for finding network architectures that achieve high performance with low complexity. However, current methods yield only a single final architecture instead of an approximation of the Pareto set, which is typically the desired result when solving multi-objective problems. Furthermore, evaluating network performance in NAS involves a computationally expensive training process, and the search cost therefore increases considerably because numerous architectures are evaluated during a NAS run. Using computational resources efficiently is thus an essential concern. Recent studies have attempted to address this issue by replacing the network accuracy metric in NAS optimization objectives with so-called training-free performance metrics, which can be calculated without any training epochs. In this paper, we propose a training-free multi-objective pruning-based neural architecture search (TF-MOPNAS) framework that uses the Synaptic Flow metric to produce competitive trade-off fronts for multi-objective NAS at a trivial cost. We test our proposed method on multi-objective NAS problems created on a wide range of well-known NAS benchmarks, namely NAS-Bench-101, NAS-Bench-1Shot1, and NAS-Bench-201. Experimental results indicate that our method can find trade-off fronts of quality equivalent to those found by state-of-the-art NAS methods while requiring far fewer computational resources. The code is available at: https://github.com/ELO-Lab/TF-MOPNAS.
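To make the training-free idea concrete, the following is a minimal sketch of the Synaptic Flow (SynFlow) score of Tanaka et al. on a toy bias-free fully connected network, written in pure Python. It is not the TF-MOPNAS implementation (which scores cell-based networks from the benchmarks); the helper names `synflow_scores` and `synflow_metric` are hypothetical. Following the SynFlow recipe, every weight is replaced by its absolute value, an all-ones input is propagated forward, R is the sum of the outputs, and each parameter's saliency is |θ| · ∂R/∂|θ|. No data and no training epoch are needed.

```python
from typing import List

Matrix = List[List[float]]  # row-major weight matrix, shape (out x in)


def matvec(w: Matrix, v: List[float]) -> List[float]:
    """Return w @ v (forward propagation through one layer)."""
    return [sum(wij * vj for wij, vj in zip(row, v)) for row in w]


def vecmat(u: List[float], w: Matrix) -> List[float]:
    """Return u^T @ w (backward propagation of dR/d(output) through one layer)."""
    return [sum(u[i] * w[i][j] for i in range(len(w))) for j in range(len(w[0]))]


def synflow_scores(weights: List[Matrix]) -> List[Matrix]:
    """Per-parameter SynFlow saliency |W| * dR/d|W| for a toy bias-free MLP (assumption).

    With |W| and an all-ones input, every activation is non-negative, so a ReLU
    would act as the identity; the network is therefore treated as linear here.
    """
    abs_w = [[[abs(x) for x in row] for row in w] for w in weights]

    # Forward pass: acts[l] is the input vector of layer l.
    acts = [[1.0] * len(abs_w[0][0])]
    for w in abs_w:
        acts.append(matvec(w, acts[-1]))

    # R = sum(network output), so dR/d(output) is a vector of ones.
    grad = [1.0] * len(acts[-1])
    scores: List[Matrix] = [[] for _ in abs_w]
    for l in range(len(abs_w) - 1, -1, -1):
        w, x = abs_w[l], acts[l]
        # dR/dW[i][j] = grad[i] * x[j]; saliency multiplies by |W[i][j]|.
        scores[l] = [[w[i][j] * grad[i] * x[j] for j in range(len(x))]
                     for i in range(len(w))]
        grad = vecmat(grad, w)  # gradient w.r.t. this layer's input
    return scores


def synflow_metric(weights: List[Matrix]) -> float:
    """Network-level training-free score: sum of all per-parameter saliencies."""
    return sum(sum(sum(row) for row in layer) for layer in synflow_scores(weights))


if __name__ == "__main__":
    toy = [[[1.0, -2.0], [0.5, 1.0]], [[1.0, 1.0]]]  # hypothetical 2-2-1 network
    print(synflow_metric(toy))  # 9.0
```

In this linear setting each layer's saliencies sum to the same value R (4.5 for the toy network), illustrating the layer-wise conservation property that motivates SynFlow; the network-level score is then the sum over layers.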


Notes

1. An architecture \(\boldsymbol{x}\) is said to Pareto dominate another architecture \(\boldsymbol{y}\) if \(\boldsymbol{x}\) is not worse than \(\boldsymbol{y}\) in any objective and \(\boldsymbol{x}\) is strictly better than \(\boldsymbol{y}\) in at least one objective [5].
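The dominance definition in this note translates directly into code. The sketch below assumes every objective is minimized (a maximized objective, such as a SynFlow score, would be negated first) and uses a simple O(n²) filter to extract the non-dominated set, i.e., the approximation front a multi-objective search reports. The function names and the candidate values are hypothetical illustrations, not data from the paper.

```python
from typing import List, Sequence


def dominates(x: Sequence[float], y: Sequence[float]) -> bool:
    """True if x Pareto-dominates y, assuming all objectives are minimized."""
    not_worse = all(a <= b for a, b in zip(x, y))       # not worse in any objective
    strictly_better = any(a < b for a, b in zip(x, y))  # better in at least one
    return not_worse and strictly_better


def non_dominated(points: List[Sequence[float]]) -> List[int]:
    """Indices of points that no other point dominates (naive O(n^2) filter)."""
    return [i for i, p in enumerate(points)
            if not any(dominates(q, p) for j, q in enumerate(points) if j != i)]


if __name__ == "__main__":
    # Hypothetical (error rate, MFLOPs) pairs for four candidate architectures.
    candidates = [(0.10, 50.0), (0.08, 80.0), (0.12, 60.0), (0.08, 90.0)]
    print(non_dominated(candidates))  # [0, 1]
```

Here (0.12, 60.0) is dominated by (0.10, 50.0), and (0.08, 90.0) is dominated by (0.08, 80.0), which is equal in error but strictly cheaper; two identical points do not dominate each other.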

References

[1] Abdelfattah, M.S., Mehrotra, A., Dudziak, L., Lane, N.D.: Zero-cost proxies for lightweight NAS. In: ICLR 2021 (2021)

[2] Bergstra, J., Bengio, Y.: Random search for hyper-parameter optimization. J. Mach. Learn. Res. 13, 281–305 (2012)

[3] Bosman, P.A.N., Thierens, D.: The balance between proximity and diversity in multi-objective evolutionary algorithms. IEEE Trans. Evol. Comput. 7(2), 174–188 (2003)

[4] Chen, W., Gong, X., Wang, Z.: Neural architecture search on ImageNet in four GPU hours: a theoretically inspired perspective. In: ICLR 2021 (2021)

[5] Deb, K.: Multi-objective optimization using evolutionary algorithms. Wiley-Interscience series in systems and optimization, Wiley (2001)

[6] Deb, K., Agrawal, S., Pratap, A., Meyarivan, T.: A fast and elitist multi-objective genetic algorithm: NSGA-II. IEEE Trans. Evol. Comput. 6(2), 182–197 (2002)

[7] Dong, X., Yang, Y.: NAS-Bench-201: extending the scope of reproducible neural architecture search. In: ICLR 2020 (2020)

[8] Howard, A.G., et al.: MobileNets: efficient convolutional neural networks for mobile vision applications. CoRR abs/1704.04861 (2017)

[9] Li, G., Qian, G., Delgadillo, I.C., Müller, M., Thabet, A.K., Ghanem, B.: SGAS: sequential greedy architecture search. In: CVPR 2020, pp. 1617–1627 (2020)

[10] Liu, H., Simonyan, K., Yang, Y.: DARTS: differentiable architecture search. In: ICLR 2019 (2019)

[11] Lu, Z., et al.: NSGA-Net: neural architecture search using multi-objective genetic algorithm. In: GECCO 2019, pp. 419–427 (2019)

[12] Mellor, J., Turner, J., Storkey, A.J., Crowley, E.J.: Neural architecture search without training. In: ICML 2021, pp. 7588–7598 (2021)

[13] Pham, H., Guan, M.Y., Zoph, B., Le, Q.V., Dean, J.: Efficient neural architecture search via parameter sharing. In: ICML 2018, pp. 4092–4101 (2018)

[14] Phan, Q.M., Luong, N.H.: Efficiency enhancement of evolutionary neural architecture search via training-free initialization. In: NICS 2021, pp. 138–143 (2021)

[15] Real, E., Aggarwal, A., Huang, Y., Le, Q.V.: Regularized evolution for image classifier architecture search. In: AAAI 2019, pp. 4780–4789 (2019)

[16] Tanaka, H., Kunin, D., Yamins, D.L., Ganguli, S.: Pruning neural networks without any data by iteratively conserving synaptic flow. In: NeurIPS 2020 (2020)

[17] Wang, R., Cheng, M., Chen, X., Tang, X., Hsieh, C.: Rethinking architecture selection in differentiable NAS. In: ICLR 2021 (2021)

[18] Ying, C., Klein, A., Christiansen, E., Real, E., Murphy, K., Hutter, F.: NAS-Bench-101: towards reproducible neural architecture search. In: ICML 2019 (2019)

[19] Yu, K., Sciuto, C., Jaggi, M., Musat, C., Salzmann, M.: Evaluating the search phase of neural architecture search. In: ICLR 2020 (2020)

[20] Zela, A., Siems, J., Hutter, F.: NAS-Bench-1Shot1: benchmarking and dissecting one-shot neural architecture search. In: ICLR 2020 (2020)

[21] Zoph, B., Le, Q.V.: Neural architecture search with reinforcement learning. In: ICLR 2017 (2017)

Acknowledgements

This research was supported by The VNUHCM–University of Information Technology’s Scientific Research Support Fund.


Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Phan, Q.M., Luong, N.H. (2022). TF-MOPNAS: Training-free Multi-objective Pruning-Based Neural Architecture Search. In: Nguyen, N.T., Manolopoulos, Y., Chbeir, R., Kozierkiewicz, A., Trawiński, B. (eds) Computational Collective Intelligence. ICCCI 2022. Lecture Notes in Computer Science, vol 13501. Springer, Cham. https://doi.org/10.1007/978-3-031-16014-1_24


DOI: https://doi.org/10.1007/978-3-031-16014-1_24


Publisher Name: Springer, Cham

Print ISBN: 978-3-031-16013-4

Online ISBN: 978-3-031-16014-1

eBook Packages: Computer Science, Computer Science (R0)