Abstract

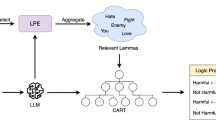

eXplainable AI (XAI) involves not only interpreting the rules generated by AI systems, but also evaluating and selecting, among the many rules automatically generated from large datasets, those that are most relevant and meaningful to domain experts. In this work, we propose a method for evaluating the similarity between rules, which identifies similar or very different rules by exploiting techniques developed for Natural Language Processing (NLP). We evaluate the similarity of if-then rules by interpreting them as sentences and generating a similarity matrix that enables domain experts to analyse the generated rules and thus discover new knowledge. Rule similarity can be applied to rule analysis and manipulation in different scenarios: the first deals with rule analysis and interpretation, while the second concerns pruning unnecessary rules within a single ruleset. Rule similarity also allows the automatic comparison and evaluation of rulesets. Two examples are provided to evaluate the effectiveness of the proposed method, one for rule analysis aimed at knowledge extraction and one for rule pruning.
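As a rough illustration of the idea, the sketch below treats each if-then rule as a sentence, builds a bag-of-words vector for it, and fills a pairwise similarity matrix. The abstract does not specify the tokenisation, weighting, or similarity measure, so whitespace tokenisation, raw term counts, and cosine similarity are assumptions made here, and the three rules and the helper names (`bag_of_words`, `cosine`, `similarity_matrix`) are hypothetical.

```python
import math
from collections import Counter


def bag_of_words(rule: str) -> Counter:
    """Count tokens in one rule (whitespace tokenisation is an assumption)."""
    return Counter(rule.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # an empty rule is treated as similar to nothing
    return dot / (norm_a * norm_b)


def similarity_matrix(rules: list[str]) -> list[list[float]]:
    """Pairwise similarity of all rules, as a square symmetric matrix."""
    bags = [bag_of_words(r) for r in rules]
    return [[cosine(a, b) for b in bags] for a in bags]


# Hypothetical rules, for illustration only.
rules = [
    "if speed > 30 and distance <= 10 then collision",
    "if speed > 30 and distance <= 12 then collision",
    "if temperature > 38 then fever",
]

matrix = similarity_matrix(rules)
for row in matrix:
    print(" ".join(f"{value:.2f}" for value in row))
```

In this toy setting the first two rules differ only in one threshold, so they score much higher with each other than with the third; a pruning step could flag such near-duplicates for expert review.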

Notes

- 1.

The term frequency-inverse document frequency weight function (tf-idf) is used in information retrieval to measure the importance of a term with respect to a document within a collection of documents.

- 2.
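For reference on note 1, one common textbook form of the tf-idf weight is given below. It is not necessarily the variant used in the chapter. Here $\mathrm{tf}(t, d)$ is the number of occurrences of term $t$ in document $d$, $D$ is the collection, and $N = |D|$; treating each rule as one document is an assumption made for illustration.

$$
\mathrm{tfidf}(t, d, D) = \mathrm{tf}(t, d) \cdot \log \frac{N}{\left|\{\, d' \in D : t \in d' \,\}\right|}
$$

Under this form, a term that appears in every document receives weight zero, while a term confined to a few documents is weighted more heavily.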

© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Narteni, S., Ferretti, M., Rampa, V., Mongelli, M. (2023). Bag-of-Words Similarity in eXplainable AI. In: Arai, K. (eds) Intelligent Systems and Applications. IntelliSys 2022. Lecture Notes in Networks and Systems, vol 543. Springer, Cham. https://doi.org/10.1007/978-3-031-16078-3_58

DOI: https://doi.org/10.1007/978-3-031-16078-3_58

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-16077-6

Online ISBN: 978-3-031-16078-3

eBook Packages: Intelligent Technologies and Robotics (R0)