Abstract

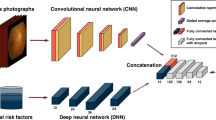

Recent studies have validated the association between cardiovascular disease (CVD) risk and retinal fundus images. Combining deep learning (DL) with portable fundus cameras could enable CVD risk estimation in a wide range of settings and help democratize healthcare. However, significant issues remain. A top-priority issue is the camera difference between the images in research databases and the samples encountered in the production environment: most high-quality retinography databases available for research are collected with high-end fundus cameras, and there is a significant domain discrepancy between cameras. To study this domain discrepancy, we first collect a Fundus Camera Paired (FCP) dataset containing paired fundus images of the same patients captured by a high-end Topcon retinal camera and a low-end Mediwork portable fundus camera. We then propose a cross-laterality feature alignment pre-training scheme and a self-attention camera adaptor module to improve model robustness. Cross-laterality feature alignment pre-training encourages the model to learn knowledge shared by the same patient's left and right fundus images, which improves model generalization. Meanwhile, the camera adaptor module learns a feature transformation from the target domain (the portable camera) to the source domain (the high-end camera). We conduct comprehensive experiments on both the UK Biobank database and our FCP dataset. The experimental results show that our proposed method improves both the accuracy of CVD risk regression and the consistency of results across the two cameras. The code is available at https://github.com/linzhlalala/CVD-risk-based-on-retinal-fundus-images
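
The abstract names the two components without specifying them. As a rough illustration only, the NumPy sketch below assumes (a) a SimSiam-style symmetric negative-cosine loss between left- and right-eye embeddings of the same patient for cross-laterality alignment, (b) a single-head residual self-attention block over feature tokens as the camera adaptor, and (c) a hypothetical paired MSE objective that pulls adapted Mediwork features toward the features of the paired Topcon image. All function and class names are illustrative and are not taken from the authors' repository.

```python
import numpy as np


def negative_cosine(p: np.ndarray, z: np.ndarray) -> float:
    """Mean negative cosine similarity between matching rows of p and z."""
    p = p / np.linalg.norm(p, axis=1, keepdims=True)
    z = z / np.linalg.norm(z, axis=1, keepdims=True)
    return float(-(p * z).sum(axis=1).mean())


def cross_laterality_loss(p_left, z_left, p_right, z_right) -> float:
    """Symmetric alignment loss between one patient's left- and right-eye features.

    p_* stand for predictor outputs and z_* for projector outputs (SimSiam-style
    assumption). In an autodiff framework, z_* would be wrapped in a stop-gradient.
    """
    return 0.5 * negative_cosine(p_left, z_right) + 0.5 * negative_cosine(p_right, z_left)


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


class SelfAttentionAdaptor:
    """Hypothetical single-head self-attention adaptor for target-camera tokens.

    Computes tokens + Attention(tokens) @ w_o; with w_o = 0 it is the identity map.
    """

    def __init__(self, dim: int, seed: int = 0):
        rng = np.random.default_rng(seed)
        scale = 1.0 / np.sqrt(dim)
        self.w_q = rng.normal(0.0, scale, (dim, dim))
        self.w_k = rng.normal(0.0, scale, (dim, dim))
        self.w_v = rng.normal(0.0, scale, (dim, dim))
        self.w_o = np.zeros((dim, dim))  # zero-init: adaptor starts as identity

    def __call__(self, tokens: np.ndarray) -> np.ndarray:
        # tokens: (batch, n_tokens, dim)
        q, k, v = tokens @ self.w_q, tokens @ self.w_k, tokens @ self.w_v
        attn = softmax(q @ k.transpose(0, 2, 1) / np.sqrt(tokens.shape[-1]))
        return tokens + (attn @ v) @ self.w_o


def paired_feature_mse(adapted_target: np.ndarray, source: np.ndarray) -> float:
    """Hypothetical objective: match adapted portable-camera features to the
    features of the paired high-end-camera image of the same eye."""
    return float(np.mean((adapted_target - source) ** 2))


# Usage with random stand-in features (no real data involved).
rng = np.random.default_rng(42)
left = rng.normal(size=(4, 128))
right = left + 0.1 * rng.normal(size=(4, 128))  # right eye: similar but not identical
print(cross_laterality_loss(left, left, right, right))  # near -1 for similar pairs

adaptor = SelfAttentionAdaptor(dim=64)
mediwork_tokens = rng.normal(size=(2, 197, 64))
topcon_tokens = rng.normal(size=(2, 197, 64))
print(paired_feature_mse(adaptor(mediwork_tokens), topcon_tokens))
```

Zero-initializing the output projection makes the adaptor start as the identity map, so a model pre-trained on source-camera images is unchanged before adaptation begins; this is a common design choice, not necessarily the one the authors made.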

Notes

1. We do not provide CVD risk ground truth for the FCP dataset due to the absence of patient information.
2. As our uniform self-attention camera adaptor.
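
Because the FCP dataset has no CVD risk ground truth, agreement between the two cameras has to be judged without labels. One label-free way to quantify it, sketched here as an assumption rather than the paper's stated metric, is to compare the risk predicted from each patient's paired Topcon and Mediwork images, for example via the mean absolute difference and the Pearson correlation. The function name and the example scores are hypothetical.

```python
import numpy as np


def cross_camera_consistency(pred_topcon, pred_mediwork):
    """Label-free agreement between CVD risk predictions on paired images.

    Element i of each input must come from the same patient (and eye).
    Returns (mean absolute difference, Pearson correlation).
    """
    a = np.asarray(pred_topcon, dtype=float)
    b = np.asarray(pred_mediwork, dtype=float)
    if a.shape != b.shape or a.ndim != 1:
        raise ValueError("expected two 1-D arrays of paired predictions")
    mad = float(np.mean(np.abs(a - b)))  # 0 means identical outputs
    # 1 means a perfect positive linear relationship, not necessarily equal values
    r = float(np.corrcoef(a, b)[0, 1])
    return mad, r


# Hypothetical predicted risk scores for four patients.
mad, r = cross_camera_consistency([0.05, 0.12, 0.20, 0.31], [0.07, 0.10, 0.22, 0.28])
print(f"MAD={mad:.3f}, r={r:.3f}")
```

Reporting both numbers matters: a constant offset between cameras leaves the correlation at 1 while the mean absolute difference exposes the bias.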

Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Lin, Z., Shi, D., Zhang, D., Shang, X., He, M., Ge, Z. (2022). Camera Adaptation for Fundus-Image-Based CVD Risk Estimation. In: Wang, L., Dou, Q., Fletcher, P.T., Speidel, S., Li, S. (eds) Medical Image Computing and Computer Assisted Intervention – MICCAI 2022. MICCAI 2022. Lecture Notes in Computer Science, vol 13432. Springer, Cham. https://doi.org/10.1007/978-3-031-16434-7_57

DOI: https://doi.org/10.1007/978-3-031-16434-7_57

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-16433-0

Online ISBN: 978-3-031-16434-7

eBook Packages: Computer Science, Computer Science (R0)