Abstract

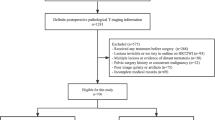

Volumetric images from Magnetic Resonance Imaging (MRI) provide invaluable information for the preoperative staging of rectal cancer. In particular, accurate preoperative discrimination between the T2 and T3 stages is arguably both the most challenging and the most clinically significant task in rectal cancer treatment, as chemo-radiotherapy is usually recommended for patients with T3 (or greater) stage cancer. In this study, we present a volumetric convolutional neural network that discriminates T2 from T3 stage rectal cancer using rectal MR volumes. Specifically, we propose 1) a custom ResNet-based volume encoder that models the inter-slice relationship with late fusion (i.e., 3D convolution at the last layer), 2) a bilinear computation that aggregates the resulting features from the encoder to create a volume-wise feature, and 3) a joint minimization of triplet loss and focal loss. With MR volumes of pathologically confirmed T2/T3 rectal cancer, we perform extensive experiments to compare various designs within the framework of residual learning. As a result, our network achieves an AUC of 0.831, which is higher than the reported accuracy of professional radiologist groups. We believe this method can be extended to other volume analysis tasks.
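To make the third component concrete, the following is a minimal sketch of what a joint focal-plus-triplet objective can look like for a single sample. It is not the authors' implementation: the abstract gives no formulas or hyperparameters, so the function names, the label convention (1 for T3, 0 for T2), the defaults `gamma=2.0`, `alpha=0.25`, `margin=1.0`, and the weighting factor `weight` are all assumptions chosen for illustration. The focal term follows the standard binary form, and the triplet term uses squared Euclidean distance.

```python
import math


def focal_loss(p, y, gamma=2.0, alpha=0.25):
    """Binary focal loss for one sample.

    p is the predicted probability of class 1 (assumed here to mean T3),
    y is the ground-truth label (0 or 1). gamma and alpha are assumed defaults.
    """
    p_t = p if y == 1 else 1.0 - p              # probability of the true class
    alpha_t = alpha if y == 1 else 1.0 - alpha  # class-balancing weight
    # (1 - p_t) ** gamma down-weights samples that are already well classified.
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(max(p_t, 1e-12))


def triplet_loss(anchor, positive, negative, margin=1.0):
    """Triplet loss on volume-wise feature vectors (squared Euclidean distance).

    positive shares the anchor's stage, negative has the other stage.
    """
    d_ap = sum((a - b) ** 2 for a, b in zip(anchor, positive))
    d_an = sum((a - b) ** 2 for a, b in zip(anchor, negative))
    # Zero once the negative is farther than the positive by at least `margin`.
    return max(0.0, d_ap - d_an + margin)


def joint_loss(p, y, anchor, positive, negative, weight=1.0):
    """Hypothetical joint objective: focal term plus weighted triplet term."""
    return focal_loss(p, y) + weight * triplet_loss(anchor, positive, negative)


if __name__ == "__main__":
    # Toy 2-D features standing in for volume-wise features from an encoder.
    print(joint_loss(0.7, 1, [0.0, 0.0], [0.1, 0.0], [2.0, 0.0]))
```

In this sketch the focal term drives the classification output while the triplet term shapes the feature space, pulling same-stage volumes together and pushing different-stage volumes apart; how the two terms are actually balanced in the paper is not stated in the abstract.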

J. Lee and J. Oh—These authors contributed equally to this work.

T.-s. Kim and I. S. Kweon—These co-corresponding authors contributed equally to this work.

Acknowledgments

The authors would like to thank Young Sang Choi for his helpful feedback. This work was supported by KAIST R&D Program (KI Meta-Convergence Program) 2020 through Korea Advanced Institute of Science and Technology (KAIST), a grant from the National Cancer Center (NCC2010310-1), and the National Research Foundation of Korea (NRF) grant funded by the Korea government (MSIT) (NRF-2020R1C1C1012905).

Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Lee, J. et al. (2022). Moving from 2D to 3D: Volumetric Medical Image Classification for Rectal Cancer Staging. In: Wang, L., Dou, Q., Fletcher, P.T., Speidel, S., Li, S. (eds) Medical Image Computing and Computer Assisted Intervention – MICCAI 2022. MICCAI 2022. Lecture Notes in Computer Science, vol 13433. Springer, Cham. https://doi.org/10.1007/978-3-031-16437-8_75

DOI: https://doi.org/10.1007/978-3-031-16437-8_75

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-16436-1

Online ISBN: 978-3-031-16437-8

eBook Packages: Computer Science, Computer Science (R0)