Abstract

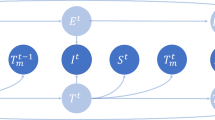

Vision-based segmentation of the robotic tool during robot-assisted surgery enables downstream applications, such as augmented reality feedback, while allowing for inaccuracies in robot kinematics. With the introduction of deep learning, many methods have been presented that solve instrument segmentation directly and solely from images. While these approaches have made remarkable progress on benchmark datasets, fundamental challenges pertaining to their robustness remain. We present CaRTS, a causality-driven robot tool segmentation algorithm designed based on a complementary causal model of the robot tool segmentation task. Rather than directly inferring segmentation masks from observed images, CaRTS iteratively aligns tool models with image observations by updating the initially incorrect robot kinematic parameters through forward kinematics and differentiable rendering, optimizing image feature similarity end-to-end. We benchmark CaRTS against competing techniques on both synthetic and real data from the dVRK (da Vinci Research Kit), generated in precisely controlled scenarios to allow for counterfactual synthesis. On training-domain test data, CaRTS achieves a Dice score of 93.4, which is well preserved (Dice score of 91.8) on counterfactually altered test data exhibiting low brightness, smoke, blood, and altered background patterns. This compares favorably to the Dice scores of 95.0 and 86.7, respectively, of the state-of-the-art (SOTA) image-based method. Future work will involve accelerating CaRTS to achieve video frame rate and estimating the impact that occlusion has in practice. Despite these limitations, our results are promising: in addition to achieving high segmentation accuracy, CaRTS provides estimates of the true robot kinematics, which may benefit applications such as force estimation. Code is available at: https://github.com/hding2455/CaRTS.
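To make the alignment idea concrete, here is a toy sketch under heavy simplifying assumptions; it is not the CaRTS implementation. A hypothetical 2-link planar arm stands in for the dVRK instrument, a soft silhouette built from Gaussian blobs stands in for a mesh-based differentiable renderer, pixel-wise squared error stands in for learned image feature similarity, and central finite differences stand in for autodiff. The names `forward_kinematics`, `render`, `loss`, `gradient`, and `align`, and all numeric constants, are illustrative only.

```python
import math

# Hypothetical toy "tool": a 2-link planar arm (all values are assumptions).
LINKS = (10.0, 8.0)   # link lengths in pixels
BASE = (4.0, 12.0)    # base position in image coordinates
SIZE = 24             # rendered images are SIZE x SIZE
SIGMA = 1.5           # width of the soft silhouette
SAMPLES = 8           # points sampled along each link


def forward_kinematics(q):
    """Map joint angles (q1, q2) to base, elbow and tip positions."""
    x0, y0 = BASE
    x1 = x0 + LINKS[0] * math.cos(q[0])
    y1 = y0 + LINKS[0] * math.sin(q[0])
    x2 = x1 + LINKS[1] * math.cos(q[0] + q[1])
    y2 = y1 + LINKS[1] * math.sin(q[0] + q[1])
    return [(x0, y0), (x1, y1), (x2, y2)]


def render(q):
    """Soft silhouette: each pixel is a soft union of Gaussian blobs on the links."""
    joints = forward_kinematics(q)
    points = []
    for (ax, ay), (bx, by) in zip(joints, joints[1:]):
        for k in range(SAMPLES):
            t = k / (SAMPLES - 1)
            points.append((ax + t * (bx - ax), ay + t * (by - ay)))
    image = []
    for v in range(SIZE):
        for u in range(SIZE):
            background = 1.0  # probability that no blob covers pixel (u, v)
            for px, py in points:
                d2 = (u - px) ** 2 + (v - py) ** 2
                background *= 1.0 - math.exp(-d2 / (2.0 * SIGMA ** 2))
            image.append(1.0 - background)
    return image


def loss(q, observed):
    """Sum of squared differences between rendered and observed silhouettes."""
    return sum((r - o) ** 2 for r, o in zip(render(q), observed))


def gradient(q, observed, eps=1e-4):
    """Central finite differences, used here instead of autodiff through the renderer."""
    grad = []
    for i in range(len(q)):
        plus, minus = list(q), list(q)
        plus[i] += eps
        minus[i] -= eps
        grad.append((loss(plus, observed) - loss(minus, observed)) / (2 * eps))
    return grad


def align(q_init, observed, iterations=25, step=0.1):
    """Refine joint angles by normalized gradient descent with backtracking."""
    q, current = list(q_init), loss(q_init, observed)
    for _ in range(iterations):
        g = gradient(q, observed)
        norm = math.hypot(*g)
        if norm == 0.0:
            break
        while step > 1e-8:
            candidate = [qi - step * gi / norm for qi, gi in zip(q, g)]
            value = loss(candidate, observed)
            if value < current:
                q, current = candidate, value
                step *= 1.5  # accepted: try a slightly larger step next time
                break
            step *= 0.5      # overshoot: shrink the step and retry
        else:
            break  # no improving step found; treat as converged
    return q, current


q_true = (0.3, 0.6)           # hypothetical true joint angles
observed = render(q_true)     # synthetic "image observation" of the tool
q_init = (0.5, 0.4)           # hypothetical, initially incorrect kinematic reading
q_est, final_loss = align(q_init, observed)
print("initial loss:", loss(q_init, observed), "final loss:", final_loss)
```

The backtracking step only accepts updates that lower the loss, so the refined angles never fit the observation worse than the initial reading. A real system would replace the finite differences with gradients from a differentiable renderer and compare learned image features rather than raw silhouettes.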
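The Dice score used above is the standard overlap measure 2|A ∩ B| / (|A| + |B|) between a predicted mask A and a ground-truth mask B, reported here as a percentage. The short sketch below computes it for flat binary masks and then works out the drop from training-domain to counterfactual test data using only the scores stated in the abstract. The function name `dice` and the convention of returning 100 for two empty masks are assumptions for illustration.

```python
def dice(pred, target):
    """Dice score in percent between two binary masks given as flat 0/1 lists."""
    intersection = sum(p * t for p, t in zip(pred, target))
    total = sum(pred) + sum(target)
    # Assumed convention: two empty masks count as a perfect match.
    return 100.0 * 2 * intersection / total if total else 100.0


print(dice([1, 1, 1, 0], [1, 1, 0, 0]))  # 80.0

# (training-domain Dice, counterfactual Dice) as reported in the abstract
reported = {"CaRTS": (93.4, 91.8), "SOTA image-based": (95.0, 86.7)}
drops = {name: a - b for name, (a, b) in reported.items()}
for name, (in_domain, _) in reported.items():
    drop = drops[name]
    print(f"{name}: drop {drop:.1f} points ({100 * drop / in_domain:.1f}% relative)")
# CaRTS: drop 1.6 points (1.7% relative)
# SOTA image-based: drop 8.3 points (8.7% relative)
```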

Acknowledgement

This research is supported by a collaborative research agreement with the MultiScale Medical Robotics Center at The Chinese University of Hong Kong.

Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Ding, H., Zhang, J., Kazanzides, P., Wu, J.Y., Unberath, M. (2022). CaRTS: Causality-Driven Robot Tool Segmentation from Vision and Kinematics Data. In: Wang, L., Dou, Q., Fletcher, P.T., Speidel, S., Li, S. (eds) Medical Image Computing and Computer Assisted Intervention – MICCAI 2022. MICCAI 2022. Lecture Notes in Computer Science, vol 13437. Springer, Cham. https://doi.org/10.1007/978-3-031-16449-1_37

DOI: https://doi.org/10.1007/978-3-031-16449-1_37

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-16448-4

Online ISBN: 978-3-031-16449-1

eBook Packages: Computer Science, Computer Science (R0)