Abstract

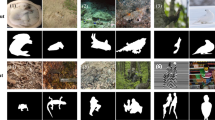

Adversarial attacks against object detectors have recently become an active research topic in academia. However, digital adversarial attacks must generate pixel-wise perturbations on digital images, which are difficult to reproduce accurately in the real world. Physical adversarial attacks usually require pasting adversarial patches onto the surface of each target object one by one, which is unsuitable for objects with complex shapes and hard to deploy in practice. In this paper, we propose a universal background adversarial attack against deep learning object detectors: the target objects are placed on a universal background image, which changes the local pixel information around them so that the detectors fail to recognize them. Because the attack takes the form of a single universal background image, it is easy to deploy in the physical world. One universal background image can attack target objects of different classes simultaneously, and it remains robust across different viewing angles and distances. Extensive experiments show that the universal background attack successfully attacks two object detection models, YOLO v3 and Faster R-CNN, with average success rates of 74.9% and 67.8%, respectively, at distances from 15 cm to 60 cm and angles from \(-90^\circ\) to \(90^\circ\) in the physical world.
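The abstract describes the attack only at a high level. As a rough illustration of the general idea (one shared background optimized so that a detector's confidence drops for several different objects pasted onto it), the sketch below makes strong simplifying assumptions that do not come from the paper: images are flattened grayscale lists, the detector is a hypothetical sigmoid-linear "objectness" score standing in for YOLO v3 or Faster R-CNN, updates use signed-gradient descent, and averaging over a few object placements is a crude stand-in for robustness across objects, angles, and distances. All function names (`compose`, `toy_detector_score`, `optimise_background`) are hypothetical; this is not the authors' method.

```python
import math
import random


def compose(background, obj, mask):
    """Paste the object onto the background: object pixels where mask == 1,
    background pixels everywhere else."""
    return [o if m else b for b, o, m in zip(background, obj, mask)]


def toy_detector_score(image, weights, bias):
    """Hypothetical stand-in for a detector's confidence: sigmoid(w . x + b).
    A real attack would query YOLO v3 / Faster R-CNN here instead."""
    z = sum(w * x for w, x in zip(weights, image)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def score_grad_wrt_background(image, mask, weights, bias):
    """d(score)/d(pixel) for the sigmoid-linear model, zeroed on object pixels
    because only background pixels are optimised."""
    s = toy_detector_score(image, weights, bias)
    return [0.0 if m else s * (1.0 - s) * w for w, m in zip(weights, mask)]


def optimise_background(background, scenes, weights, bias, steps=50, lr=0.02):
    """Signed-gradient descent on one shared background, averaging gradients
    over several (object, mask) scenes so the result is 'universal'."""
    bg = list(background)
    for _ in range(steps):
        avg = [0.0] * len(bg)
        for obj, mask in scenes:
            g = score_grad_wrt_background(compose(bg, obj, mask), mask, weights, bias)
            avg = [a + gi / len(scenes) for a, gi in zip(avg, g)]
        # Step against the gradient sign, keeping pixels in the valid [0, 1] range.
        bg = [min(1.0, max(0.0, b - lr * (1 if a > 0 else -1 if a < 0 else 0)))
              for b, a in zip(bg, avg)]
    return bg


# Demo with made-up data: a 64-pixel "image" and two hypothetical objects
# placed at different positions on the same background.
random.seed(0)
N = 64
weights = [random.uniform(-1, 1) for _ in range(N)]
bias = 1.0


def make_scene(start, length):
    mask = [1 if start <= i < start + length else 0 for i in range(N)]
    obj = [random.random() if m else 0.0 for m in mask]
    return obj, mask


scenes = [make_scene(10, 8), make_scene(40, 12)]
bg0 = [0.5] * N
bg = optimise_background(bg0, scenes, weights, bias)
before = [toy_detector_score(compose(bg0, o, m), weights, bias) for o, m in scenes]
after = [toy_detector_score(compose(bg, o, m), weights, bias) for o, m in scenes]
```

Only background pixels are ever modified, which mirrors the abstract's point that the attack changes the pixels around the target objects rather than the objects themselves. A physical attack would additionally need printability constraints and realistic transformations, which this toy omits.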

Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Xu, Y., Wang, J., Li, Y., Wang, Y., Xu, Z., Wang, D. (2022). Universal Physical Adversarial Attack via Background Image. In: Zhou, J., et al. Applied Cryptography and Network Security Workshops. ACNS 2022. Lecture Notes in Computer Science, vol 13285. Springer, Cham. https://doi.org/10.1007/978-3-031-16815-4_1

DOI: https://doi.org/10.1007/978-3-031-16815-4_1

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-16814-7

Online ISBN: 978-3-031-16815-4

eBook Packages: Computer Science, Computer Science (R0)