Abstract

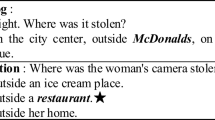

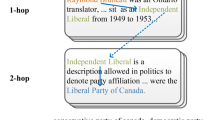

Conventional Machine Reading Comprehension (MRC) has been well-addressed by pattern matching, but commonsense reasoning ability remains a gap between humans and machines. Previous methods tackle this problem by enriching word representations with pretrained Knowledge Graph Embeddings (KGE). However, they make limited use of the large number of connections between nodes in Knowledge Graphs (KG), which can be pivotal cues for building commonsense reasoning chains. In this paper, we propose a Plug-and-play module to IncorporatE Connection information for commonsEnse Reasoning (PIECER). Beyond enriching word representations with knowledge embeddings, PIECER constructs a joint query-passage graph to explicitly guide commonsense reasoning through the knowledge-oriented connections between words. Further, PIECER generalizes well, since it can be plugged into any MRC model. Experimental results on ReCoRD, a large-scale public MRC dataset requiring commonsense reasoning, show that PIECER introduces stable performance improvements for four representative base MRC models, especially in low-resource settings. The code is available at https://github.com/Hunter-DDM/piecer.
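To make the idea of a joint query-passage graph concrete, the following is a minimal sketch and not the PIECER implementation. It assumes that two words are connected whenever a knowledge graph triple links them in either direction. The triple set `TOY_KG`, the function `build_joint_graph`, and the example sentences are all hypothetical names and data introduced for illustration only.

```python
from itertools import combinations

# Hypothetical toy triples (head, relation, tail) standing in for a real KG.
TOY_KG = {
    ("rain", "Causes", "wet"),
    ("umbrella", "UsedFor", "rain"),
}


def build_joint_graph(query_tokens, passage_tokens, kg_triples):
    """Return (nodes, edges) for a joint query-passage word graph.

    nodes: list of (segment, position, token) tuples, query tokens first.
    edges: set of (i, j) node-index pairs with i < j whose tokens are
           linked by at least one triple in kg_triples (either direction).
    This connection rule is an assumption made for this sketch.
    """
    nodes = [("query", i, t.lower()) for i, t in enumerate(query_tokens)]
    nodes += [("passage", i, t.lower()) for i, t in enumerate(passage_tokens)]

    # Undirected lookup table of word pairs that the KG connects.
    linked = set()
    for head, _relation, tail in kg_triples:
        linked.add((head, tail))
        linked.add((tail, head))

    # Connect every pair of nodes whose tokens are linked in the KG,
    # regardless of whether they sit in the query or the passage.
    edges = set()
    for i, j in combinations(range(len(nodes)), 2):
        if (nodes[i][2], nodes[j][2]) in linked:
            edges.add((i, j))
    return nodes, edges


query = ["Why", "is", "the", "street", "wet"]
passage = ["She", "opened", "her", "umbrella", "in", "the", "rain"]
nodes, edges = build_joint_graph(query, passage, TOY_KG)
print(sorted(edges))
```

In this toy case the graph links "wet" in the query to "rain" in the passage, and "umbrella" to "rain" within the passage, which is the kind of connection a downstream graph layer could use as a reasoning cue.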

Acknowledgement

This paper is supported by the National Key Research and Development Program of China 2020AAA0106701 and NSFC project U19A2065.

Copyright information

© 2022 Springer Nature Switzerland AG

About this paper

Cite this paper

Dai, D., Zheng, H., Sui, Z., Chang, B. (2022). Plug-and-Play Module for Commonsense Reasoning in Machine Reading Comprehension. In: Lu, W., Huang, S., Hong, Y., Zhou, X. (eds) Natural Language Processing and Chinese Computing. NLPCC 2022. Lecture Notes in Computer Science, vol 13552. Springer, Cham. https://doi.org/10.1007/978-3-031-17189-5_3

DOI: https://doi.org/10.1007/978-3-031-17189-5_3

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-17188-8

Online ISBN: 978-3-031-17189-5

eBook Packages: Computer Science, Computer Science (R0)