Abstract

Open set recognition (OSR) aims to simultaneously identify known classes and reject unknown classes. However, existing research on open set recognition is usually based on single-modal data. Single-modal perception is susceptible to external interference, which may cause incorrect recognition. Multi-modal perception can improve OSR performance thanks to the complementarity between different modalities. We therefore propose a new multi-modal open set recognition (MMOSR) method in this paper. The MMOSR network is constructed with joint metric learning in logit space, which avoids the feature representation gap between different modalities and allows the decision boundaries to be estimated effectively. Moreover, an entropy-based adaptive weight fusion method is developed to combine the multi-modal perception information. The weights of different modalities are automatically determined according to the entropy in the logit space: a larger entropy leads to a smaller weight for the corresponding modality, which effectively limits the influence of disturbances. Scaling the fused logits by the single-modal relative reachability further enhances the unknown detection ability. Experiments show that our method achieves more robust open set recognition performance with multi-modal input than other methods.
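The entropy-based weighting described in the abstract can be illustrated with a minimal sketch. The abstract does not give the exact formulas, so everything here is an assumption made for illustration: a softmax turns each modality's logits into probabilities, Shannon entropy is computed on those probabilities, and the weights are a softmax over the negative entropies. The function names and the `visual`/`haptic` inputs are hypothetical, and the relative-reachability scaling is not modelled.

```python
import math


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def entropy(probs):
    """Shannon entropy (natural log) of a probability vector."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def entropy_weights(logits_per_modality):
    """Assumed rule: weight_m is proportional to exp(-H_m),
    so a modality with larger entropy receives a smaller weight."""
    entropies = [entropy(softmax(logits)) for logits in logits_per_modality]
    return softmax([-h for h in entropies])


def fuse_logits(logits_per_modality):
    """Weighted sum of per-modality logits using the entropy-based weights."""
    weights = entropy_weights(logits_per_modality)
    n_classes = len(logits_per_modality[0])
    fused = [
        sum(w * logits[k] for w, logits in zip(weights, logits_per_modality))
        for k in range(n_classes)
    ]
    return fused, weights


# Hypothetical inputs: one confident modality and one disturbed, near-uniform one.
visual = [4.0, 0.5, 0.2]
haptic = [1.0, 0.9, 1.1]
fused, weights = fuse_logits([visual, haptic])
```

In this toy input the near-uniform modality has the higher entropy and therefore contributes less to the fused logits, which matches the qualitative behaviour the abstract describes. Other monotonically decreasing mappings from entropy to weight would serve the same purpose.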

References

Simonyan, K., Zisserman, A.: Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556 (2014)

He, K., Zhang, X., Ren, S., Sun, J.: Deep residual learning for image recognition. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 770–778 (2016)

Scheirer, W.J., de Rezende Rocha, A., Sapkota, A., Boult, T.E.: Toward open set recognition. IEEE Trans. Pattern Anal. Mach. Intell. 35(7), 1757–1772 (2012)

Baltrušaitis, T., Ahuja, C., Morency, L.P.: Multimodal machine learning: a survey and taxonomy. IEEE Trans. Pattern Anal. Mach. Intell. 41(2), 423–443 (2018)

Hong, D., et al.: More diverse means better: multimodal deep learning meets remote-sensing imagery classification. IEEE Trans. Geosci. Remote Sens. 59(5), 4340–4354 (2020)

Feng, D., et al.: Deep multi-modal object detection and semantic segmentation for autonomous driving: datasets, methods, and challenges. IEEE Trans. Intell. Transp. Syst. 22(3), 1341–1360 (2020)

Elmadany, N.E.D., He, Y., Guan, L.: Multimodal learning for human action recognition via bimodal/multimodal hybrid centroid canonical correlation analysis. IEEE Trans. Multimedia 21(5), 1317–1331 (2018)

Hadsell, R., Chopra, S., LeCun, Y.: Dimensionality reduction by learning an invariant mapping. In: 2006 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR2006), vol. 2, pp. 1735–1742. IEEE (2006)

Schroff, F., Kalenichenko, D., Philbin, J.: FaceNet: a unified embedding for face recognition and clustering. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 815–823 (2015)

Sohn, K.: Improved deep metric learning with multi-class n-pair loss objective. In: Advances in Neural Information Processing Systems, pp. 1857–1865 (2016)

Wen, Y., Zhang, K., Li, Z., Qiao, Yu.: A discriminative feature learning approach for deep face recognition. In: Leibe, B., Matas, J., Sebe, N., Welling, M. (eds.) ECCV 2016. LNCS, vol. 9911, pp. 499–515. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-46478-7_31

He, X., Zhou, Y., Zhou, Z., Bai, S., Bai, X.: Triplet-center loss for multi-view 3D object retrieval. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 1945–1954 (2018)

Cai, J., Meng, Z., Khan, A.S., Li, Z., O’Reilly, J., Tong, Y.: Island loss for learning discriminative features in facial expression recognition. In: 2018 13th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2018), pp. 302–309. IEEE (2018)

Cevikalp, H.: Best fitting hyperplanes for classification. IEEE Trans. Pattern Anal. Mach. Intell. 39(6), 1076 (2017)

Bendale, A., Boult, T.: Towards open world recognition. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 1893–1902 (2015)

Mendes Júnior, R.R., et al.: Nearest neighbors distance ratio open-set classifier. Mach. Learn. 106(3), 359–386 (2016). https://doi.org/10.1007/s10994-016-5610-8

Bendale, A., Boult, T.E.: Towards open set deep networks. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 1563–1572 (2016)

Yoshihashi, R., Shao, W., Kawakami, R., You, S., Iida, M., Naemura, T.: Classification-reconstruction learning for open-set recognition. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 4016–4025 (2019)

Ge, Z., Demyanov, S., Chen, Z., Garnavi, R.: Generative openMax for multi-class open set classification. arXiv preprint arXiv:1707.07418 (2017)

Neal, L., Olson, M., Fern, X., Wong, W.-K., Li, F.: Open set learning with counterfactual images. In: Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y. (eds.) ECCV 2018. LNCS, vol. 11210, pp. 620–635. Springer, Cham (2018). https://doi.org/10.1007/978-3-030-01231-1_38

Oza, P., Patel, V.M.: C2AE: class conditioned auto-encoder for open-set recognition. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 2307–2316 (2019)

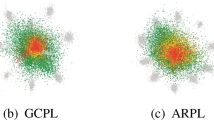

Yang, H.M., Zhang, X.Y., Yin, F., Liu, C.L.: Robust classification with convolutional prototype learning. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 3474–3482 (2018)

Chen, G., et al.: Learning open set network with discriminative reciprocal points. In: Vedaldi, A., Bischof, H., Brox, T., Frahm, J.-M. (eds.) ECCV 2020. LNCS, vol. 12348, pp. 507–522. Springer, Cham (2020). https://doi.org/10.1007/978-3-030-58580-8_30

Miller, D., Sunderhauf, N., Milford, M., Dayoub, F.: Class anchor clustering: a loss for distance-based open set recognition. In: Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, pp. 3570–3578 (2021)

Savinov, N., et al.: Episodic curiosity through reachability. arXiv preprint arXiv:1810.02274 (2018)

Strese, M., Schuwerk, C., Iepure, A., Steinbach, E.: Multimodal feature-based surface material classification. IEEE Trans. Haptics 10(2), 226–239 (2016)

Zheng, H., Fang, L., Ji, M., Strese, M., Özer, Y., Steinbach, E.: Deep learning for surface material classification using haptic and visual information. IEEE Trans. Multimedia 18(12), 2407–2416 (2016)

Dhamija, A.R., Günther, M., Boult, T.E.: Reducing network agnostophobia. arXiv preprint arXiv:1811.04110 (2018)

Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Fu, Y., Liu, Z., Yang, Y., Xu, L., Lan, H. (2022). Adaptive Open Set Recognition with Multi-modal Joint Metric Learning. In: Yu, S., et al. Pattern Recognition and Computer Vision. PRCV 2022. Lecture Notes in Computer Science, vol 13534. Springer, Cham. https://doi.org/10.1007/978-3-031-18907-4_49

DOI: https://doi.org/10.1007/978-3-031-18907-4_49

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-18906-7

Online ISBN: 978-3-031-18907-4

eBook Packages: Computer Science, Computer Science (R0)