Abstract

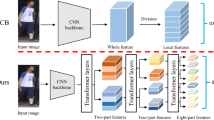

Person re-identification (ReID) is the computer vision task of retrieving a person of interest across multiple non-overlapping cameras. Most existing methods are built on convolutional neural networks trained with supervision, which may miss subtle visual cues and global information because of pooling operations and the weight-sharing mechanism. To address this, we propose a novel Transformer-based ReID method that pre-trains with Masked Autoencoders and fine-tunes with locality enhancement. In our method, Masked Autoencoders are pre-trained in a self-supervised way on large-scale unlabeled data, so that subtle visual cues can be learned through the pixel-level reconstruction loss. With the Transformer backbone, global features are extracted as the classification token, which integrates information from different patches through the self-attention mechanism. To take full advantage of local information, the proposed locality enhancement module reshapes patch tokens into a feature map and applies convolution to extract local features. Global and local features are then combined to obtain more robust representations of person images for inference. To the best of our knowledge, this is the first work to utilize generative self-supervised learning for representation learning in ReID. Experiments show that the proposed method is competitive with the state of the art in terms of parameter scale, computation overhead, and ReID performance. Code is available at https://github.com/YanzuoLu/MALE.
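The data flow described above (classification token as the global feature, patch tokens reshaped into a feature map for convolution, and the two combined) can be illustrated with a minimal pure-Python sketch. This is not the authors' implementation: all function names are hypothetical, a fixed zero-padded 3 × 3 averaging filter stands in for the learned convolution, and the use of global average pooling followed by concatenation is an assumption, since the abstract only states that the features are "combined".

```python
def tokens_to_feature_map(patch_tokens, height, width):
    """Reshape a flat list of H*W patch tokens into an H x W grid (row-major)."""
    if len(patch_tokens) != height * width:
        raise ValueError("number of patch tokens must equal height * width")
    return [patch_tokens[r * width:(r + 1) * width] for r in range(height)]


def conv3x3_mean(feature_map):
    """Zero-padded 3x3 averaging filter applied per channel.

    Assumption: this fixed filter is only a stand-in for a learned convolution.
    """
    h, w = len(feature_map), len(feature_map[0])
    dim = len(feature_map[0][0])
    out = [[[0.0] * dim for _ in range(w)] for _ in range(h)]
    for r in range(h):
        for c in range(w):
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    rr, cc = r + dr, c + dc
                    # Out-of-bounds neighbours contribute zero (zero padding).
                    if 0 <= rr < h and 0 <= cc < w:
                        for d in range(dim):
                            out[r][c][d] += feature_map[rr][cc][d] / 9.0
    return out


def global_average_pool(feature_map):
    """Average all spatial positions into a single vector."""
    h, w = len(feature_map), len(feature_map[0])
    dim = len(feature_map[0][0])
    pooled = [0.0] * dim
    for row in feature_map:
        for token in row:
            for d in range(dim):
                pooled[d] += token[d] / (h * w)
    return pooled


def combine_features(tokens, height, width):
    """Concatenate the class token (global) with a pooled local feature.

    tokens[0] is taken to be the classification token; tokens[1:] are the
    patch tokens. Concatenation is an assumed way of combining the two.
    """
    cls_token, patch_tokens = tokens[0], tokens[1:]
    feature_map = tokens_to_feature_map(patch_tokens, height, width)
    local_feature = global_average_pool(conv3x3_mean(feature_map))
    return list(cls_token) + local_feature
```

For example, calling `combine_features(tokens, 2, 2)` on one class token plus four patch tokens of dimension 2 returns a vector of length 4: the first two entries are the class token and the last two are the pooled local feature.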



Acknowledgments

This work was supported partially by NSFC (No. 61906218), Guangdong Basic and Applied Basic Research Foundation (No. 2020A1515011497), and Science and Technology Program of Guangzhou (No. 202002030371).


Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Lu, Y., Zhang, M., Lin, Y., Ma, A.J., Xie, X., Lai, J. (2022). Improving Pre-trained Masked Autoencoder via Locality Enhancement for Person Re-identification. In: Yu, S., et al. Pattern Recognition and Computer Vision. PRCV 2022. Lecture Notes in Computer Science, vol 13535. Springer, Cham. https://doi.org/10.1007/978-3-031-18910-4_41


DOI: https://doi.org/10.1007/978-3-031-18910-4_41


Publisher Name: Springer, Cham

Print ISBN: 978-3-031-18909-8

Online ISBN: 978-3-031-18910-4

eBook Packages: Computer Science, Computer Science (R0)